Lecture 17: Attention

17.1 Limitations of Sequence-to-Sequence with RNNs

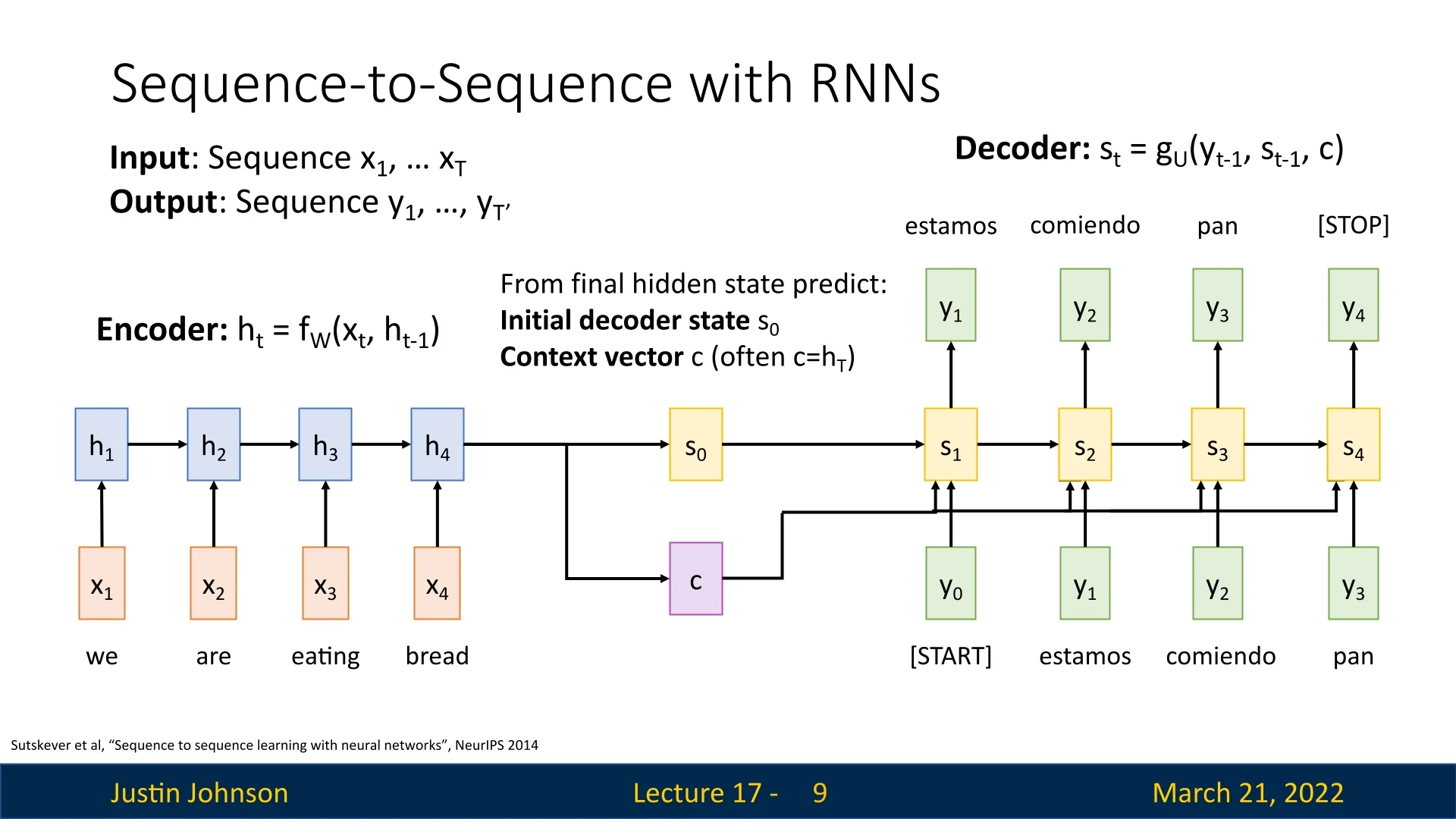

Previously, recurrent neural networks (RNNs) were used for sequence-to-sequence tasks such as machine translation. The encoder processed the input sequence and produced:

- A final hidden state \(s_0\), used as the first hidden state of the decoder network.

- A single, finite context vector \(c\) (often \(c = h_T\)), used at each step as input to the decoder.

The decoder then used \(s_0\) and \(c\) to generate an output sequence, starting with a <START> token and continuing until a <STOP> token was produced. The context vector \(c\) acted as a summary of the entire input sequence, transferring information from encoder to decoder.

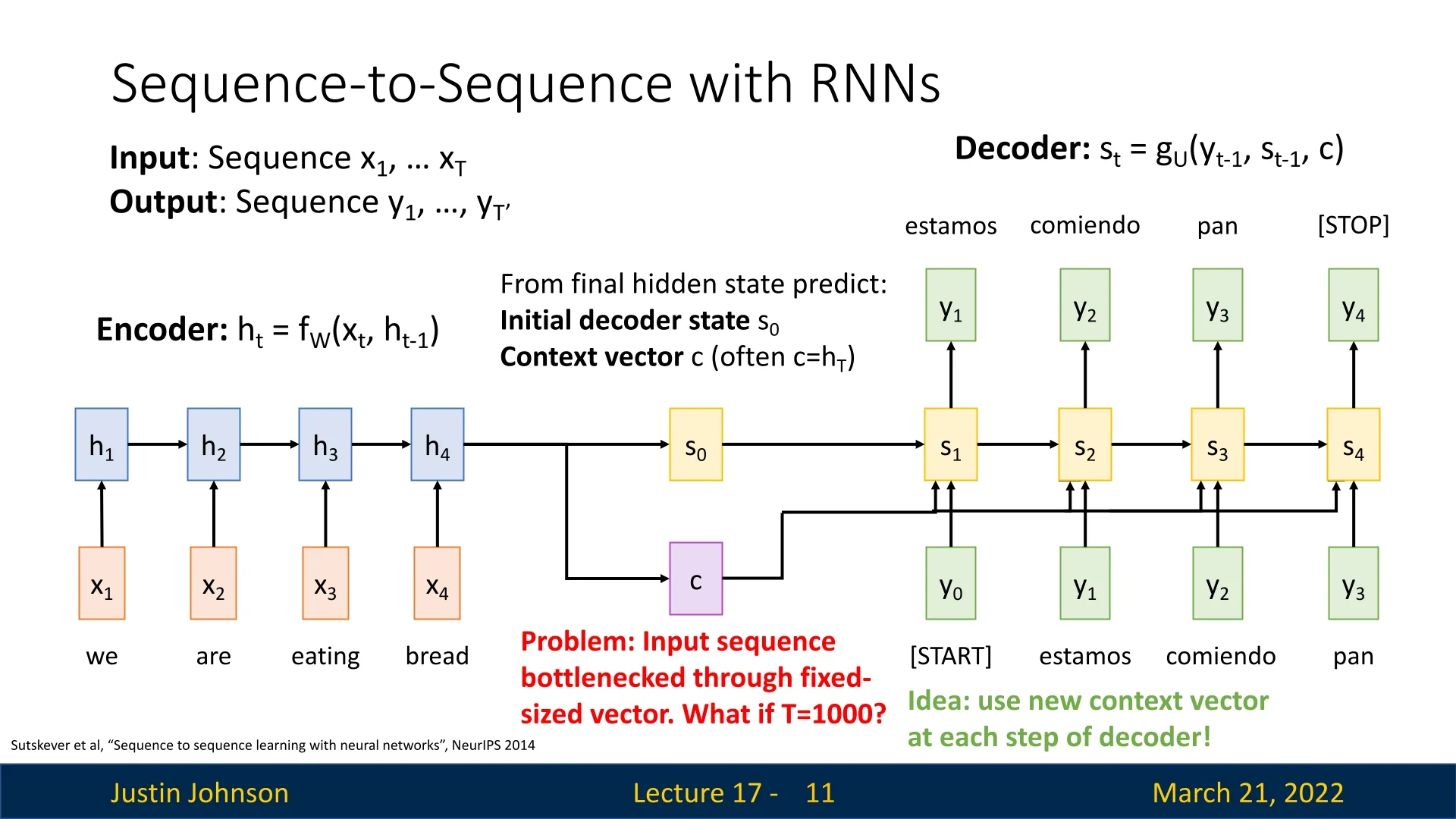

While effective for short sequences, this approach faces issues when processing long sequences, as the fixed-size context vector \(c\) becomes a bottleneck, limiting the amount of information that can be retained and transferred.

Even more advanced architectures like Long Short-Term Memory (LSTM) networks suffer from this issue because they still rely on the fixed-size \(c\) and \(h_T\) representations that do not scale with sequence length.

17.2 Introducing the Attention Mechanism

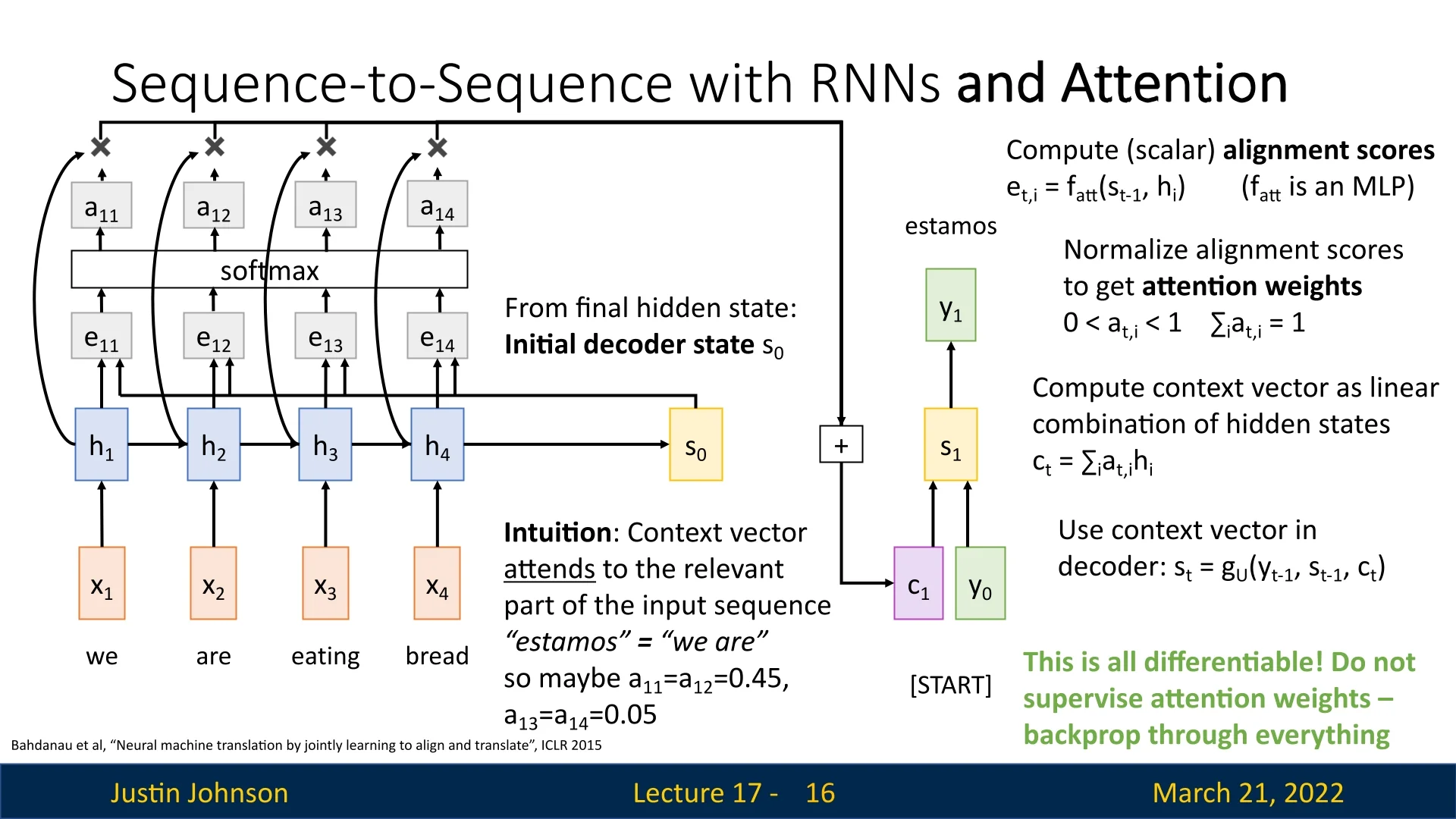

To address the bottleneck issue, the Attention mechanism introduces dynamically computed context vectors at each decoder step, instead of a single fixed vector. The encoder still processes the sequence to generate hidden states \(h_1, h_2, \dots , h_T\), but instead of passing only \(h_T\), an alignment function is used to determine which encoder hidden states are most relevant at each decoder step.

The key idea: Rather than using a single context vector for all decoder steps, the model computes a new context vector at each step by attending to different parts of the input sequence. This is done through a learnable alignment mechanism, historically known as additive attention or Bahdanau attention [22]:

\begin {equation} e_{t,i} = f_{att}(s_{t-1}, h_i), \end {equation} where \(f_{att}\) is a small, fully connected neural network. This function takes two inputs:

- The current hidden state of the decoder \(s_{t-1}\).

- A hidden state of the encoder \(h_i\).

By applying the function many times, over all encoder hidden states, it results in a set of alignment scores \(e_{t,1}, e_{t,2}, \dots , e_{t,T}\), where each score represents how relevant the corresponding encoder hidden state is to the current decoder step.

Applying the softmax function converts these alignment scores into attention weights: \begin {equation} a_{t,i} = \frac {\exp (e_{t,i})}{\sum _{j=1}^{T} \exp (e_{t,j})}, \end {equation} which ensures all attention weights are between \([0,1]\) and sum to \(1\).

The new context vector \(c_t\) is computed as a weighted sum of encoder hidden states: \begin {equation} c_t = \sum _{i=1}^{T} a_{t,i} h_i. \end {equation}

This means that at every decoding step, the decoder dynamically attends to different parts of the input sequence, adapting its focus based on the content being generated. Later in this chapter, we will contrast this additive, network-based scoring function with dot-product and scaled dot-product attention mechanisms, which replace \(f_{att}\) by simple inner products for improved computational efficiency in modern architectures such as Transformers.

Intuition Behind Attention

Instead of relying on a single compressed context vector, attention allows the model to focus on the most relevant parts of the input sequence dynamically. For example, when translating "we are eating bread" to "estamos comiendo pan", the attention weights at the first step might be: \begin {equation} a_{11} = a_{12} = 0.45, \quad a_{13} = a_{14} = 0.05. \end {equation} This means the model places greater emphasis on the words "we are" when producing "estamos", and will shift attention accordingly as it generates more words.

17.2.1 Benefits of Attention

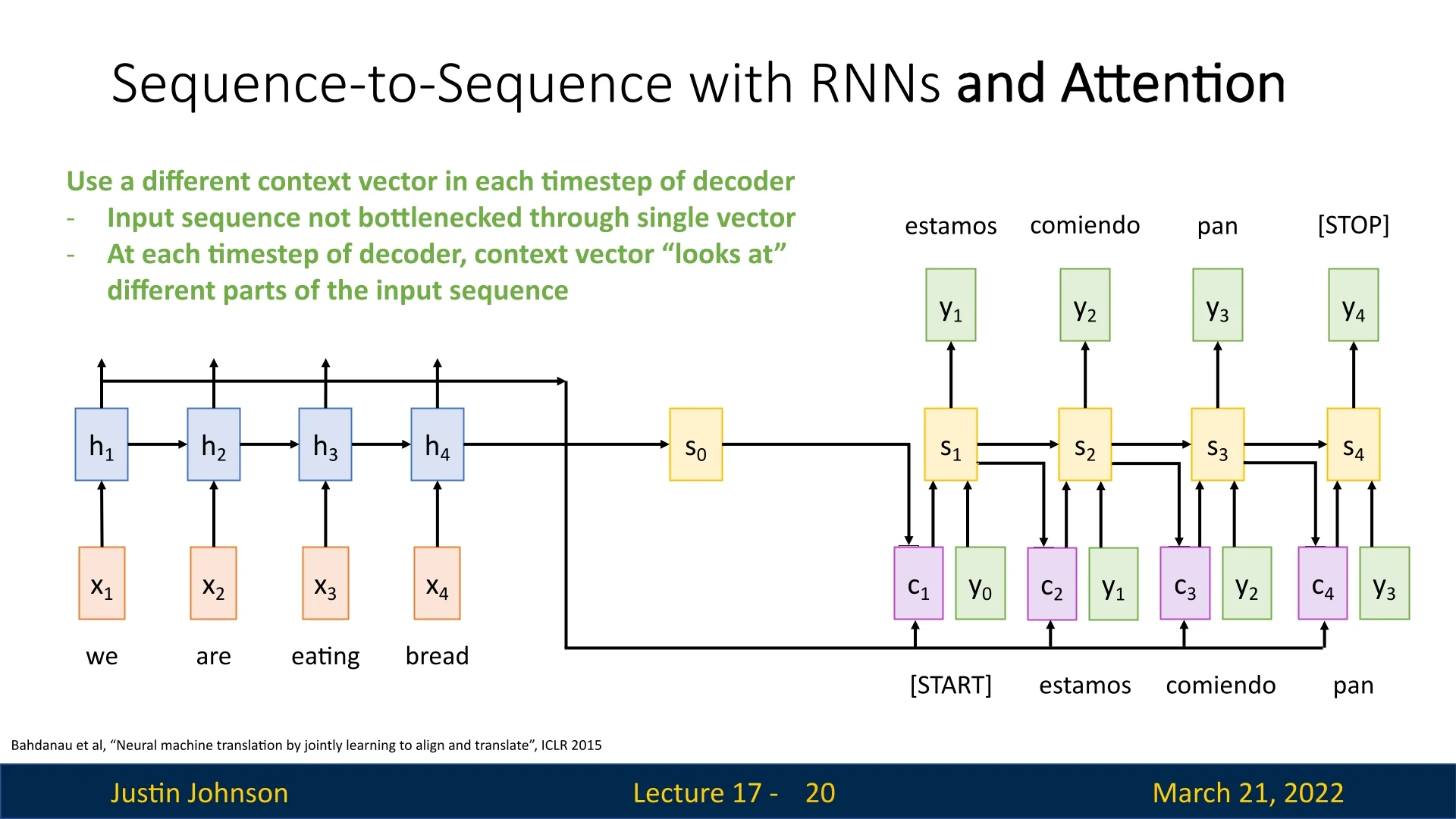

Attention mechanisms improve sequence-to-sequence models in several ways:

- Eliminates the bottleneck: The input sequence is no longer constrained by a single fixed-size context vector.

- Dynamic focus: Each decoder step attends to different parts of the input, rather than relying on a static summary.

- Improved alignment: The model learns to associate corresponding elements in input and output sequences automatically.

Additionally, attention mechanisms are fully differentiable, meaning they can be trained end-to-end via backpropagation without explicit supervision of attention weights.

17.2.2 Attention Interpretability

The introduction of attention mechanisms has revolutionized sequence-to-sequence learning by addressing the limitations of a fixed-size context vector. Rather than relying on a static summary of the input, attention dynamically selects relevant information at each decoding step, significantly improving performance on long sequences and enabling models to learn meaningful alignments between input and output sequences.

One of the key advantages of attention is its interpretability. By visualizing attention maps, we can gain deeper insight into how the model aligns different parts of the input with the generated output. These maps illustrate how the network distributes its focus across the input sequence at each step, offering a way to diagnose errors and refine architectures for specific tasks.

Attention Maps: Visualizing Model Decisions

A particularly interesting property of attention is that it provides an interpretable way to analyze how different parts of the input sequence influence each predicted output token. This is achieved through attention maps, which depict the attention weights as a structured matrix.

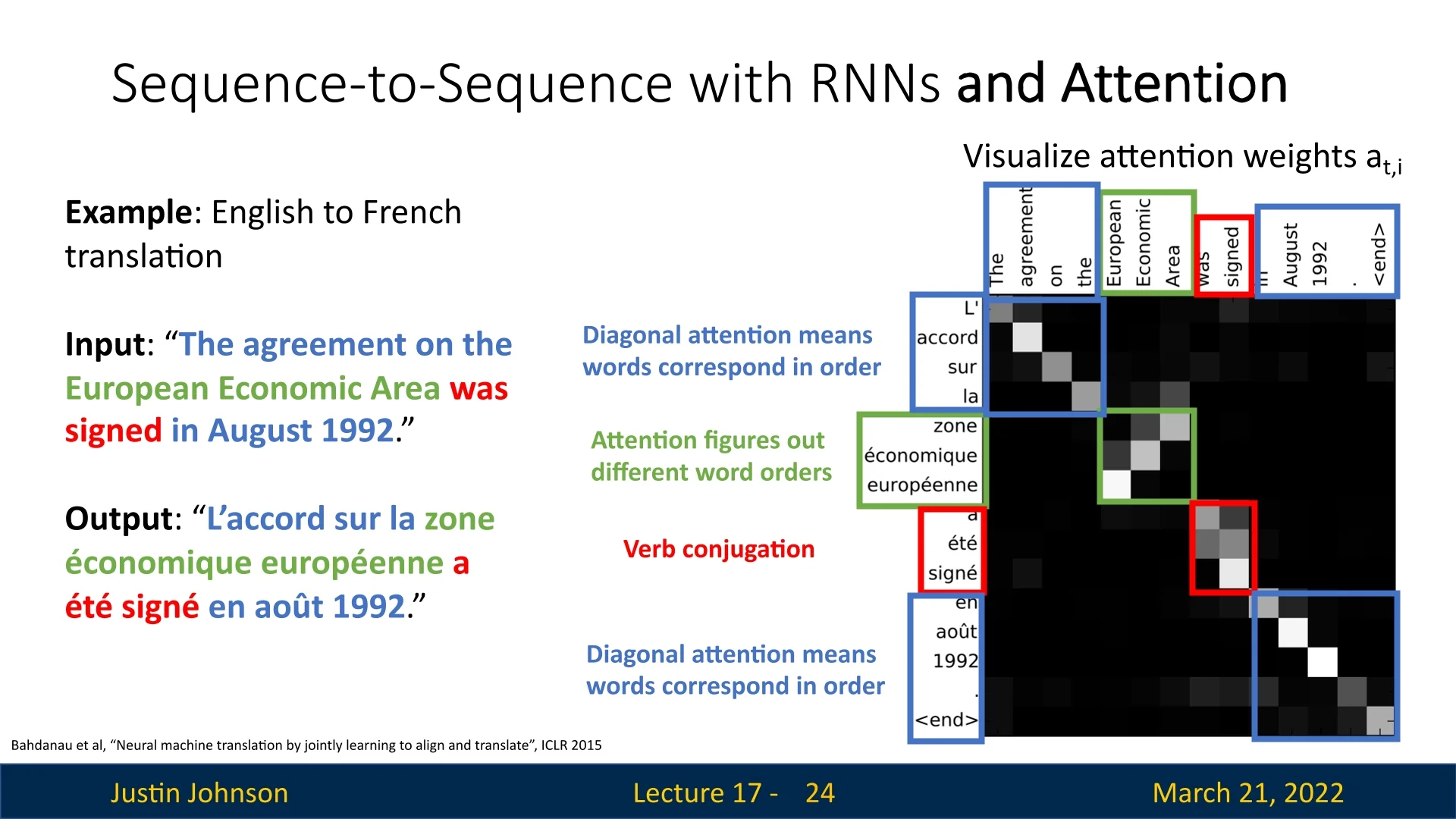

To see this in action, let us revisit our sequence-to-sequence translation example, but now translating from English to French using the attention mechanism proposed by Bahdanau et al. [22]. Consider the following English sentence:

‘‘The agreement on the European Economic Area was signed in August 1992 . <end>’’

which the model translates to French as:

‘‘L’accord sur la zone économique européenne a été signé en août 1992 . <end>’’

By constructing a matrix of size \(T' \times T\) (where \(T'\) is the number of output tokens and \(T\) is the number of input tokens), we can visualize the attention weights \(a_{i,j}\), which quantify how much attention the decoder assigns to each encoder hidden state when generating an output token. A higher weight corresponds to a stronger influence of the encoder hidden state \(h_j\) on the decoder state \(s_i\), which then produces the output token \(y_i\).

In this visualization, each cell represents an attention weight \(a_{i,j}\), where a brighter color corresponds to a higher weight (i.e., stronger attention), and a darker color corresponds to a lower weight (weaker attention). The map reveals how the model distributes its focus while generating each output word.

Understanding Attention Patterns

Observing the attention map, we notice an almost diagonal alignment. This makes sense, as words in one language typically correspond to words in the other in the same order. For example, the English word ‘‘The’’ aligns with the French ‘‘L’ ’’, and ‘‘agreement’’ aligns with ‘‘accord’’. This pattern suggests that the model has learned a reasonable alignment between source and target words.

However, not all words align perfectly in the same order. Some phrases require reordering due to syntactic differences between languages. A notable example is the phrase:

‘‘European Economic Area’’ \(\rightarrow \) ‘‘zone économique européenne’’

Here, the attention map reveals that the model adjusts its focus dynamically, attending to different words at different decoding steps. In English, adjectives precede nouns, while in French, they follow. The attention map correctly assigns a higher weight to ‘‘zone’’ when generating the French ‘‘zone’’, then shifts attention to ‘‘economic’’ and ‘‘European’’ at appropriate steps.

This behavior shows that the model is not merely copying words but has learned meaningful language structure. Importantly, we did not explicitly tell the model these word alignments—it learned them from data alone. The ability to extract these patterns purely from training data without human supervision is a major advantage of attention-based architectures.

Why Attention Interpretability Matters

The interpretability provided by attention maps allows us to:

- Understand model predictions: By visualizing attention distributions, we can verify whether the model is focusing on the right input words for each output token.

- Debug model errors: If a translation error occurs, the attention map can reveal whether it was due to misaligned attention weights.

- Gain linguistic insights: The learned alignments sometimes uncover grammatical and syntactic relationships across languages that may not be immediately obvious.

This ability to interpret how neural networks make decisions was largely missing from previous architectures, making attention a crucial development in deep learning. As we move forward, we will explore even more advanced forms of attention, such as self-attention in Transformers, which allows models to process entire sequences in parallel rather than sequentially.

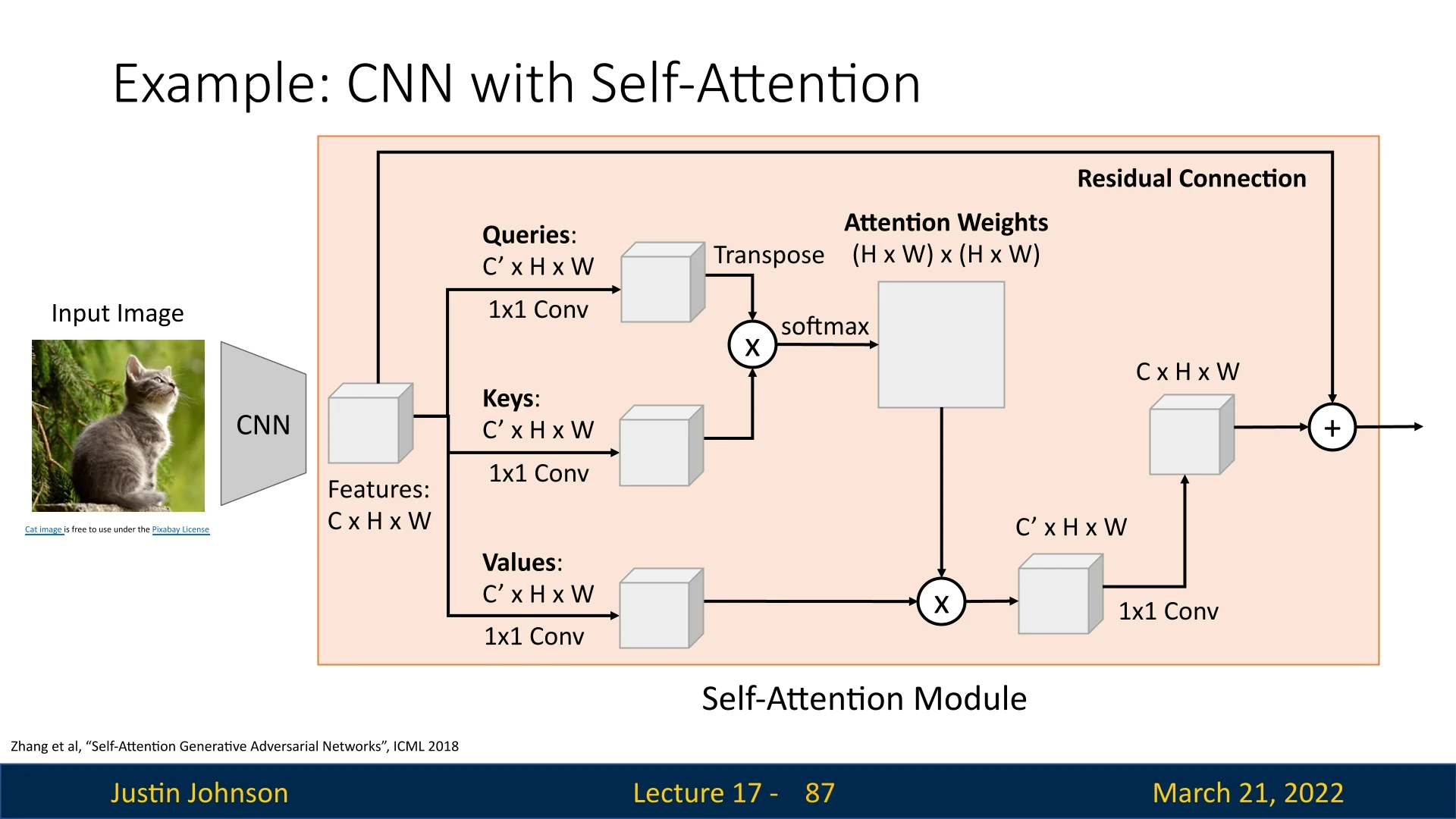

17.3 Applying Attention to Image Captioning

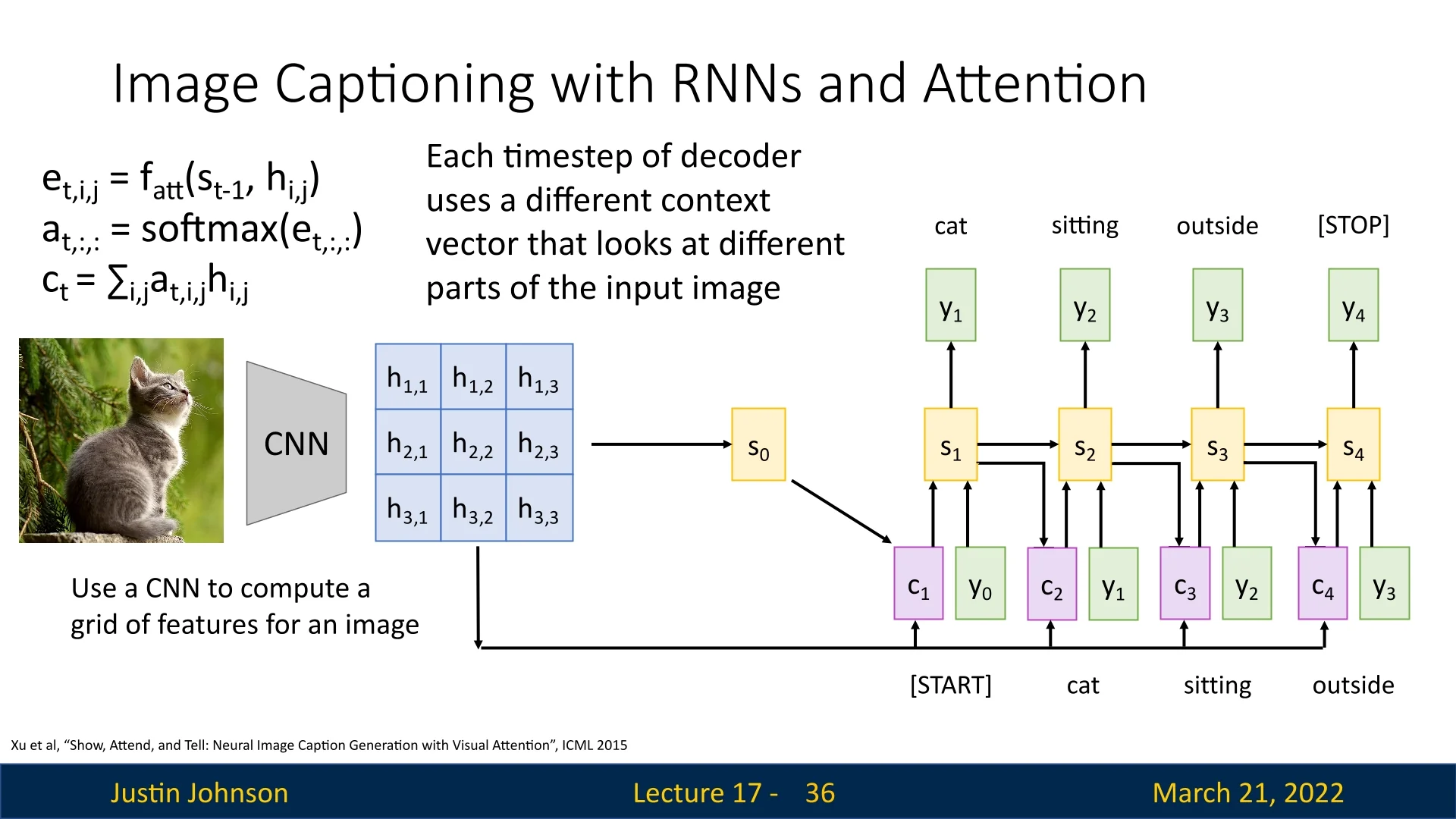

The flexibility of attention mechanisms—in particular, their ability to operate on unordered sets of vectors—allows them to extend naturally from text-based sequence modeling to computer vision tasks such as image captioning. This was demonstrated in the seminal work by Xu et al. [710], “Show, Attend and Tell: Neural Image Caption Generation with Visual Attention”. Rather than compressing an entire image into a single fixed-size feature vector, their model uses an attention-based decoder that dynamically focuses on different image regions while generating each word of the caption.

17.3.1 Feature Representation

The input image is first processed by a convolutional neural network (CNN), and we extract a convolutional feature map of shape \(C_{\mbox{out}} \times H' \times W'\). Instead of treating this as a single global vector, we flatten the spatial dimensions to obtain a set of \(L = H' W'\) feature vectors \[ A = \{ h_1, \dots , h_L \}, \quad h_i \in \mathbb {R}^{C_{\mbox{out}}}. \] Each vector \(h_i\) corresponds to a specific receptive field in the original image and serves as a spatial annotation.

These vectors play the same role as the encoder hidden states \(\{h_1, \dots , h_T\}\) in sequence-to-sequence models: they form an unordered set over which the decoder can apply attention. The goal of the attention mechanism is to construct, at each decoding step, a context vector \(c_t\) that selectively aggregates information from these spatial features.

17.3.2 Attention-Based Caption Decoder

The caption is generated one token at a time by a recurrent decoder (for example, an LSTM) equipped with additive (Bahdanau) attention over the image features.

Initialization Before generation begins, the decoder needs an initial hidden state \(s_0\) that summarizes the overall content of the image.

Rather than initializing \(s_0\) from a special token, we compute it from the spatial features using a small MLP \(g_{\mbox{init}}\): \begin {equation} s_0 = g_{\mbox{init}}\!\left (\frac {1}{L} \sum _{i=1}^{L} h_i \right ). \end {equation} This provides a global, learned summary of the image that grounds the decoding process.

Additive attention and context computation At each timestep \(t\), the decoder maintains a hidden state \(s_{t-1}\) and has access to the image features \(\{h_i\}\). It uses the same additive attention function \(f_{att}\) introduced earlier to compute alignment scores between the current state and each image region: \begin {equation} e_{t,i} = f_{att}(s_{t-1}, h_i). \end {equation} Recall that in additive (Bahdanau) attention, \(f_{att}\) is implemented as a shallow MLP that projects \(s_{t-1}\) and \(h_i\) into a shared space and scores their compatibility; a common choice is \begin {equation} e_{t,i} = v_a^{\top } \tanh (W_a s_{t-1} + U_a h_i), \end {equation} with learnable parameters \(W_a, U_a, v_a\).

These alignment scores are normalized with a softmax to obtain attention weights over all spatial locations: \begin {equation} \alpha _{t,i} = \frac {\exp (e_{t,i})}{\sum _{k=1}^{L} \exp (e_{t,k})}, \end {equation} where \(\alpha _{t,i}\) indicates how strongly the decoder attends to region \(i\) at timestep \(t\).

The context vector \(c_t\) is then computed as the corresponding weighted sum of the spatial features: \begin {equation} c_t = \sum _{i=1}^{L} \alpha _{t,i} h_i. \end {equation}

State update and word prediction Given the previous word \(y_{t-1}\), the previous hidden state \(s_{t-1}\), and the newly computed context vector \(c_t\), the decoder updates its state and predicts the next word: \begin {align} s_t &= \text {RNN}(s_{t-1}, [y_{t-1}, c_t]), \\ p(y_t \mid y_{<t}, \text {image}) &\propto \exp \big (L_o(s_t, c_t)\big ), \end {align}

where \(L_o\) is a learned output layer (typically a linear layer followed by a softmax over the vocabulary). This procedure repeats until the decoder emits the <END> token.

Example: “cat sitting outside” In Figure 17.6, the caption generated for the image is

<START> cat sitting outside <STOP>.

At \(t=1\), when predicting cat, the attention weights \(\{\alpha _{1,i}\}\) typically concentrate on the region containing the cat. At \(t=2\), for sitting, the model continues to focus on the cat but may shift towards regions that reveal posture (such as the body and the surface it sits on). By \(t=3\), when producing outside, attention can expand toward the background, emphasizing regions that signal the outdoor environment (trees, grass, or sky). The caption thus emerges from a sequence of content-dependent glimpses over the image, rather than from a single static global representation.

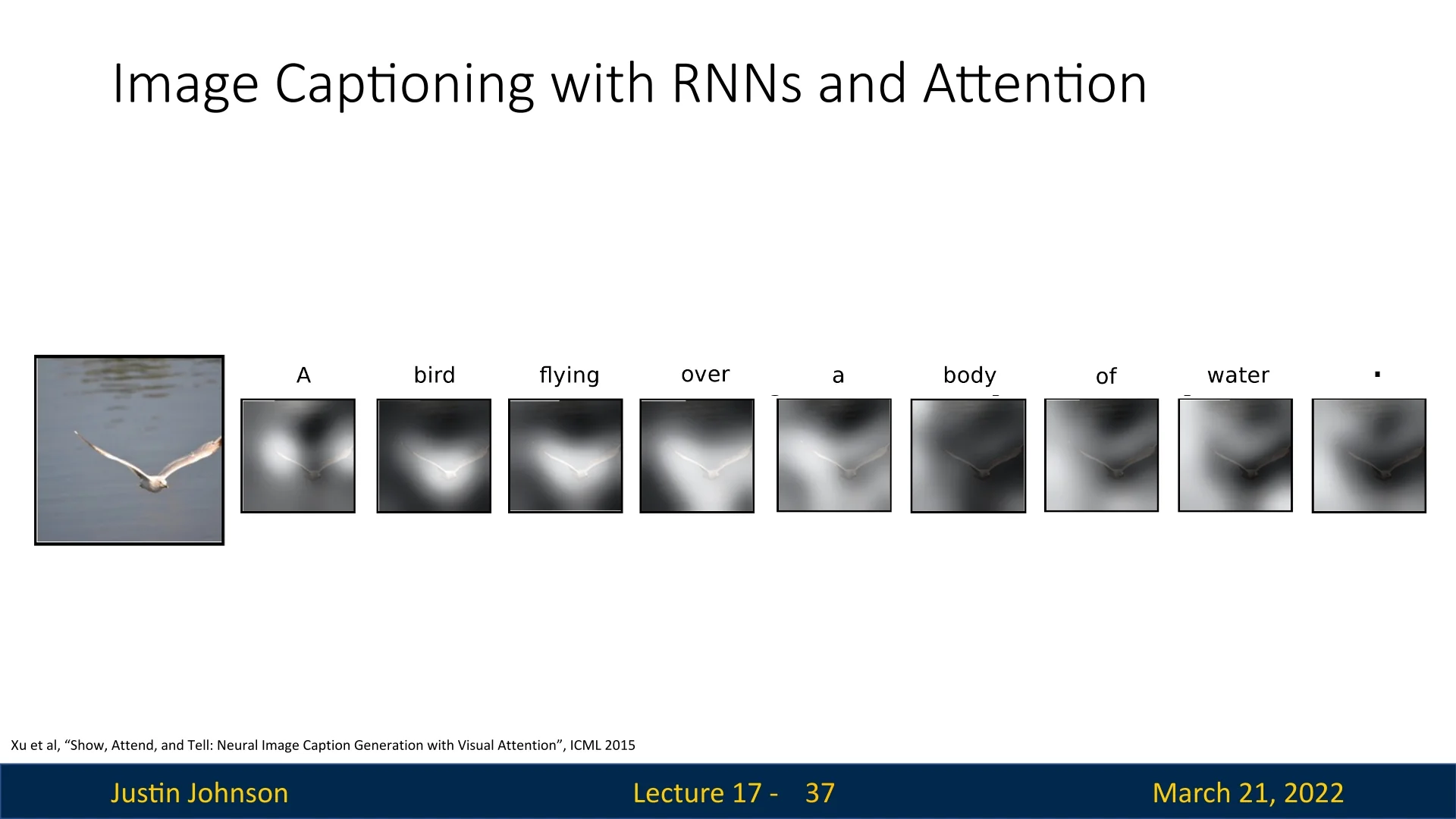

17.3.3 Visualizing Attention in Image Captioning

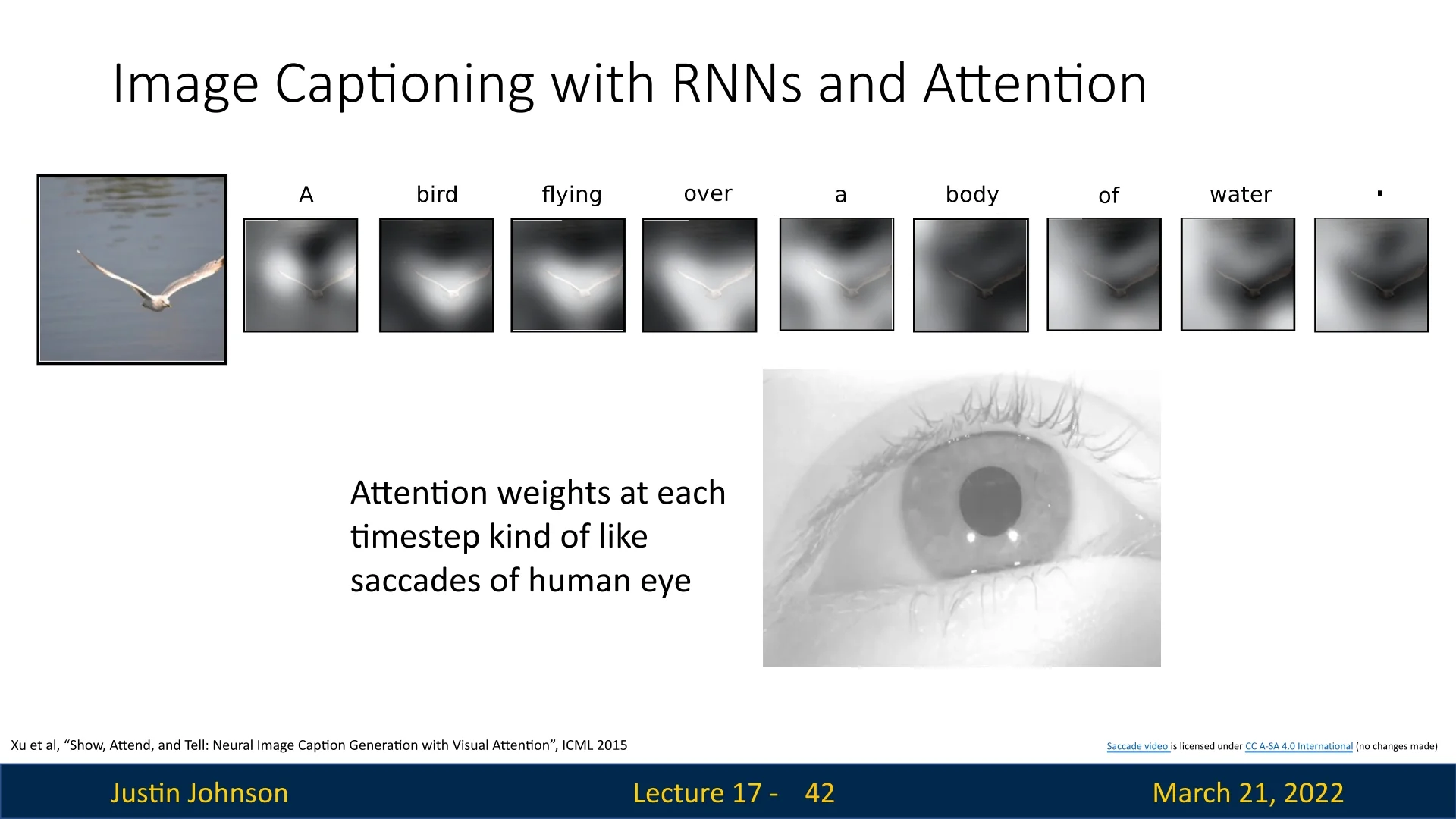

As in the translation examples earlier in this chapter, attention weights in image captioning can be visualized as spatial attention maps, revealing where the model “looks” when generating each word. Figure 17.7 illustrates this interpretability for the caption

‘‘A bird flying over a body of water .’’

generated by an attention-based image captioning model.

Reading the sequence of maps from left to right, we can see how the model’s focus shifts over the image as the caption unfolds:

- For function words such as A or of, the attention is relatively diffuse, reflecting that these tokens are driven more by language modeling than by specific visual evidence.

- When predicting the noun bird, the attention weights concentrate on the pixels covering the bird, grounding the object word in the correct region of the image.

- For the verb flying, the model continues to focus on the bird—particularly around its body and wings—since the action is attributed to that object.

- When generating water, the attention shifts away from the bird and spreads over the lower part of the image corresponding to the water surface, capturing the background context needed to complete the phrase ‘‘a body of water’’.

These maps provide a direct, spatially grounded view of how the model aligns words with image regions. They are produced by the soft attention mechanism described above, in which the model maintains a differentiable distribution \(\{\alpha _{t,i}\}\) over all spatial locations at each timestep. Xu et al. [710] also explore a hard attention variant that samples a single region per timestep; while potentially more efficient, this discrete sampling is not differentiable and therefore requires reinforcement-learning techniques (such as REINFORCE) for training.

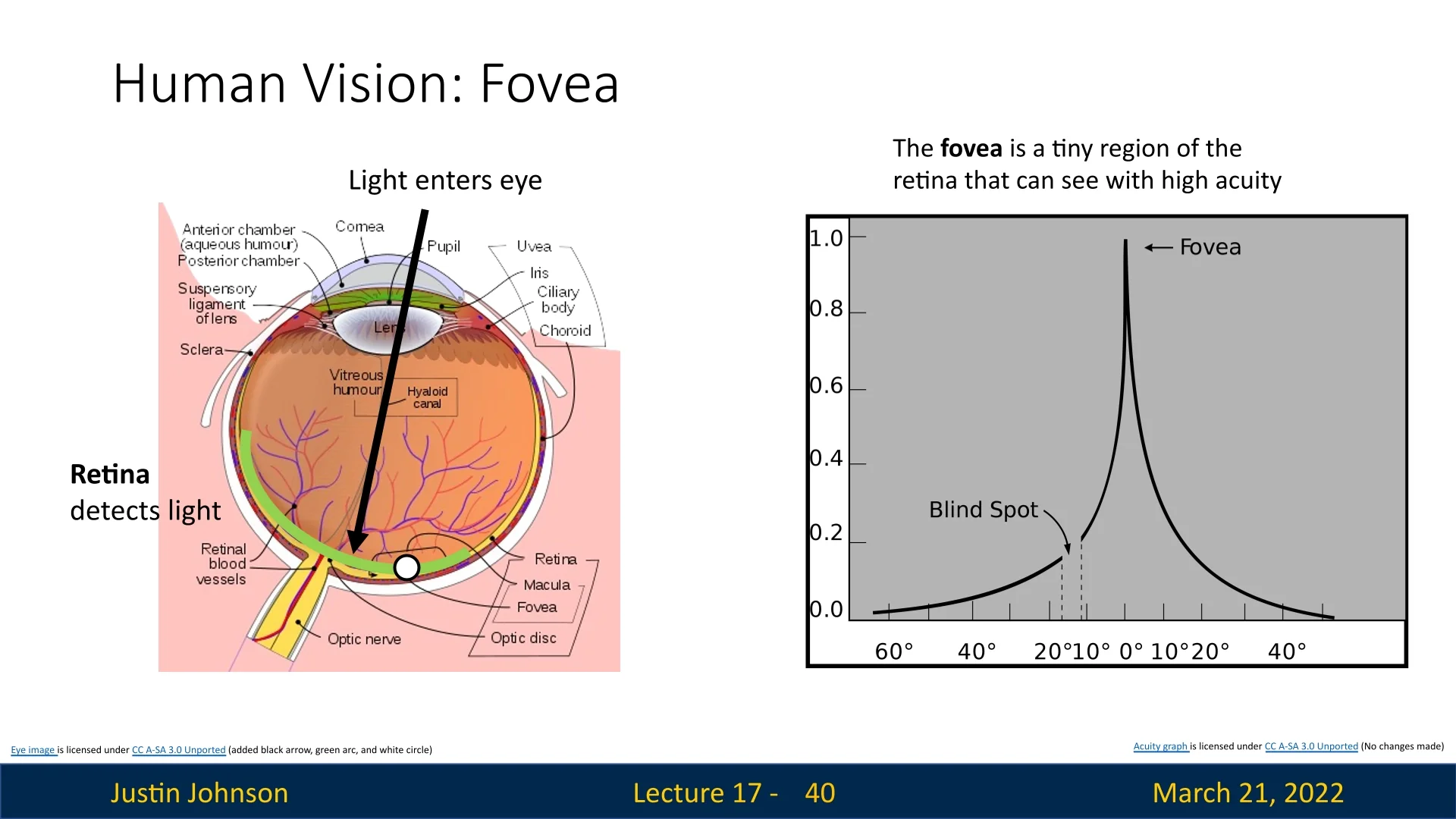

17.3.4 Biological Inspiration: Saccades in Human Vision

An interesting question we can ask ourselves is: How similar is this mechanism to how humans perceive the world? As it turns out, the resemblance is quite significant.

The retina, the light-sensitive layer inside our eye, is responsible for converting incoming light into neural signals that our brain processes. However, not all parts of the retina contribute equally to our vision. The central region, known as the fovea, is a specialized area that provides high-acuity vision but covers only a small portion of our total visual field.

As seen in Figure 17.8, only a small region of the retina provides clear, detailed vision. The rest of our visual field consists of lower-resolution perception. To compensate for this, human eyes perform rapid, unconscious movements called saccades, dynamically shifting the fovea to different areas of interest in a fraction of a second.

Attention-based image captioning mimics this biological mechanism. Just as our eyes adjust their focus to capture different parts of a scene, attention in RNN-based captioning models selectively attends to different image regions at each timestep. The model does not process the entire image at once; instead, it dynamically ”looks” at relevant portions as it generates each word in the caption.

In Figure 17.9, we can see how attention weights at each timestep act like saccades in the human eye. Rather than maintaining a static focus, the model dynamically shifts its attention across different image regions, much like our eyes scan a scene.

Key parallels between saccades and attention-based image captioning:

- Selective focus: Human vision relies on the fovea to process high-resolution details, while peripheral vision provides contextual information. Similarly, attention assigns higher weights to relevant image regions while keeping a broader, low-weighted awareness of the rest.

- Dynamic adjustment: Just as saccades allow humans to explore different parts of a scene, attention-based models shift focus across image regions as new words are generated.

- Efficient processing: The brain does not process an entire scene at once; instead, it strategically selects important details. Attention mechanisms follow the same principle by prioritizing certain regions rather than treating all pixels equally.

This biological inspiration helps explain why attention mechanisms are so effective in vision tasks—they leverage a principle that human perception has refined over millions of years. The next section will explore how this interpretability can be visualized through attention maps.

17.3.5 Beyond Captioning: Generalizing Attention Mechanisms

The power of attention extends beyond image captioning. Inspired by ”Show, Attend, and Tell,” numerous works have applied similar mechanisms to diverse tasks:

- Visual Question Answering (VQA) [709]: Attend to image regions relevant to answering a given question.

- Speech Recognition [75]: Attend to audio frames while generating text transcriptions.

- Robot Navigation [421]: Attend to textual instructions to guide robotic movement.

These applications demonstrate that attention is not merely a tool for sequential processing—it is a powerful and general framework for learning relationships between different modalities. This leads naturally to the development of Attention Layers, which we will explore next.

17.4 Attention Layer

In computer science, when a method proves broadly useful, the natural progression is to abstract and generalize it into a reusable module. This principle applies to attention. What began as a task-specific technique for encoder–decoder RNNs was distilled into a general-purpose Attention Layer that can be integrated across architectures and domains.

To move from the original “RNN + Attention” setting to a modular view, we replace task-specific names with generic roles:

- Query vector \(q\): the vector that asks “what is relevant now?” In encoder–decoder RNNs, this is typically the decoder state \(s_{t-1}\), with shape \(D_Q\).

- Input vectors \(X = \{X_i\}_{i=1}^{N_X}\): the set of vectors we may attend to. These could be encoder states \(h_i\) in translation or spatial CNN features \(h_{i,j}\) in vision, with shape \(N_X \times D_X\).

- Similarity function \(f_{\mbox{att}}\): a scoring rule that measures compatibility between \(q\) and each \(X_i\). Early attention mechanisms used a small MLP (additive/Bahdanau attention), while modern architectures often use dot-product-based scoring.

Regardless of the domain, the attention computation follows the same three-step template:

- 1.

- Compute similarities: \begin {equation} e_i = f_{\mbox{att}}(q, X_i), \quad e \in \mathbb {R}^{N_X}. \end {equation}

- 2.

- Normalize weights: \begin {equation} a_i = \frac {\exp (e_i)}{\sum _j \exp (e_j)}. \end {equation}

- 3.

- Aggregate inputs: \begin {equation} y = \sum _{i} a_i X_i. \end {equation}

The attention layer formalizes this pattern as a standard building block that takes a query and a set of input vectors and returns an attended output. A natural next question is which scoring function \(f_{\mbox{att}}\) provides the best balance of expressivity, efficiency, and optimization stability.

17.4.1 Scaled Dot-Product Attention

In the earlier RNN-based formulations, the similarity function \(f_{\mbox{att}}\) was implemented as a small MLP: \begin {equation} e_i = f_{\mbox{att}}(q, x_i) = v_a^{\top }\tanh (W_a q + U_a x_i), \end {equation} which is known as additive (Bahdanau) attention. This learned scoring network is flexible, but it introduces additional parameters and per-pair nonlinear computation.

Modern attention architectures, most notably the Transformer, simplify this step. They replace the MLP with a direct geometric similarity and add a principled normalization: \begin {equation} e_i = \frac {\mathbf {q} \cdot \mathbf {x}_i}{\sqrt {D_Q}}, \qquad \mathbf {q}\in \mathbb {R}^{D_Q}, \quad \mathbf {x}_i\in \mathbb {R}^{D_Q}. \end {equation} Thus, \begin {equation} f_{\mbox{att}}(q, x_i) = \frac {q^{\top }x_i}{\sqrt {D_Q}}, \end {equation} meaning that the learned MLP used in additive attention is replaced by a simple dot product. The dot product measures how well aligned the two vectors are in the embedding space, and the scaling term controls the magnitude of the resulting logits before softmax.

Why Scale by \(\sqrt {D_Q}\)? At first glance, dividing by \(\sqrt {D_Q}\) may look like a technical detail. In practice, it is crucial for stable training.

1. Why does the dot-product variance grow with dimension? Write the unscaled dot product as a sum of coordinate-wise products: \begin {equation} \mathbf {q}\cdot \mathbf {x}_i \;=\; \sum _{d=1}^{D_Q} q_d\,x_{i,d}. \end {equation} Assume, as a simplifying heuristic, that \(q_d\) and \(x_{i,d}\) are independent, zero-mean, and identically distributed with variance \(\sigma ^2\). Then each product term has \begin {equation} \mathbb {E}[q_d x_{i,d}] = 0, \qquad \mbox{Var}(q_d x_{i,d}) = \mathbb {E}[q_d^2 x_{i,d}^2] = \mathbb {E}[q_d^2]\mathbb {E}[x_{i,d}^2] = \sigma ^4. \end {equation} If we further assume these product terms are approximately independent across \(d\), then the variance of the sum grows linearly: \begin {equation} \mbox{Var}[\,\mathbf {q}\cdot \mathbf {x}_i\,] \;\approx \; D_Q \sigma ^4. \end {equation} Thus, the standard deviation of the raw dot product scales like \(\sqrt {D_Q}\). In high-dimensional models (e.g., \(D_Q = 512\) or \(1024\)), this naturally produces larger-magnitude logits.

2. Why are large magnitudes a problem for softmax? After computing scores, we normalize them with softmax: \begin {equation} a_i \;=\; \frac {\exp (e_i)}{\sum _{j=1}^{N_X} \exp (e_j)}. \end {equation} When one score is much larger than the others, \(\exp (e_i)\) overwhelms the denominator. The resulting attention distribution becomes nearly one-hot.

A small numerical example makes this concrete. With modest logits, \[ \mbox{softmax}([2,1]) \approx [0.73,\,0.27], \] the distribution is “soft,” so gradients can meaningfully adjust both scores. But if the logits are scaled up, \[ \mbox{softmax}([20,10]) \approx [0.99995,\,0.00005], \] softmax effectively behaves like an \(\texttt{argmax}\). In this saturated regime, the gradient of softmax becomes extremely small. Consequently, the model stops learning because the error signal cannot backpropagate through these saturated regions.

3. How does scaling fix this? Dividing by \(\sqrt {D_Q}\) counteracts the growth in logit magnitude: \begin {equation} e_i \;=\; \frac {\mathbf {q}\cdot \mathbf {x}_i}{\sqrt {D_Q}} \quad \Longrightarrow \quad \mbox{Var}[\,e_i\,] \;\approx \; \sigma ^4, \end {equation} so the typical scale of the scores remains roughly stable as \(D_Q\) increases. This keeps softmax in a regime where the attention distribution is neither too sharp nor too flat, preserving healthy gradients and improving optimization.

Scaling and softmax temperature Softmax is invariant to adding a constant to all logits, but it is not invariant to scaling. We can interpret the normalization by \(\sqrt {D_Q}\) as a principled temperature control: \begin {equation} a_i \;=\; \frac {\exp (e_i / T)}{\sum _{j} \exp (e_j / T)}. \end {equation} If the effective temperature is too low, attention becomes overly peaked and brittle. If it is too high, attention becomes nearly uniform and loses discriminative power. The \(\sqrt {D_Q}\) scaling is a robust default that keeps attention well-calibrated across model widths.

Why dot product? Replacing additive attention with scaled dot-product attention offers several advantages:

- Compute efficiency: Dot products can be implemented as batched matrix multiplications, which are highly optimized on modern accelerators [644].

- Parameter efficiency: The scoring step introduces no additional MLP parameters, unlike additive attention [22].

- Competitive accuracy: Despite its simplicity, scaled dot-product attention matches or surpasses MLP-based scoring in large-scale sequence modeling benchmarks [22, 644].

In summary, scaled dot-product attention replaces the learned MLP similarity function of additive attention with a simpler inner product, while using \(\sqrt {D_Q}\) scaling to maintain stable, trainable softmax behavior. This combination of efficiency and stability is a key reason it became the default scoring rule in modern attention layers.

In the next part, we will extend this single-query formulation to multiple query vectors computed in parallel, enabling a compact matrix form of attention that directly sets up self-attention and Transformer blocks.

From a single query to many queries So far, we have described attention for a single query vector \(q\). In practice, we almost always need to process multiple queries in parallel. In the next part, we will generalize this formulation to a set of queries \( Q \in \mathbb {R}^{N_Q \times D_Q} \) attending over an input set \( X \in \mathbb {R}^{N_X \times D_Q}, \) and we will express the entire computation as efficient matrix operations: \[ \mbox{Attention}(Q, X) \;=\; \mbox{softmax}\!\left (\frac {QX^{\top }}{\sqrt {D_Q}}\right )X. \] This multi-query view is the direct bridge to self-attention and the Transformer blocks we will develop next.

17.4.2 Extending to Multiple Query Vectors

Given a query matrix \( Q \in \mathbb {R}^{N_Q \times D_Q} \), we compute attention scores in parallel:

\begin {equation} E = \frac {Q X^T}{\sqrt {D_Q}}, \quad E \in \mathbb {R}^{N_Q \times N_X}. \end {equation}

We then apply the softmax function along the input dimension to normalize attention scores:

\begin {equation} A = \mbox{softmax}(E), \quad A \in \mathbb {R}^{N_Q \times N_X}. \end {equation}

The final attention-weighted output is obtained by computing: \begin {equation} Y = A X, \quad Y \in \mathbb {R}^{N_Q \times D_X}. \end {equation}

- Parallel Computation: Multiple queries benefit from the efficient processing through matrix-matrix multiplications of scaled-dot product self-attention. Hence, we can use them to increase our per-layer representational capacity.

- Richer Representations: Allows capturing diverse relationships between inputs and queries.

- Token-Wise Attention: Essential for self-attention layers (covered later), where each token in a sequence attends to others independently.

17.4.3 Introducing Key and Value Vectors

In early formulations, the input vectors \( X \) were used both to compute attention scores and to generate outputs. However, these two functions serve distinct purposes:

- Keys (\( K \)) determine how queries interact with different input elements.

- Values (\( V \)) contain the actual information retrieved by attention.

Instead of using \( X \) directly, we introduce learnable weight matrices (transformations):

\begin {equation} K = X W_K, \quad K \in \mathbb {R}^{N_X \times D_Q}, \quad V = X W_V, \quad V \in \mathbb {R}^{N_X \times D_V}. \end {equation}

The attention computation is then reformulated as:

\begin {equation} E = \frac {Q K^T}{\sqrt {D_Q}}, \quad A = \mbox{softmax}(E), \quad Y = A V. \end {equation}

- Decouples Retrieval from Output Generation: Keys optimize for similarity matching, while values store useful information.

- Increased Expressiveness: Independent key-value transformations improve model flexibility.

- Efficient Memory Access: Enables retrieval-like behavior, where queries search for relevant information rather than being constrained by input representations.

17.4.4 An Analogy: Search Engines

Attention mechanisms can be understood through the analogy of a search engine, which retrieves relevant information based on a user query. In this analogy:

- Query: The search phrase entered by the user.

- Keys: The indexed metadata linking queries to stored information.

- Values: The actual content retrieved in response to the query.

Just as a search engine compares a query to indexed keys but returns values, attention mechanisms compute query-key similarities to determine which values contribute to the final output.

Empire State Building Example

Consider the query "How tall is the Empire State Building?":

- 1.

- The search engine identifies relevant terms from the query.

- 2.

- It retrieves indexed keys, such as "Empire State Building" and "building height".

- 3.

- It selects pages containing the most relevant values, such as "The Empire State Building is 1,454 feet tall, including its antenna.".

Similarly, attention mechanisms:

- Use query vectors to determine information needs.

- Compare them to key vectors to identify relevant input.

- Retrieve value vectors to generate the final output.

Why This Separation Matters

Separating queries, keys, and values provides:

- Efficiency: Enables fast retrieval without processing all inputs sequentially.

- Flexibility: Allows different queries to focus on various input aspects.

- Generalization: Adapts across tasks without modifying the entire model.

17.4.5 Bridging to Visualization and Further Understanding

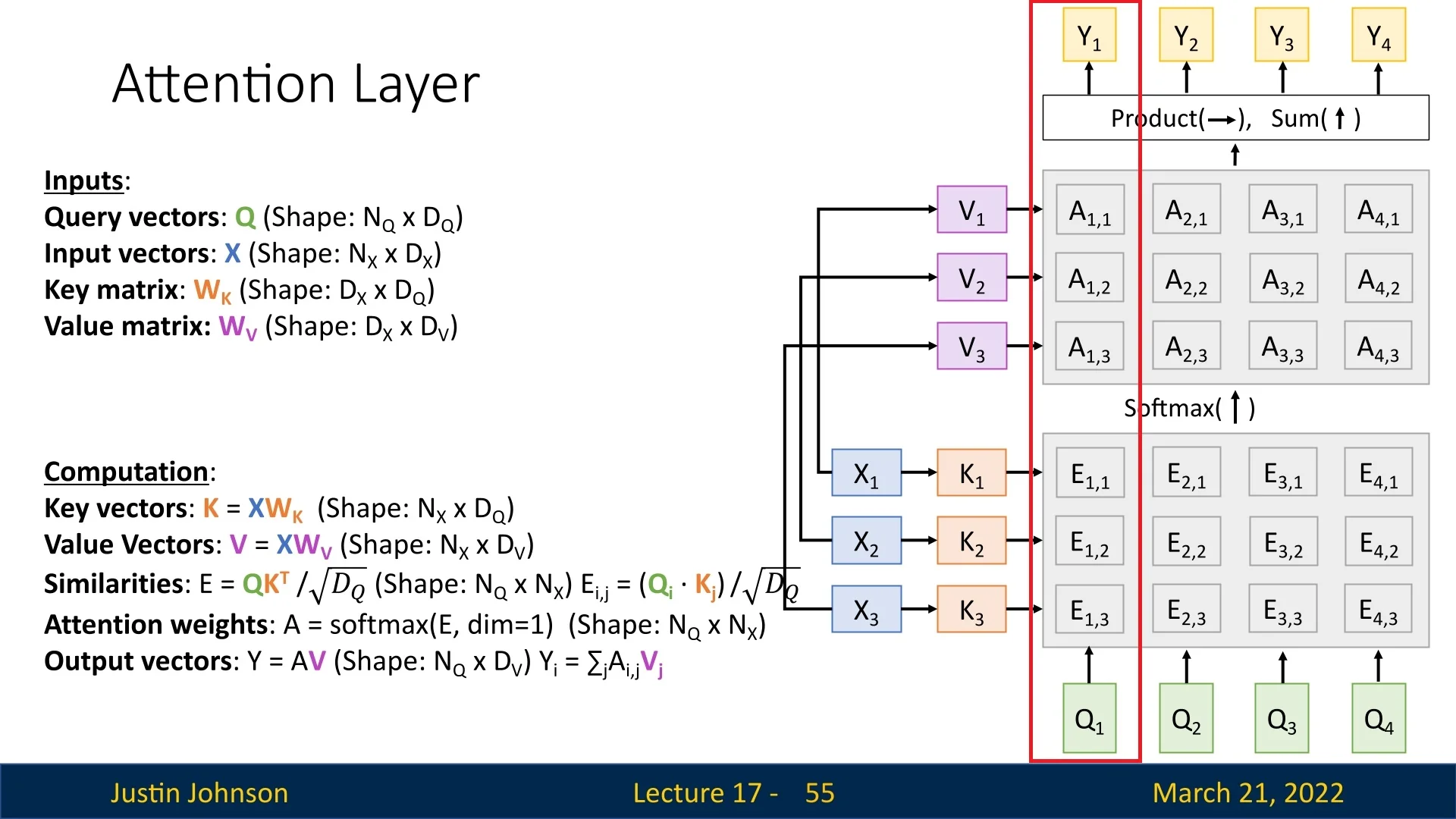

Visualizing attention enhances understanding. Below, we outline the steps of the Attention Layer and explain how its structure facilitates interpretation.

Overview of the Attention Layer Steps

The attention mechanism follows a structured sequence of computations:

- 1.

- Inputs to the Layer: The layer receives a set of query vectors \( Q \) and a set of input vectors \( X \). In our example: \begin {equation} Q = \{Q_1, Q_2, Q_3, Q_4\}, \quad X = \{X_1, X_2, X_3\}. \end {equation}

- 2.

- Computing Key Vectors: Each input vector \( X_i \) is transformed into a key vector \( K_i \) using the learnable key matrix \( W_K \): \begin {equation} K = X W_K, \quad K \in \mathbb {R}^{N_X \times D_Q}. \end {equation} In our example, we obtain: \begin {equation} K = \{K_1, K_2, K_3\}, \quad \mbox{where } K_i = X_i W_K. \end {equation}

- 3.

- Computing Similarities: Each query vector is compared to all key vectors using the scaled dot product: \begin {equation} E = \frac {QK^T}{\sqrt {D_Q}}, \quad E \in \mathbb {R}^{N_Q \times N_X}. \end {equation} The resulting matrix \( E \) contains unnormalized similarity scores, where each row corresponds to a query vector and each column corresponds to a key vector: \begin {equation} E = \begin {bmatrix} E_{1,1} & E_{1,2} & E_{1,3} \\ E_{2,1} & E_{2,2} & E_{2,3} \\ E_{3,1} & E_{3,2} & E_{3,3} \\ E_{4,1} & E_{4,2} & E_{4,3} \end {bmatrix}. \end {equation} Here, \( E_{i,j} \) represents the similarity between query \( Q_i \) and key \( K_j \).

- 4.

- Computing Attention Weights: Since \( E \) is unnormalized, we apply softmax over each row to produce attention probabilities: \begin {equation} A = \mbox{softmax}(E, \mbox{dim} = 1), \quad A \in \mathbb {R}^{N_Q \times N_X}. \end {equation} This ensures that each row of \( A \) forms a probability distribution over the input keys. Using Justin’s visualization, we represent \( E \) and \( A \) in their transposed form: \begin {equation} E^T = \begin {bmatrix} E_{1,1} & E_{2,1} & E_{3,1} & E_{4,1} \\ E_{1,2} & E_{2,2} & E_{3,2} & E_{4,2} \\ E_{1,3} & E_{2,3} & E_{3,3} & E_{4,3} \end {bmatrix}, \quad A^T = \begin {bmatrix} A_{1,1} & A_{2,1} & A_{3,1} & A_{4,1} \\ A_{1,2} & A_{2,2} & A_{3,2} & A_{4,2} \\ A_{1,3} & A_{2,3} & A_{3,3} & A_{4,3} \end {bmatrix}. \end {equation} In this notation, each column corresponds to a single query \( Q_i \). This makes visualization easier because the column of \( A^T \) directly represents the probability distribution over the keys that contribute to computing the output vector \( Y_i \).

- 5.

- Computing Value Vectors: We transform the input vectors into value vectors using a learnable value matrix \( W_V \): \begin {equation} V = X W_V, \quad V \in \mathbb {R}^{N_X \times D_V}. \end {equation}

- 6.

- Computing the Final Output: The final output is obtained by computing a weighted sum of the value vectors using the attention weights: \begin {equation} Y = A V, \quad Y \in \mathbb {R}^{N_Q \times D_V}. \end {equation} Using Justin’s visualization approach, the final output for each query is: \begin {equation} Y_i = \sum _{j} A_{j,i} V_j. \end {equation} Since each column in \( A^T \) corresponds to a query vector \( Q_i \), it aligns visually with the computation of \( Y_i \). The values in the column determine how each value vector \( V_j \) contributes to forming \( Y_i \).

In Figure 17.10, the red-boxed column highlights the computations associated with the query vector \(\textcolor [rgb]{0.471,0.694,0.318}{Q_1}\). The process follows these steps:

- 1.

- Generating Key and Value Vectors: The input vectors \(\textcolor [rgb]{0.267,0.447,0.769}{X} = \{\textcolor [rgb]{0.267,0.447,0.769}{X_1}, \textcolor [rgb]{0.267,0.447,0.769}{X_2}, \textcolor [rgb]{0.267,0.447,0.769}{X_3}\}\) are transformed into key and value vectors using learnable projection matrices: \begin {equation} \textcolor [rgb]{0.929,0.502,0.212}{K} = \textcolor [rgb]{0.267,0.447,0.769}{X} \textcolor [rgb]{0.929,0.502,0.212}{W_K}, \quad \textcolor [rgb]{0.769,0.369,0.800}{V} = \textcolor [rgb]{0.267,0.447,0.769}{X} \textcolor [rgb]{0.769,0.369,0.800}{W_V}. \end {equation} This results in \(\textcolor [rgb]{0.929,0.502,0.212}{K} = \{\textcolor [rgb]{0.929,0.502,0.212}{K_1}, \textcolor [rgb]{0.929,0.502,0.212}{K_2}, \textcolor [rgb]{0.929,0.502,0.212}{K_3}\}\) and \(\textcolor [rgb]{0.769,0.369,0.800}{V} = \{\textcolor [rgb]{0.769,0.369,0.800}{V_1}, \textcolor [rgb]{0.769,0.369,0.800}{V_2}, \textcolor [rgb]{0.769,0.369,0.800}{V_3}\}\).

- 2.

- Computing Similarity Scores: The query vector \(\textcolor [rgb]{0.471,0.694,0.318}{Q_1}\) is compared against all key vectors \(\textcolor [rgb]{0.929,0.502,0.212}{K}\) using the scaled dot product, yielding the corresponding column of \(\textcolor [rgb]{0.236,0.236,0.236}{E^T}\), containing unnormalized alignment scores: \begin {equation} [\textcolor [rgb]{0.236,0.236,0.236}{E_{1,1}}, \textcolor [rgb]{0.236,0.236,0.236}{E_{1,2}}, \textcolor [rgb]{0.236,0.236,0.236}{E_{1,3}}]^T. \end {equation} Each \(\textcolor [rgb]{0.236,0.236,0.236}{E_{1,j}}\) represents the similarity between \(\textcolor [rgb]{0.471,0.694,0.318}{Q_1}\) and key \(\textcolor [rgb]{0.929,0.502,0.212}{K_j}\).

- 3.

- Normalizing Attention Weights: Applying the softmax function converts these scores into a probability distribution over the keys: \begin {equation} [\textcolor [rgb]{0.236,0.236,0.236}{A_{1,1}}, \textcolor [rgb]{0.236,0.236,0.236}{A_{1,2}}, \textcolor [rgb]{0.236,0.236,0.236}{A_{1,3}}]^T. \end {equation}

- 4.

- Computing the Output Vector: The final output \(\textcolor [rgb]{1.0,0.816,0.267}{Y_1}\) is obtained as a weighted sum of the value vectors \(\textcolor [rgb]{0.769,0.369,0.800}{V}\) using the attention weights: \begin {equation} \textcolor [rgb]{1.0,0.816,0.267}{Y_1} = \textcolor [rgb]{0.236,0.236,0.236}{A_{1,1}} \textcolor [rgb]{0.769,0.369,0.800}{V_1} + \textcolor [rgb]{0.236,0.236,0.236}{A_{1,2}} \textcolor [rgb]{0.769,0.369,0.800}{V_2} + \textcolor [rgb]{0.236,0.236,0.236}{A_{1,3}} \textcolor [rgb]{0.769,0.369,0.800}{V_3}, \quad \textcolor [rgb]{1.0,0.816,0.267}{Y_1} \in \mathbb {R}^{D_V}. \end {equation}

This structured visualization clarifies the relationship between \(\textcolor [rgb]{0.471,0.694,0.318}{Q}\), \(\textcolor [rgb]{0.929,0.502,0.212}{K}\), and \(\textcolor [rgb]{0.769,0.369,0.800}{V}\), reinforcing how attention dynamically selects relevant information. The same process is applied to each query vector and set of input vectors to produce the rest of the outputs: \(\textcolor [rgb]{1.0,0.816,0.267}{Y} = \{\textcolor [rgb]{1.0,0.816,0.267}{Y_1}, ..., \textcolor [rgb]{1.0,0.816,0.267}{Y_{N_Q}}\}.\)

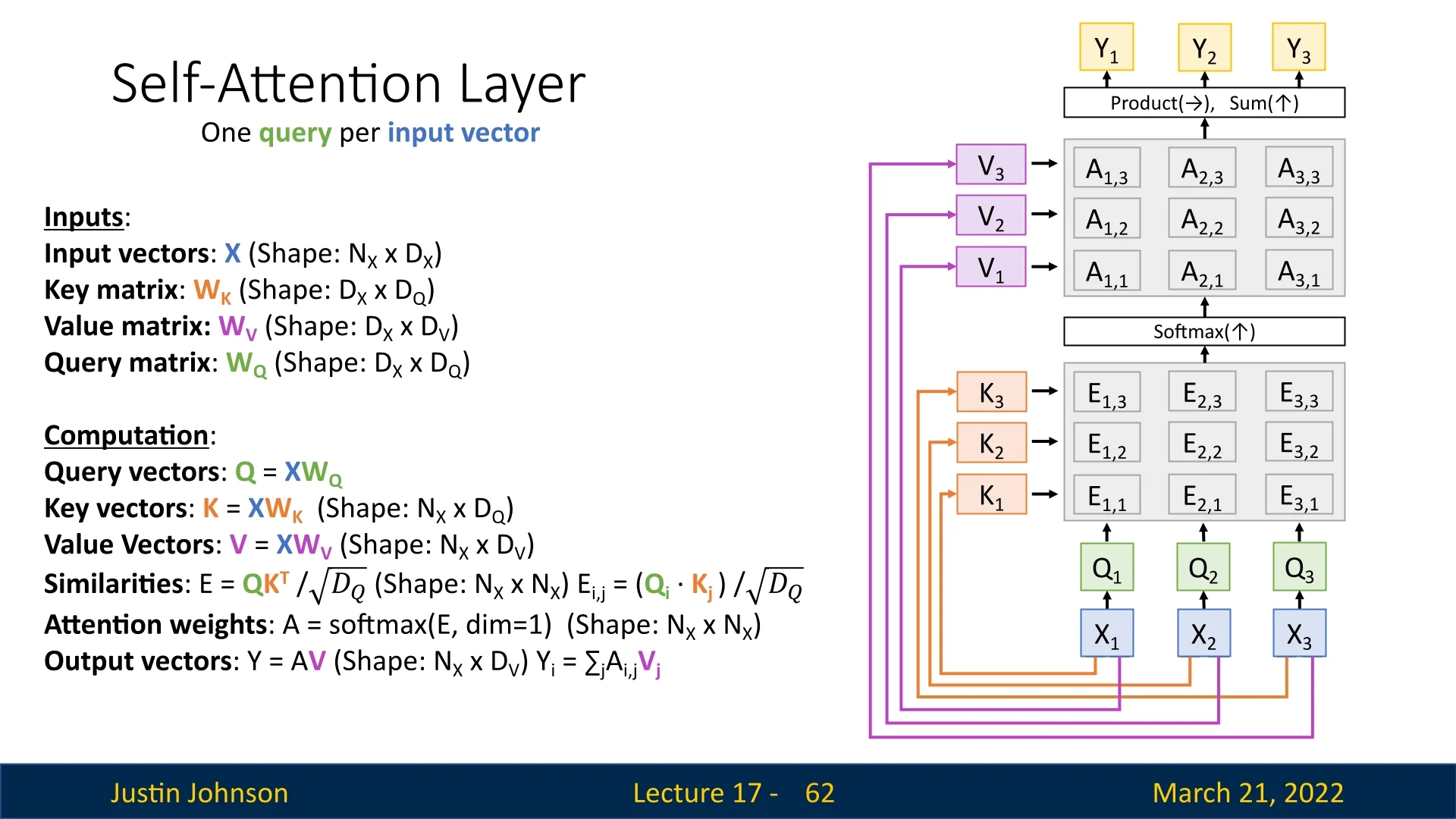

17.4.6 Towards Self-Attention

The Attention Layer provides a flexible mechanism to focus on the most relevant information in a given input. However, in previous sections, the queries \(\textcolor [rgb]{0.471,0.694,0.318}{Q}\) and inputs \(\textcolor [rgb]{0.267,0.447,0.769}{X}\) originated from different sources.

A particularly powerful case emerges when we apply attention within the same sequence, allowing each element to attend to all others, including itself. This special configuration is known as Self-Attention, where queries, keys, and values are all derived from the same input sequence:

\[ \textcolor [rgb]{0.471,0.694,0.318}{Q} = \textcolor [rgb]{0.267,0.447,0.769}{X} \textcolor [rgb]{0.471,0.694,0.318}{W_Q}, \quad \textcolor [rgb]{0.929,0.502,0.212}{K} = \textcolor [rgb]{0.267,0.447,0.769}{X} \textcolor [rgb]{0.929,0.502,0.212}{W_K}, \quad \textcolor [rgb]{0.769,0.369,0.800}{V} = \textcolor [rgb]{0.267,0.447,0.769}{X} \textcolor [rgb]{0.769,0.369,0.800}{W_V}. \]

This transformation enables each element in the sequence to selectively aggregate information from all others, facilitating a global receptive field. Unlike recurrence-based models, self-attention allows relationships between distant elements to be captured efficiently while supporting highly parallelized computation.

The ability of self-attention to process entire sequences in parallel has made it foundational to modern architectures such as the Transformer [644]. In the next section, we formally define self-attention mathematically, detailing its computation and role in deep learning architectures.

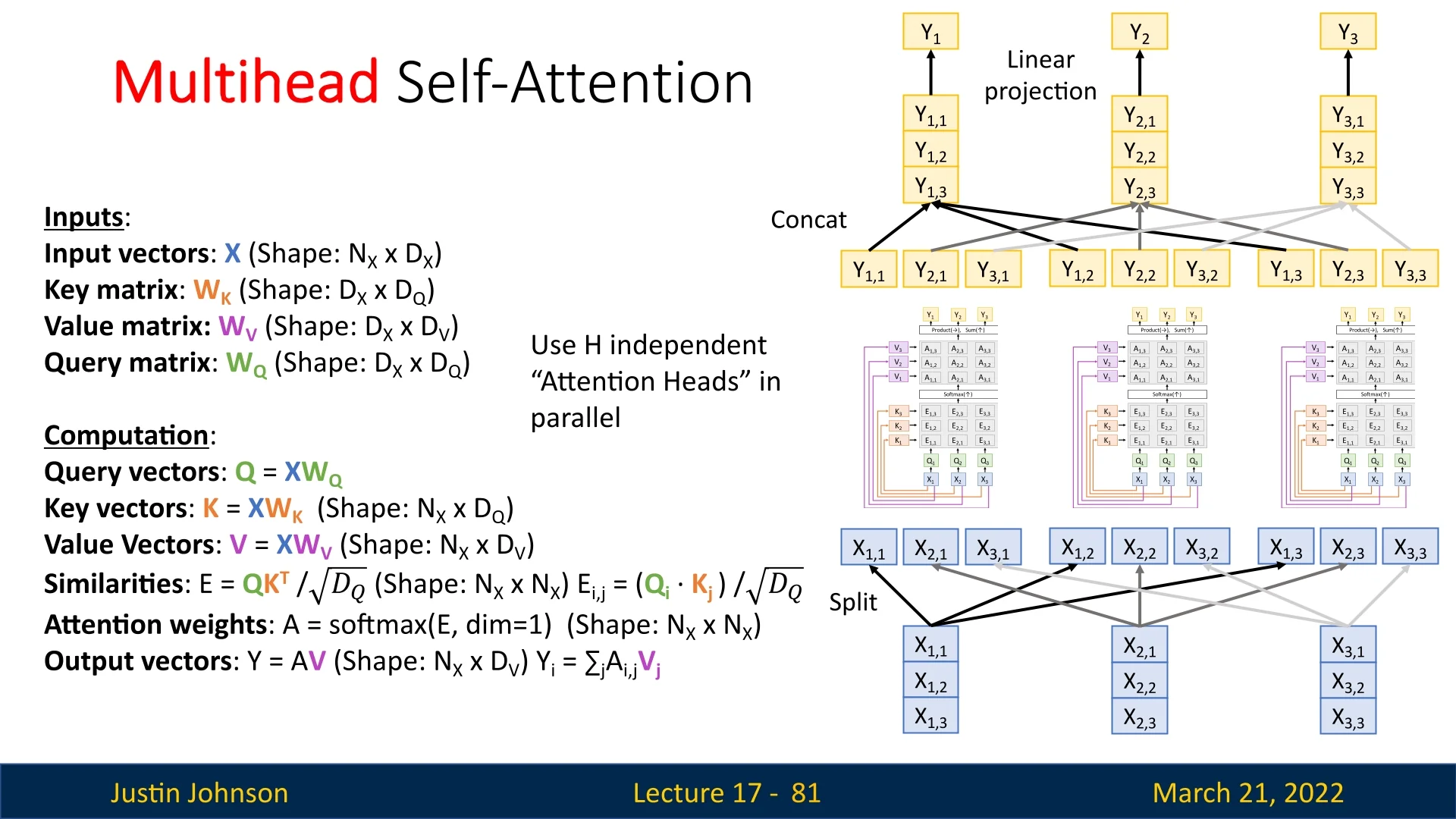

17.5 Self-Attention

The Self-Attention Layer extends the attention mechanism by enabling each element in an input sequence to compare itself with every element in the sequence. Unlike the Attention Layer described in subsection 17.4.5, where queries and inputs could originate from different sources, self-attention generates its queries, keys, and values from the same input set.

This formulation retains the same structure as the regular attention layer, with one key modification: instead of externally provided query vectors, we now predict them using a learnable transformation \( \textcolor [rgb]{0.471,0.694,0.318}{W_Q} \). The rest of the computations—including key and value transformations, similarity computations, and weighted summation—remain unchanged.

17.5.1 Mathematical Formulation of Self-Attention

Given an input set of vectors \( \textcolor [rgb]{0.267,0.447,0.769}{X} = \{\textcolor [rgb]{0.267,0.447,0.769}{X_1}, \dots , \textcolor [rgb]{0.267,0.447,0.769}{X_{N_X}}\} \), self-attention computes:

- Query Vectors: \( \textcolor [rgb]{0.471,0.694,0.318}{Q} = \textcolor [rgb]{0.267,0.447,0.769}{X} \textcolor [rgb]{0.471,0.694,0.318}{W_Q} \)

- Key Vectors: \( \textcolor [rgb]{0.929,0.502,0.212}{K} = \textcolor [rgb]{0.267,0.447,0.769}{X} \textcolor [rgb]{0.929,0.502,0.212}{W_K} \)

- Value Vectors: \( \textcolor [rgb]{0.769,0.369,0.800}{V} = \textcolor [rgb]{0.267,0.447,0.769}{X} \textcolor [rgb]{0.769,0.369,0.800}{W_V} \)

The computations proceed as follows:

\begin {equation} \textcolor [rgb]{0.236,0.236,0.236}{E} = \frac {\textcolor [rgb]{0.471,0.694,0.318}{Q} \textcolor [rgb]{0.929,0.502,0.212}{K^T}}{\sqrt {D_Q}}, \quad \textcolor [rgb]{0.236,0.236,0.236}{A} = \mbox{softmax}(\textcolor [rgb]{0.236,0.236,0.236}{E}, \mbox{dim} = 1), \quad \textcolor [rgb]{1.0,0.816,0.267}{Y} = \textcolor [rgb]{0.236,0.236,0.236}{A} \textcolor [rgb]{0.769,0.369,0.800}{V}. \end {equation}

As before, the output vector for each input \( \textcolor [rgb]{1.0,0.816,0.267}{Y_i} \) is computed as a weighted sum:

\begin {equation} \textcolor [rgb]{1.0,0.816,0.267}{Y_i} = \sum _j \textcolor [rgb]{0.236,0.236,0.236}{A_{i,j}} \textcolor [rgb]{0.769,0.369,0.800}{V_j}. \end {equation}

17.5.2 Non-Linearity in Self-Attention

At first glance, self-attention might appear to be a mostly linear mechanism—performing dot products between \(\mathbf {Q}\) and \(\mathbf {K}\), then using those results to weight \(\mathbf {V}\). However, there is an important source of non-linearity that makes self-attention more expressive than purely linear transformations:

- Softmax Non-Linearity: Once we compute the raw attention scores \(\mathbf {E} = \mathbf {Q}\mathbf {K}^\top / \sqrt {D_Q}\), we normalize each row (per-query) using a softmax: \[ \mathbf {A} = \mathrm {softmax}(\mathbf {E}, \mbox{dim} = 1). \] This softmax operation is an explicit non-linear function [644], ensuring adaptive weighting of each key-value pair and preventing the raw dot products from dominating the final distribution. Unlike purely linear layers that weigh inputs in a fixed manner, softmax-based weighting can concentrate or diffuse attention in a data-dependent way.

- Context-Dependent Weighting: In convolution, filters are fixed spatial kernels that move over the input. In contrast, self-attention dynamically alters how each token (or feature element) attends to every other element [22, 644]. The weighting depends on both the query vector \(\mathbf {Q}\) and the key vectors \(\mathbf {K}\), reflecting learned interactions. This “context dependence” is another key source of non-linearity because the softmax weighting is not a simple linear map but a function of pairwise similarities.

Hence, while self-attention does not apply an explicit activation function (like ReLU) within the dot product, the softmax normalization and token-by-token dynamic weighting create a highly flexible, non-linear transformation of the input \(\mathbf {V}\). In practice, self-attention layers are often combined with additional feedforward networks (including ReLU-like activations) in architectures such as the Transformer [644], further increasing their representational power.

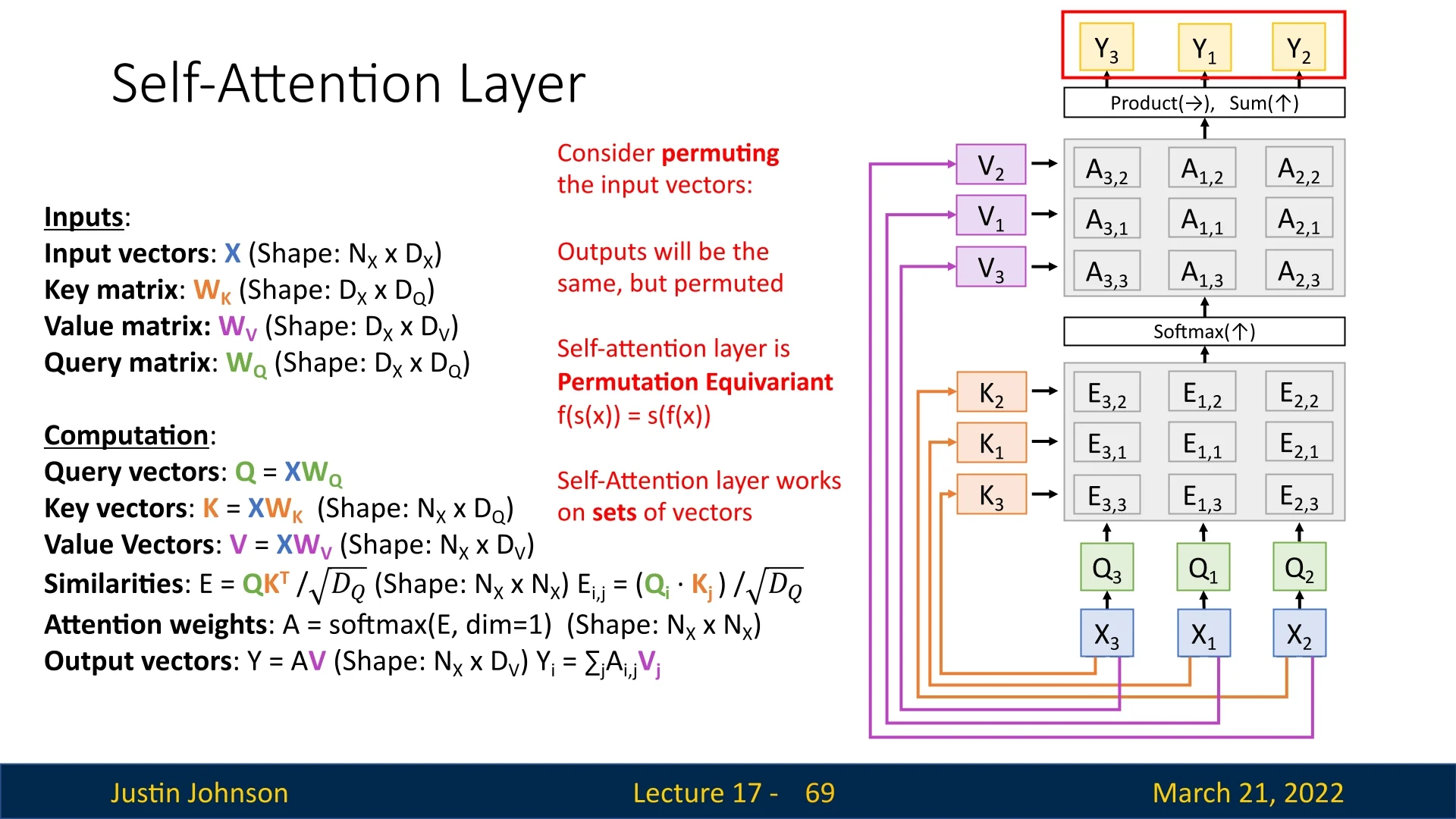

17.5.3 Permutation Equivariance in Self-Attention

Self-attention is inherently permutation equivariant, meaning that permuting the input sequence results in the outputs being permuted in the same way. Formally, let:

- \( f \) be the self-attention function mapping inputs to outputs.

- \( s \) be a permutation function reordering the input sequence.

The property is expressed as:

\begin {equation} f(s(\textcolor [rgb]{0.267,0.447,0.769}{X})) = s(f(\textcolor [rgb]{0.267,0.447,0.769}{X})). \end {equation}

This means that permuting the inputs and then applying self-attention yields the same result as applying self-attention first and then permuting the outputs.

For instance, in Figure 17.12, permuting the input sequence from \(\{X_1, X_2, X_3\}\) to \(\{X_3, X_2, X_1\}\) results in the outputs being permuted to \(\{Y_3, Y_2, Y_1\}\).

When is Permutation Equivariance a Problem?

While permutation equivariance is desirable in tasks that operate on unordered sets (e.g., point cloud processing, certain graph-based tasks), it poses challenges in tasks where sequence order is essential:

- Natural Language Processing (NLP): Word order carries critical meaning. The sentence ”The cat chased the mouse” conveys a different meaning than ”The mouse chased the cat.” A purely permutation-equivariant model would fail to differentiate these cases.

- Image Captioning: The order in which words are generated is crucial. If self-attention does not respect positional information, a model could struggle to generate coherent descriptions.

- Time-Series Analysis: Sequential dependencies (e.g., stock market trends, weather forecasting) require an understanding of past-to-future relationships, which are lost if order is ignored.

To address this, we introduce positional encodings, which explicitly encode sequence order into self-attention models, ensuring that position-dependent tasks retain meaningful structure. Hence, we’ll now explore how positional encodings are designed and integrated into self-attention mechanisms.

17.5.4 Positional Encodings: Introduction

Self-Attention layers, unlike RNNs, do not have an inherent sense of sequence order since they process all tokens in parallel. To incorporate positional information, positional encodings are added to input embeddings before passing them into the model. Two common approaches exist: fixed sinusoidal positional encodings and learnable positional embeddings. Below, we examine the key differences and motivations for using each approach.

Why Not Use Simple Positional Indices?

- Simple Positional Indexing. \begin {equation} \label {eq:simple_index} P_t = t, \quad t \in [1, N], \end {equation} where \( N \) is the sequence length.

-

Drawbacks of Simple Indexing.

- Numerical Instability. Large positional indices may lead to gradient explosion or saturation in deep networks, and can dominate the scale of content embeddings.

- Poor Generalization. If training sequences are shorter than test sequences, the model may fail to generalize to unseen positions.

- Lack of Relative Positioning. Absolute indexing requires the model to learn how index differences influence attention from scratch. The model does not inherently recognize distance relationships, making it inefficient for learning locality-sensitive patterns.

- Normalized Positional Indexing.

As an alternative to simple positional indices, one could normalize indices to a fixed range. This approach keeps positional values bounded: \begin {equation} \label {eq:normalized_index} P_t = \frac {t}{N-1}, \quad t \in [0, N-1]. \end {equation} -

Drawbacks of Normalized Indexing.

- Inconsistency Across Lengths. The same absolute position \(t\) will map to different normalized values depending on \(N\). Consequently, a model cannot associate a stable representation with “token 10” across inputs of varying length.

-

Variable Relative Distances (The “Rubber Band” Effect). Normalization also distorts relative geometry. The normalized distance between adjacent positions is \begin {equation} \label {eq:normalized_step} P_{t+1} - P_t = \frac {1}{N-1}. \end {equation} Thus, the meaning of “one step forward” depends on the total sequence length. For example:

- If \(N=4\), then \(P_t = [0, \tfrac {1}{3}, \tfrac {2}{3}, 1]\), so adjacent tokens differ by \(\tfrac {1}{3}\).

- If \(N=10\), then \(P_t = [0, \tfrac {1}{9}, \tfrac {2}{9}, \dots , 1]\), so adjacent tokens differ by \(\tfrac {1}{9}\).

In other words, the positional space is stretched or compressed as \(N\) changes. This forces the attention mechanism to relearn what “local” means for each length, complicating the learning of reusable patterns such as “attend strongly to the immediate neighbor” or “favor a fixed offset of \(k\) tokens”.

These issues motivate a more principled positional scheme whose functional form is independent of \(N\) and whose structure makes relative offsets easier to recover. This intuition leads naturally to the sinusoidal positional encodings introduced in the original Transformer, which are bounded, length-agnostic, and designed so that fixed offsets correspond to predictable transformations in the encoding space.

17.5.5 Sinusoidal Positional Encoding

When Vaswani et al. introduced the Transformer, they faced a fundamental structural limitation of self-attention: without recurrence or convolution, the layer has no built-in notion of token order. A sentence such as “The dog bit the man” contains the same set of tokens as “The man bit the dog”, yet the meaning changes because the order changes. Hence, the model must be given an explicit and systematic notion of position.

The original solution is sinusoidal positional encoding, a fixed, parameter-free function that maps each position index \(t\) to a vector \(\mathbf {p}_t \in \mathbb {R}^{d}\). This vector is added to the content embedding before the first attention layer: \begin {equation} \label {eq:chapter17_pe_addition} \mathbf {x}'_t = \mathbf {x}_t + \mathbf {p}_t, \end {equation} so that every subsequent layer can condition jointly on what the token is and where it appears.

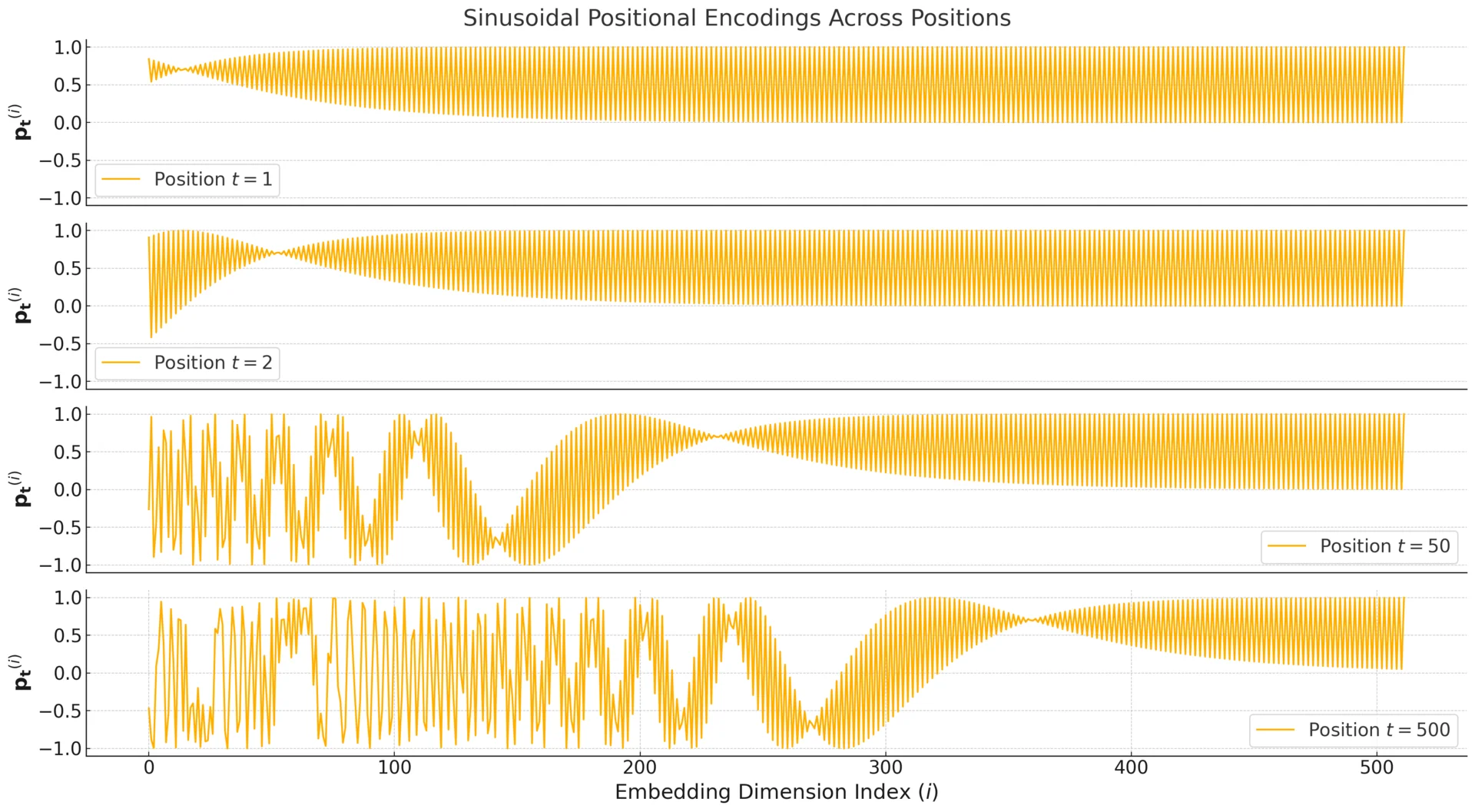

Mathematical Definition and Frequency Bands

Let \(d\) denote the embedding dimension (and the positional encoding dimension), typically matching the model width (e.g., \(d=512\) in the original Transformer). The sinusoidal encoding partitions the \(d\) coordinates into \(\frac {d}{2}\) frequency bands. Each band contributes exactly two dimensions: one sine and one cosine component at the same angular frequency. This pairing is why \(d\) is chosen to be even in practice.

For each band index \(k \in \{0,1,\dots ,\frac {d}{2}-1\}\), define: \begin {equation} \label {eq:chapter17_sinusoidal_encoding} \begin {aligned} \mathbf {p}_t(2k) &= \sin (\omega _k t),\\ \mathbf {p}_t(2k+1) &= \cos (\omega _k t), \end {aligned} \qquad \omega _k = \frac {1}{10000^{\frac {2k}{d}}}. \end {equation}

Equivalently, for a dimension index \(i \in \{0,1,\dots ,d-1\}\), the definition pairs sine and cosine in adjacent dimensions by setting \(i=2k\) and \(i=2k+1\). The key symbols are:

- \(\displaystyle t\): the absolute position index within the sequence (e.g., \(0,1,2,\dots \)).

- \(\displaystyle d\): the encoding dimension, equal to the token embedding dimension.

- \(\displaystyle k\): the frequency-band index that groups each \(\sin \)–\(\cos \) pair.

- \(\displaystyle \omega _k\): the angular frequency assigned to band \(k\).

The band index \(k\) therefore controls how quickly the corresponding two coordinates change with \(t\). Small \(k\) yields large \(\omega _k\) (rapid oscillations), while large \(k\) yields small \(\omega _k\) (slow oscillations). Stacking \(\frac {d}{2}\) such bands gives a single vector \(\mathbf {p}_t\) that can represent both fine-grained and coarse positional structure simultaneously.

Intuition: A Multi-Scale, Continuous Counter

A helpful way to interpret sinusoidal positional encoding is to treat it as a multi-scale representation of the integer index \(t\). Instead of describing position with a single scalar (such as \(t\) or a normalized \(\tfrac {t}{N}\)), the Transformer assigns each position a vector \(\mathbf {p}_t \in \mathbb {R}^d\) that combines \(\tfrac {d}{2}\) frequency bands. Each band \(k\) contributes a two-dimensional coordinate that records the phase of \(\omega _k t\): \[ \mathbf {b}^{(k)}_t = \begin {bmatrix} \sin (\omega _k t)\\ \cos (\omega _k t) \end {bmatrix}, \qquad \mathbf {p}_t = \bigl [ \mathbf {b}^{(0)}_t \; \mathbf {b}^{(1)}_t \; \cdots \; \mathbf {b}^{(\frac {d}{2}-1)}_t \bigr ]. \] Thus, \(\mathbf {p}_t\) can be viewed as a snapshot of many “position sensors” operating at different temporal resolutions.

This resembles the behavior of a binary counter: low-order bits change frequently, whereas high-order bits change slowly and encode coarse location. Here, the discrete flips are replaced by smooth oscillations. The key advantage over naive indexing is scale coverage: \(\mathbf {p}_t\) does not compress position into a single number whose meaning depends on sequence length. Instead, it distributes positional information across many frequencies, so the model can reliably detect both small and large positional changes.

This structure supplies two complementary signals:

- Local sensitivity. High-frequency bands (small \(k\)) respond strongly to \(t \rightarrow t+1\), so adjacent tokens receive clearly different positional vectors. This makes short-range order patterns easy to learn.

- Global context. Low-frequency bands (large \(k\)) change slowly, so they separate far-apart positions even when high-frequency bands have already cycled through many periods. This helps the model distinguish broad regions of a long sequence.

Why Sine and Cosine Pairs?

A single sinusoid is periodic and therefore ambiguous when used alone. For instance, \(\sin (\pi /6)=\sin (5\pi /6)\), so two distinct angles can share the same sine value. By pairing \(\sin (\omega _k t)\) with \(\cos (\omega _k t)\), each band \(k\) becomes a point on the unit circle that specifies the phase of \(\omega _k t\) in two dimensions: \[ \mathbf {b}^{(k)}_t = \bigl (\sin (\omega _k t), \cos (\omega _k t)\bigr ). \] Even if two positions produce similar sine values for a given band, their cosine values typically differ. Across \(\tfrac {d}{2}\) bands, the concatenated vector \(\mathbf {p}_t\) becomes a high-dimensional positional signature without requiring learned parameters.

Why the Base \(10000\)? Wavelength Coverage

The constant \(10000\) controls the range of frequencies and thus the positional “resolutions” available to the model. Because the exponent \(\frac {2k}{d}\) grows linearly with \(k\), the frequencies \(\omega _k\) decrease exponentially. This yields a geometric progression of wavelengths. For band \(k\), the wavelength is: \begin {equation} \label {eq:chapter17_wavelength} \lambda _k = \frac {2\pi }{\omega _k} = 2\pi \cdot 10000^{\frac {2k}{d}}. \end {equation}

This construction ensures that the encoding contains:

- Very short wavelengths in early bands, which sharply separate adjacent positions.

- Very long wavelengths in late bands, which change meaningfully only across large distances.

In typical settings (e.g., \(d=512\)), this spans a wide but numerically stable spectrum of positional scales while keeping all coordinates bounded in \([-1,1]\).

Concrete Frequency Micro-Example For a toy dimension \(d=8\), the four frequency bands are: \[ \omega _0 = 1, \quad \omega _1 = 10000^{-0.25} = \frac {1}{10}, \quad \omega _2 = 10000^{-0.5} = \frac {1}{100}, \quad \omega _3 = 10000^{-0.75} = \frac {1}{1000}. \] The first pair thus changes rapidly with \(t\), while the last pair changes very slowly, illustrating how the construction allocates both fine and coarse positional resolution.

Frequency Variation and Intuition

A clearer way to interpret the frequency sweep is to view each band as a positional sensor defined by a distinct angular frequency \(\omega _k\). For small \(k\), \(\omega _k\) is large and the band is highly sensitive to single-step changes. For large \(k\), \(\omega _k\) is small and the band changes slowly, so it provides stable separation over long distances.

This layered design prevents two complementary failure modes:

- If only slow bands existed, \(\mathbf {p}_t\) and \(\mathbf {p}_{t+1}\) would be nearly identical, weakening the model’s ability to detect fine-grained order.

- If only fast bands existed, the positional patterns would repeat frequently, and far-apart positions could become difficult to distinguish reliably.

The geometric spacing of \(\omega _k\) is therefore essential: it distributes sensitivity smoothly across scales. As a result, the full vector \(\mathbf {p}_t\) retains information about both small offsets (e.g., neighbor relations, short phrases) and large offsets (e.g., clause-level or document-level structure), which aligns with the range of dependencies that attention must model.

Concrete Example: “I Can Buy Myself Flowers”

To make the multi-scale behavior tangible, consider a simplified case with \(d=4\), which yields two bands: a high-frequency pair with \(\omega _0=1\) and a low-frequency pair with \[ \omega _1=\frac {1}{10000^{2/4}}=\frac {1}{100}=0.01. \] Their wavelengths differ sharply: \[ \lambda _0 = 2\pi \approx 6.28, \qquad \lambda _1 = \frac {2\pi }{0.01} = 200\pi \approx 628. \] Thus, Band 0 completes a full cycle every few tokens, while Band 1 changes perceptibly only over hundreds of tokens.

Concretely, the positional vector is \[ \mathbf {p}_t = \bigl [ \sin (t), \cos (t), \sin (0.01t), \cos (0.01t) \bigr ]. \] For early positions, the first pair provides strong local separation: moving from \(t=0\) to \(t=1\) produces a large, easily detectable change. The second pair provides a slow baseline: it changes very little across \(t=0,1,2\), but would meaningfully differentiate positions that are hundreds of tokens apart.

| Token | Pos (\(t\)) | Band 0 (\(\omega _0=1\)) | Band 1 (\(\omega _1=0.01\))

| ||

|---|---|---|---|---|---|

| \(\sin (t)\) | \(\cos (t)\) | \(\sin (0.01t)\) | \(\cos (0.01t)\) | ||

| I | 0 | \(0\) | \(1\) | \(0\) | \(1\) |

| Can | 1 | \(\approx 0.84\) | \(\approx 0.54\) | \(\approx 0.01\) | \(\approx 1.00\) |

| Buy | 2 | \(\approx 0.91\) | \(\approx -0.42\) | \(\approx 0.02\) | \(\approx 1.00\) |

This toy case mirrors the full design: a real Transformer allocates many more bands, so that this fast-to-slow coverage becomes dense across the embedding space. The resulting \(\mathbf {p}_t\) is therefore not a single-scale counter, but a multi-scale positional fingerprint.

How Relative Position Awareness Emerges

The value of sinusoidal encoding is not only that it labels absolute positions, but also that it makes relative distance visible to attention computations in a systematic way. This can be seen by examining a single band \(k\). Define the 2D band vector: \[ \mathbf {b}_t^{(k)} = \begin {bmatrix} \sin (\omega _k t)\\ \cos (\omega _k t) \end {bmatrix}. \] Using trigonometric identities, the inner product between two positions separated by an offset \(o\) satisfies: \begin {equation} \label {eq:chapter17_band_inner_product} \left (\mathbf {b}_t^{(k)}\right )^\top \mathbf {b}_{t+o}^{(k)} = \cos (\omega _k o). \end {equation}

Crucially, \(\cos (\omega _k o)\) depends only on the offset \(o\), not on the absolute position \(t\). Thus, within each band, the similarity of two positional codes is a direct, frequency-specific function of distance. Short offsets and long offsets produce different similarity signatures.

Because the full positional vector \(\mathbf {p}_t\) concatenates \(\frac {d}{2}\) such bands, the aggregate positional similarity becomes a multi-scale signature of distance: \[ \mathbf {p}_t^\top \mathbf {p}_{t+o} = \sum _{k=0}^{\frac {d}{2}-1} \cos (\omega _k o). \] Self-attention relies on dot products between linearly projected token representations. After \(\mathbf {p}_t\) is added to content embeddings, these dot-product comparisons can naturally incorporate this structured distance signal. As a result, the model can learn attention patterns that respond differently to short-range and long-range offsets using the same positional construction, without depending on a separate learned table of relative positions.

Does Positional Information Vanish in Deeper Layers?

One might worry that adding positional information only at the input could cause it to be diluted in deep models. In practice, Transformer design choices mitigate this concern:

- Residual connections. These preserve positional and content cues across layers.

- Layer normalization. This maintains stable signal scales and training dynamics, keeping positional information usable throughout depth.

Why Sinusoidal Encoding Addresses Simpler Schemes

Compared with naive approaches such as raw indices or single-scale normalizations, sinusoidal encodings provide:

- Multi-scale coverage. Different frequencies simultaneously support local order resolution and long-range separation.

- A consistent distance signal. Within each frequency band, similarity depends directly on the relative offset.

- Parameter-free generality. The encoding is fixed, stable, and defined for arbitrary \(t\), enabling principled use on longer sequences.

Conclusion on Sinusoidal Positional Encoding

Sinusoidal positional encoding is an effective, parameter-free solution to the permutation-insensitivity of self-attention. Its carefully spaced frequency bands provide a single representation that preserves information about both small and large positional differences, and the resulting dot-product structure makes relative distance naturally visible to attention computations. However, because the geometry is fixed, it cannot adapt to dataset-specific structure. We therefore next consider learnable positional embeddings, which trade this fixed formulation for increased flexibility.

17.5.6 Learned Positional Encodings: An Alternative Approach

While sinusoidal encodings provide a fixed and mathematically structured scheme, another widely used strategy is to learn positional representations directly from data. In this approach, position is not generated by a function \(f(t)\). Instead, the model allocates a separate trainable vector to each absolute index up to a predefined maximum length. Concretely, every position \( t \in \{0,\dots ,N_{\max }-1\} \) is assigned a learnable embedding \( \mathbf {P}_t \in \mathbb {R}^d \), which is added to the token embedding before the first self-attention block. This section explains the mechanism, motivates when learned absolute encodings are a natural fit, and highlights their core limitations relative to sinusoidal encodings.

Definition and Mechanics: A Trainable Embedding Matrix Implementation-wise, learned absolute positional encodings are realized as a parameter matrix \( \mathbf {P} \in \mathbb {R}^{N_{\max }\times d} \), analogous to the token embedding matrix. Calling this structure a “lookup table” is accurate in the engineering sense: at inference time, the model simply selects the row corresponding to index \(t\). Formally, \[ \mathbf {P}_t = \mathbf {P}[t], \qquad \mathbf {P}_t \in \mathbb {R}^d. \] These vectors are initialized randomly and updated via backpropagation alongside all other Transformer parameters. The input representation at position \(t\) becomes \[ \mathbf {x}'_t = \mathbf {x}_t + \mathbf {P}_t, \] so the network can learn how much and in what direction each absolute index should bias the content embedding. If the task repeatedly rewards a distinctive behavior at a specific index, gradients can directly sculpt \(\mathbf {P}_t\) into a strong positional marker for that role.

What This Design Assumes Learned absolute embeddings implicitly assume a known or stable maximum length \(N_{\max }\). Unlike sinusoids, which define \(\mathbf {p}_t\) for all integers \(t\), the learned scheme only stores parameters for indices \(0\) through \(N_{\max }-1\). Thus, the representation is flexible within the trained window, but not inherently designed for arbitrary-length extrapolation.

Examples of Learned Absolute Positional Encodings

- BERT (Bidirectional Encoder Representations from Transformers) [120]: BERT uses learned absolute positional embeddings within a fixed maximum length. This choice aligns with the model’s emphasis on sentence- and segment-level structure, where certain absolute locations, boundary-adjacent tokens, and special markers (e.g., [CLS] and [SEP]) are consistently meaningful. A learned table can assign these frequently used indices distinctive vectors that are directly optimized for the downstream objectives.

- GPT (Generative Pre-trained Transformer) [496]: Early GPT models also adopt learned absolute positional embeddings for causal sequence modeling, allowing the model to tune position-specific vectors for next-token prediction within a fixed context window. This is especially natural when training and inference contexts are closely matched.

- Vision Transformers (ViT) [133]: ViT uses learned positional embeddings for sequences of image patches. Here the “sequence” is derived from a (roughly) fixed spatial grid of patches, so learning a distinct embedding per patch index can help the model capture dataset-specific regularities about spatial layout. In practice, when transferring across image resolutions, these embeddings are commonly resized by interpolation, which preserves a useful initialization but also underscores that the method is tied to an assumed maximum grid size.

Notably, some later designs replace learned absolute embeddings with explicitly relative mechanisms. For example, T5 uses relative position biases rather than input-added learned absolute vectors [501]. We discuss such relative schemes separately when comparing absolute and relative approaches.

Where Learned Embeddings Can Be Especially Useful? Learned absolute embeddings tend to be most attractive when two conditions hold: the domain has a stable maximum length and the data exhibits index-specific conventions. Several examples illustrate this pattern:

- Fixed-format text and documents. In structured templates, forms, or code-like formats, particular indices can reliably mark headers, separators, or standardized fields. A learned table can store a strong, position-specific marker that the model can exploit immediately, rather than requiring deeper layers to amplify a smooth functional signal.

- Patch-based vision. When images are represented as a consistent grid of patches, absolute index can correlate with stable spatial priors introduced by dataset construction (e.g., object-centered crops). Learned embeddings can absorb such priors directly into the position space.

- Well-bounded biological or symbolic sequences. If sequences follow standardized length conventions or contain known anchor regions, learned embeddings can encode these regularities as explicit positional signatures.

Intuition: A “Ruler” Versus a “Trainable Index Map”. A concise way to contrast learned and sinusoidal approaches is the following analogy:

- Sinusoidal encoding resembles a ruler. It provides a smooth, multi-scale coordinate system. Because it is a function of \(t\), it naturally defines encodings beyond the lengths seen during training and imposes a consistent notion of distance-related structure.

- Learned encoding resembles a trainable index map. The model assigns a dedicated vector to each slot and can tune these slots to match the data distribution. If a particular index plays a special role, the model can encode that role directly in \(\mathbf {P}_t\), without requiring downstream layers to “sharpen” a smooth wave into a boundary signal.

Handling Longer Sequences Than Seen in Training A natural concern is how learned absolute embeddings handle sequences longer than the maximum length used to define the table. The strict answer is that they do not generalize automatically: if \(L > N_{\max }\), there is no learned vector \(\mathbf {P}_{L-1}\) to retrieve. Common practical responses include:

- Truncation (common in NLP). Inputs are clipped to \(N_{\max }\), which preserves correctness but discards long-range context.

- Table extension with further training. One can expand \(\mathbf {P}\) to a larger \(N_{\max }\), initialize new rows (often randomly or by copying/interpolating existing patterns), and continue training. This is an engineering fix rather than a principled extrapolation guarantee.

- Interpolation (common in ViT). When changing patch grid size, existing 2D positional embeddings are resized to the new resolution, providing a smooth transfer initialization but not the same kind of length-agnostic behavior offered by functional encodings.

These workarounds underscore the central trade-off: learned absolute encodings are highly adaptable within the trained regime but are weaker for robust length generalization.

Pros & Cons of Learned Positional Embeddings

Pros:

- Adaptable to complex positional semantics. Since each position \(t\) has its own vector \(\mathbf {P}_t\), the model can represent idiosyncratic index-dependent patterns that do not follow a smooth or periodic trend. This is valuable when the data contains sharp, index-specific roles (e.g., template headers, special tokens, or standardized fields).

- Task-specific optimization. The embeddings \(\{\mathbf {P}_0,\dots ,\mathbf {P}_{N_{\max }-1}\}\) are learned jointly with the rest of the model, allowing the network to calibrate how absolute indices shape local and global dependencies in the target domain. This can be a capacity-efficient way to encode “positional special cases,” reducing the need for deeper layers to derive the same emphasis from a fixed functional pattern.

- Empirical gains in some settings. Several studies report that learned absolute embeddings can match or outperform fixed encodings in tasks where sequence lengths are well-bounded and positional conventions are strong [284, 561].

Cons:

- Limited extrapolation to longer sequences. If training and inference lengths exceed \(N_{\max }\), the model has no learned vectors for unseen positions without modifying or extending the table. By contrast, sinusoidal encodings define \(\mathbf {p}_t\) for all integer \(t\).

- Increased parameterization and memory. A unique vector per position yields \(N_{\max }\times d\) additional parameters. This overhead is modest for small windows but grows linearly with context length.

- Reduced structural transparency. Unlike sinusoidal encodings, learned absolute embeddings do not impose a guaranteed functional relationship between distance \(|i-j|\) and positional similarity. Understanding how \(\mathbf {P}_i\) and \(\mathbf {P}_j\) relate typically requires post-hoc analysis, and behavior at rarely observed indices may be less predictable.

Conclusion on Learned Positional Embeddings Learned absolute positional encodings offer a flexible alternative to sinusoidal functions. They are particularly attractive when sequence length is well-bounded and the domain exhibits index-specific structure that benefits from explicit positional markers, as seen in early LLMs and patch-based vision models [120, 496, 133]. However, this flexibility comes with limited extrapolation to longer sequences, additional parameters, and weaker built-in structure across distances. In practice, the choice between fixed sinusoidal encodings and learned absolute embeddings depends on whether the application prioritizes robust length generalization or domain-specific positional specialization [283].

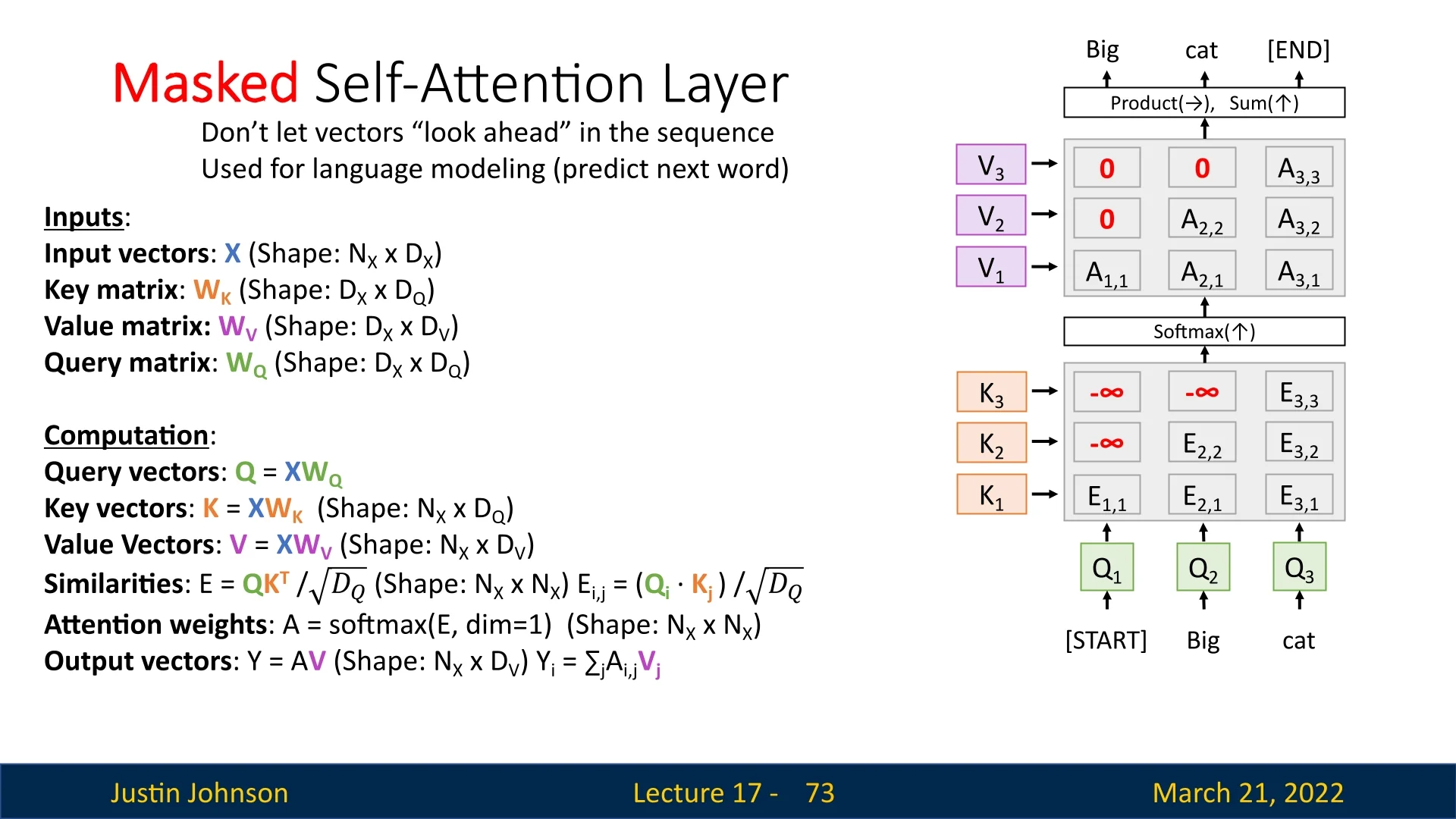

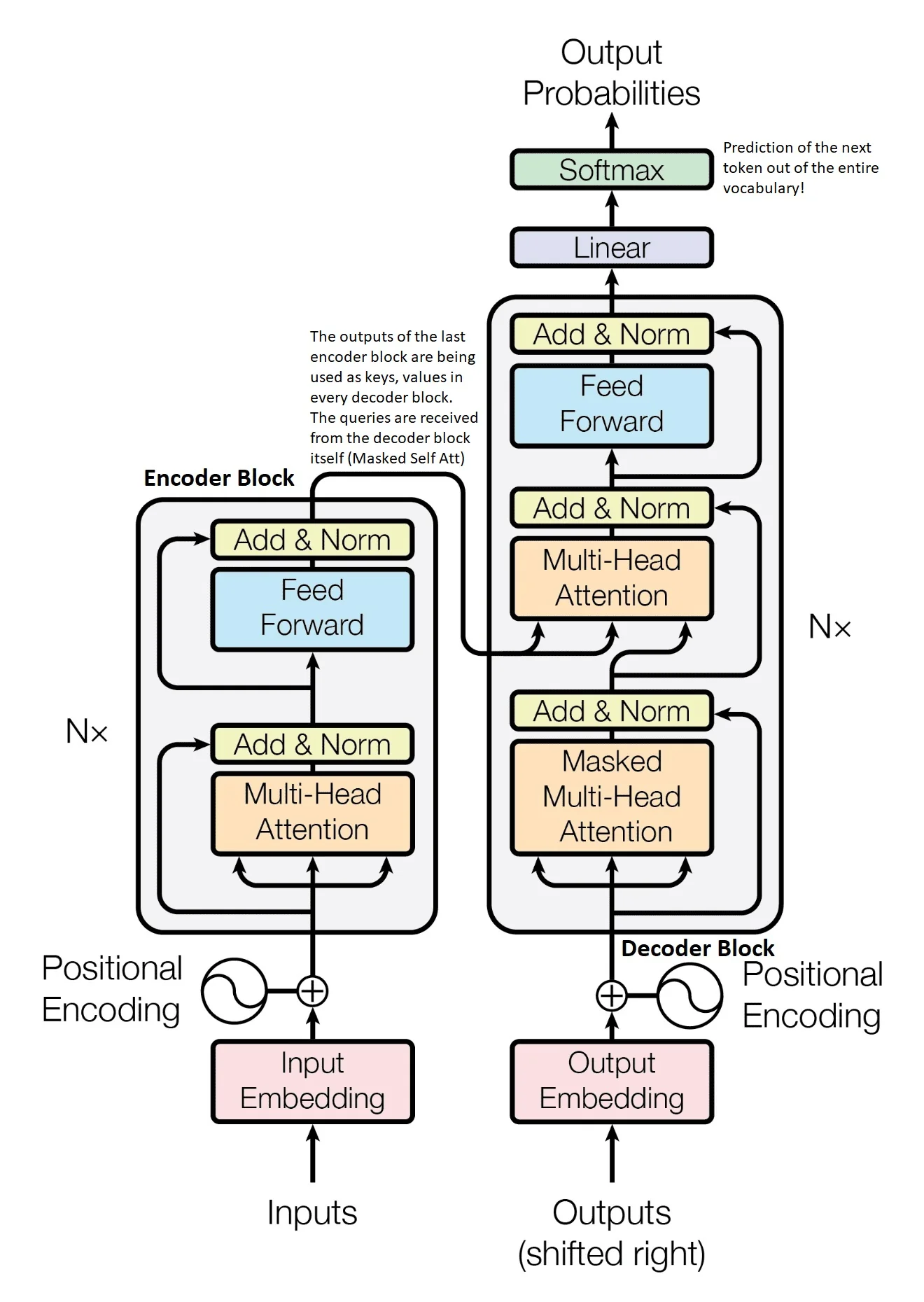

17.5.7 Masked Self-Attention Layer

While standard self-attention allows each token to attend to every other token in the sequence, there are many tasks where we need to enforce a constraint that prevents tokens from ”looking ahead” at future elements. This is particularly important in auto-regressive models, such as language modeling, where each token should be predicted solely based on previous tokens. Without such constraints, the model could trivially learn to predict future tokens by directly attending to them, preventing it from developing meaningful contextual representations.

Why Do We Need Masking?

Consider a language modeling task, where we predict the next word in a sequence given the previous words. If the attention mechanism allows tokens to attend to future positions, the model can directly ”cheat” by looking at the next word instead of learning meaningful dependencies. This would make training ineffective for real-world applications.

In a standard self-attention layer, the attention mechanism computes a set of attention scores for each query vector \( Q_i \), allowing it to interact with all key vectors \( K_j \), including future positions. To prevent this, we introduce a mask that selectively blocks future positions in the similarity matrix \( E \).

Applying the Mask in Attention Computation

The core modification to self-attention is to introduce a mask \( M \) that forces the model to only attend to previous and current positions. The modified similarity computation is:

\begin {equation} E = \frac {Q K^T}{\sqrt {D_Q}} + M, \end {equation}

where \( M \) is a lower triangular matrix with \(-\infty \) in positions where future tokens should be ignored:

\[ M_{i,j} = \begin {cases} 0, & \mbox{if } j \leq i \\ -\infty , & \mbox{if } j > i \end {cases} \]

This ensures that for each token \( Q_i \), attention scores \( E_{i,j} \) for future positions \( j > i \) are set to \(-\infty \), effectively preventing any influence from those tokens.

How Masking Affects the Attention Weights

After computing the masked similarity scores, we apply the softmax function:

\begin {equation} A = \mbox{softmax}(E, \mbox{dim}=1). \end {equation}

Since the softmax function normalizes exponentiated values, setting an element of \( E \) to \(-\infty \) ensures that its corresponding attention weight becomes zero:

\[ A_{i,j} = \begin {cases} \frac {e^{E_{i,j}}}{\sum _{k \leq i} e^{E_{i,k}}}, & \mbox{if } j \leq i \\ 0, & \mbox{if } j > i \end {cases} \]

This guarantees that tokens only attend to previous or current tokens, enforcing the desired auto-regressive structure.

Example of Masking in a Short Sequence

Consider the example sentence [START] Big cat. We want to enforce the following constraints:

- \( Q_1 \) (corresponding to [START]) should only depend on itself, meaning it does not attend to future tokens.

- \( Q_2 \) (corresponding to Big) can see the previous word but not the future one.

- \( Q_3 \) (corresponding to cat) has access to all previous tokens but no future ones.

As a result, in the normalized attention weights matrix \( A \), all masked positions will have values of zero, ensuring that no information from future tokens influences the current prediction.

Handling Batches with Variable-Length Sequences

Another crucial application of masking in self-attention is handling batches with sequences of varying lengths. In many real-world tasks, sentences or input sequences in a batch have different lengths, meaning that shorter sequences need to be padded to match the longest sequence in the batch. However, self-attention naively treats all inputs equally, including the padding tokens, which can introduce noise into the attention computations.

To prevent this, we introduce a second type of mask: the padding mask, which ensures that attention does not consider padded tokens.

- Efficient Batch Processing: Modern hardware (e.g., GPUs) processes inputs as fixed-size tensors. Padding ensures that all sequences in a batch fit within the same tensor dimensions.

- Avoiding Attention to Padding Tokens: Without masking, the model could mistakenly assign attention weights to padding tokens, distorting the learned representations.

The padding mask is a binary mask \( P \) defined as:

\[ P_{i,j} = \begin {cases} 0, & \mbox{if } j \mbox{ is a real token} \\ -\infty , & \mbox{if } j \mbox{ is a padding token} \end {cases} \]

The modified similarity computation incorporating both autoregressive masking and padding masking is:

\begin {equation} E = \frac {Q K^T}{\sqrt {D_Q}} + M + P. \end {equation}

This ensures that both future tokens and padding tokens are ignored, allowing self-attention to operate effectively on batched data.

Moving on to Input Processing with Self-Attention

The introduction of masked self-attention allows us to process sequences in a parallelized manner while ensuring the integrity of auto-regressive constraints and variable-length handling. In the next part, we explore how batched inputs are processed efficiently using self-attention, applying these masking techniques in practice.

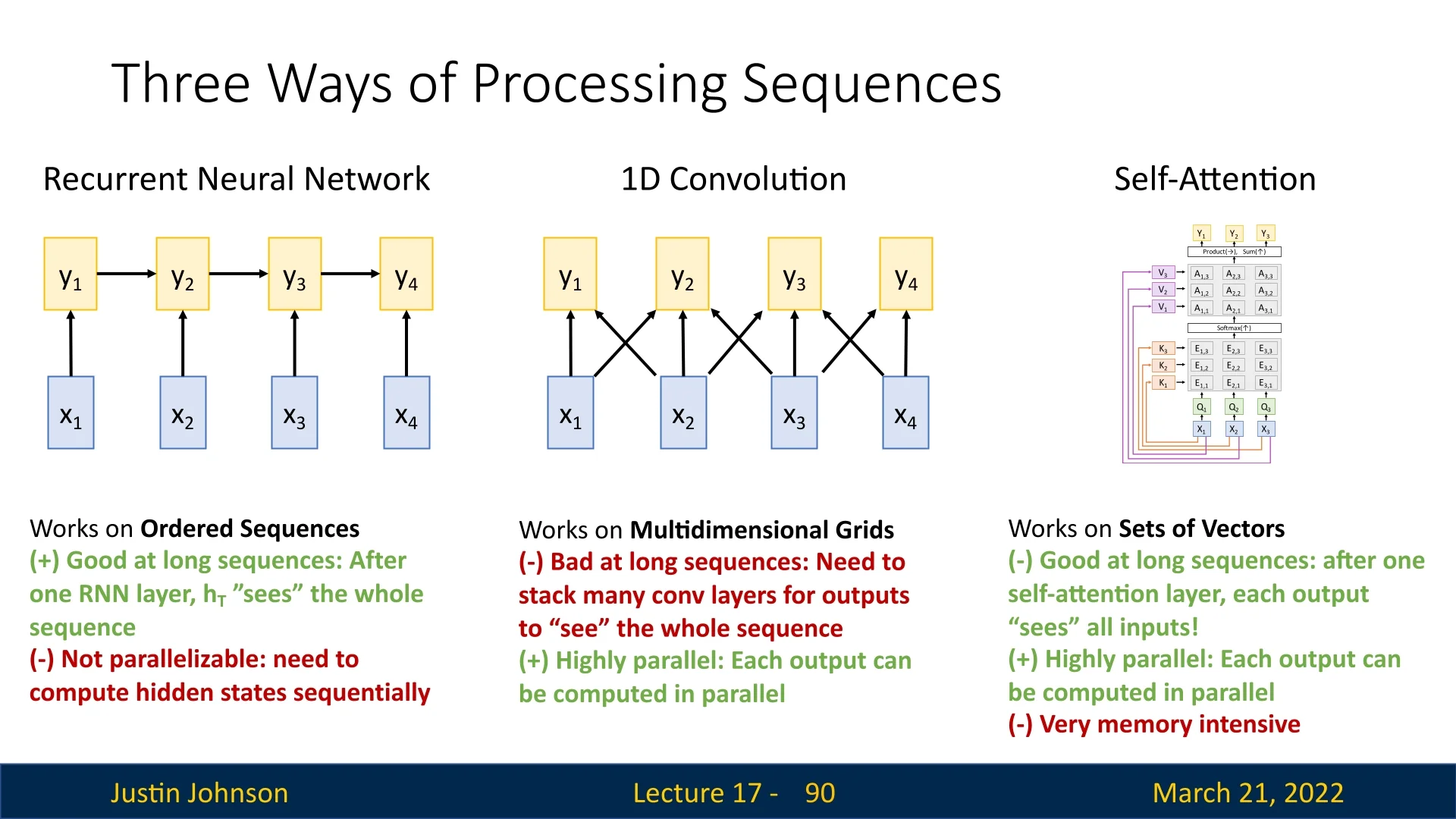

17.5.8 Processing Inputs with Self-Attention

One of the key advantages of self-attention is its ability to process inputs in parallel, unlike recurrent neural networks (RNNs), which require sequential updates. This parallelization is made possible because self-attention computes attention scores between all input elements simultaneously using matrix multiplications. Instead of iterating step-by-step through a sequence, the self-attention mechanism allows each element to attend to all others in a single pass, dramatically improving computational efficiency.

Parallelization in Self-Attention

Unlike RNNs, which maintain a hidden state and process tokens sequentially, self-attention operates on the entire input sequence simultaneously. Consider the following PyTorch implementation of self-attention:

import torch

import torch.nn.functional as F

def self_attention(X, W_q, W_k, W_v):

"""

Computes self-attention for input batch X.

X: Input tensor of shape (batch_size, seq_len, d_x)

W_q, W_k, W_v: Weight matrices for queries, keys, and values

(each of shape (d_x, d_q), (d_x, d_q), (d_x, d_v))

"""

Q = torch.matmul(X, W_q) # Shape: (batch_size, seq_len, d_q)

K = torch.matmul(X, W_k) # Shape: (batch_size, seq_len, d_q)

V = torch.matmul(X, W_v) # Shape: (batch_size, seq_len, d_v)

# Compute scaled dot-product attention

d_q = K.shape[-1] # Dimensionality of queries

E = torch.matmul(Q, K.transpose(-2, -1)) / (d_q ** 0.5) # (batch_size, seq_len, seq_len)

A = F.softmax(E, dim=-1) # Normalize attention weights

Y = torch.matmul(A, V) # Compute final outputs, shape: (batch_size, seq_len, d_v)

return Y, A # Returning attention outputs and weights

# Example batch processing

batch_size, seq_len, d_x, d_q, d_v = 2, 5, 32, 64, 64

X = torch.randn(batch_size, seq_len, d_x) # Random input batch

W_q, W_k, W_v = torch.randn(d_x, d_q), torch.randn(d_x, d_q), torch.randn(d_x, d_v)

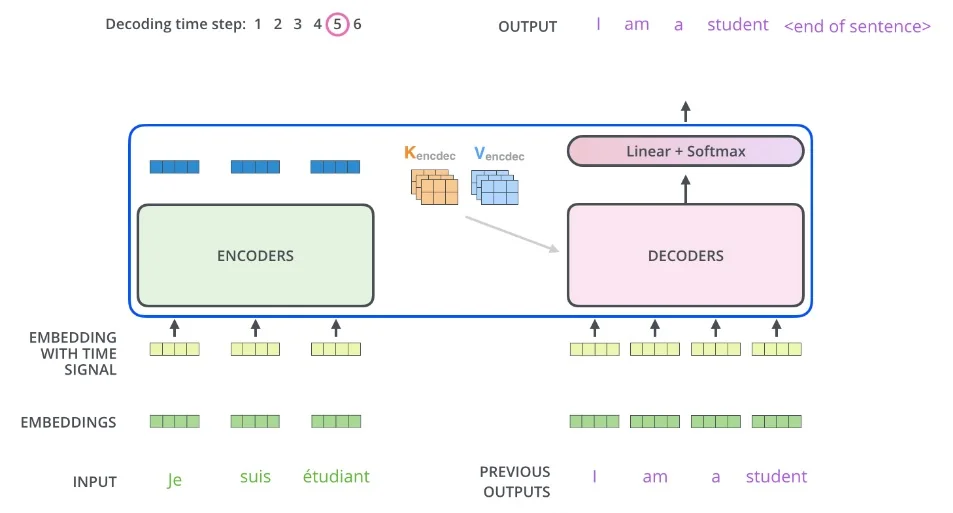

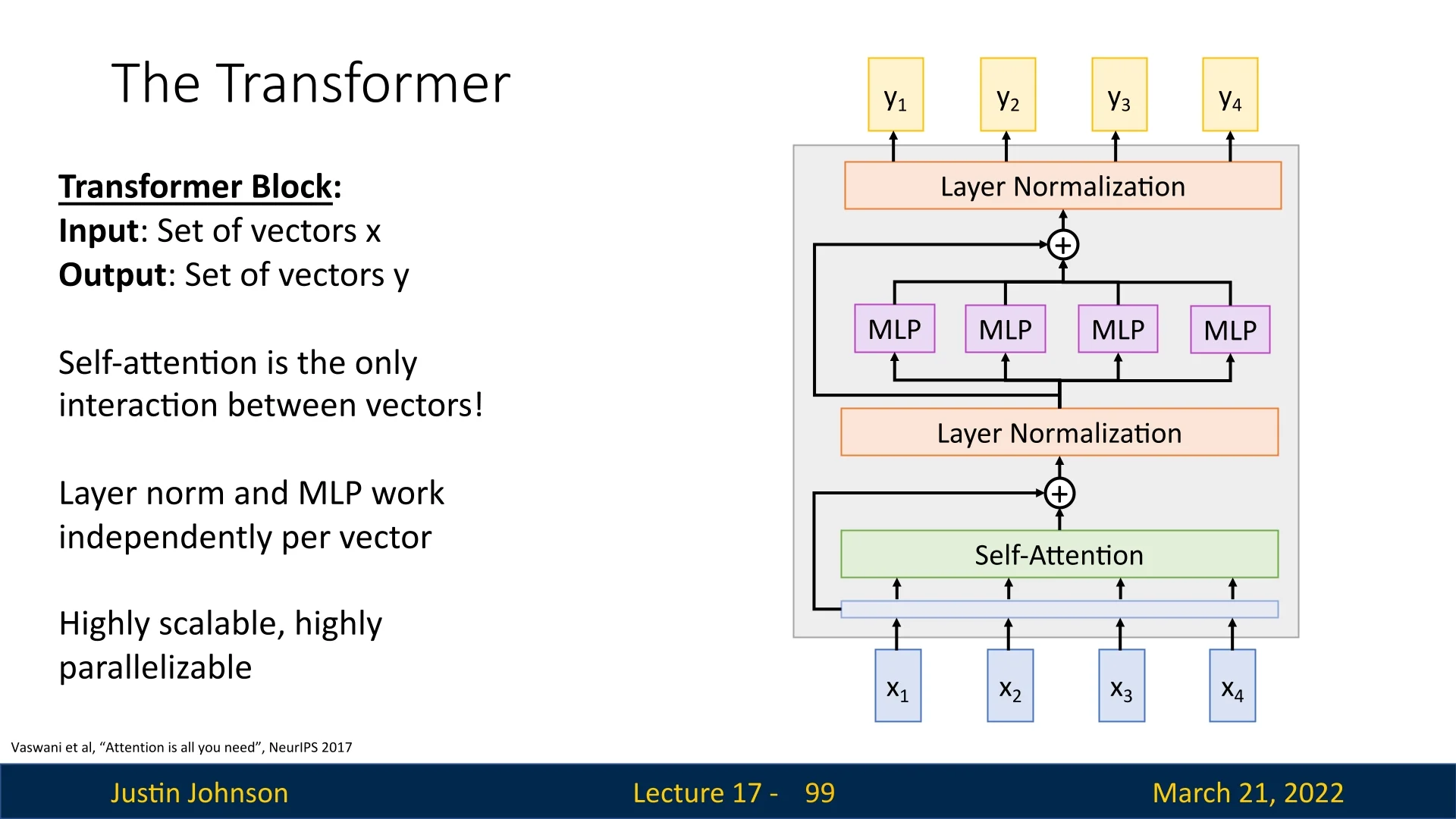

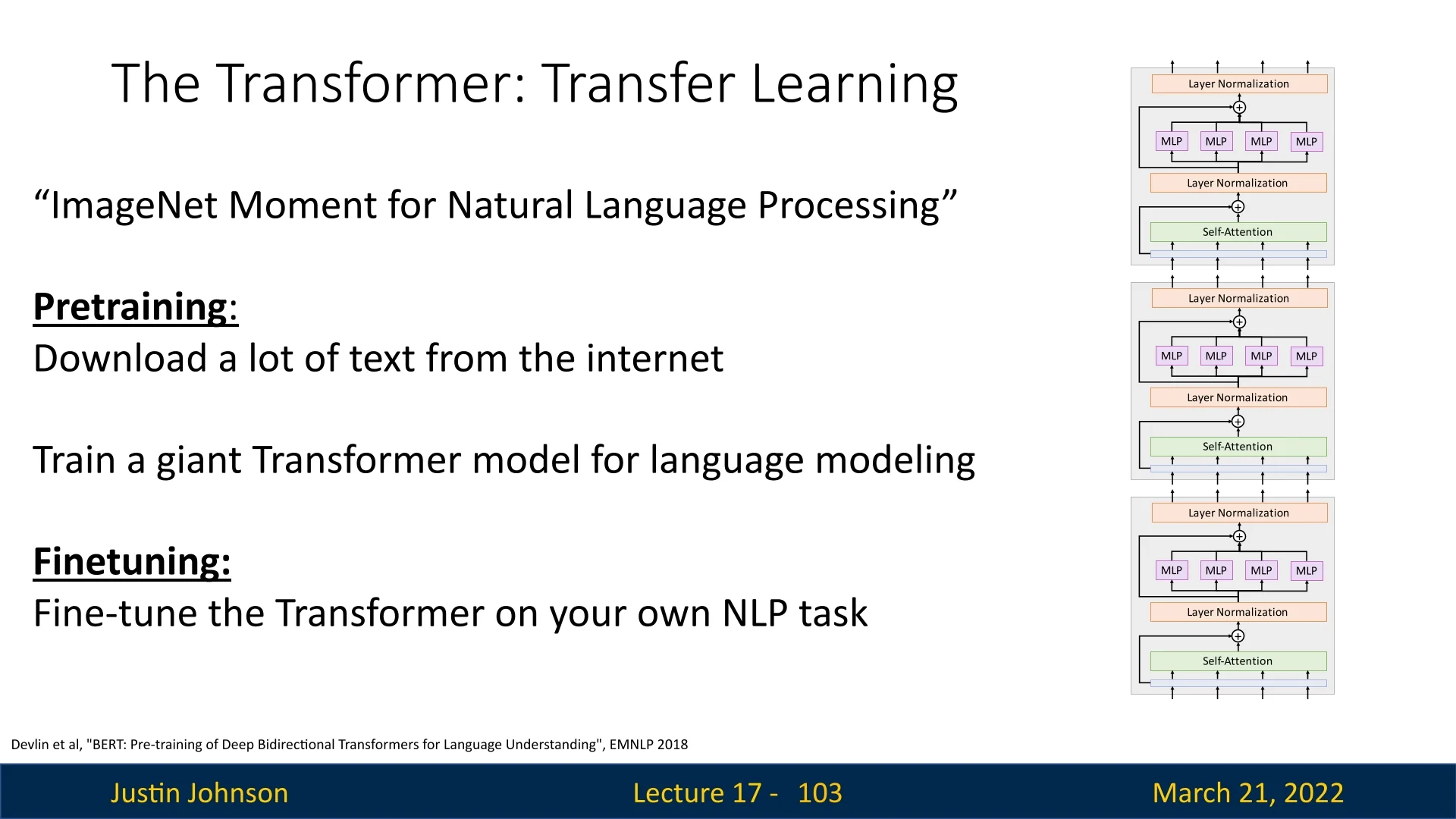

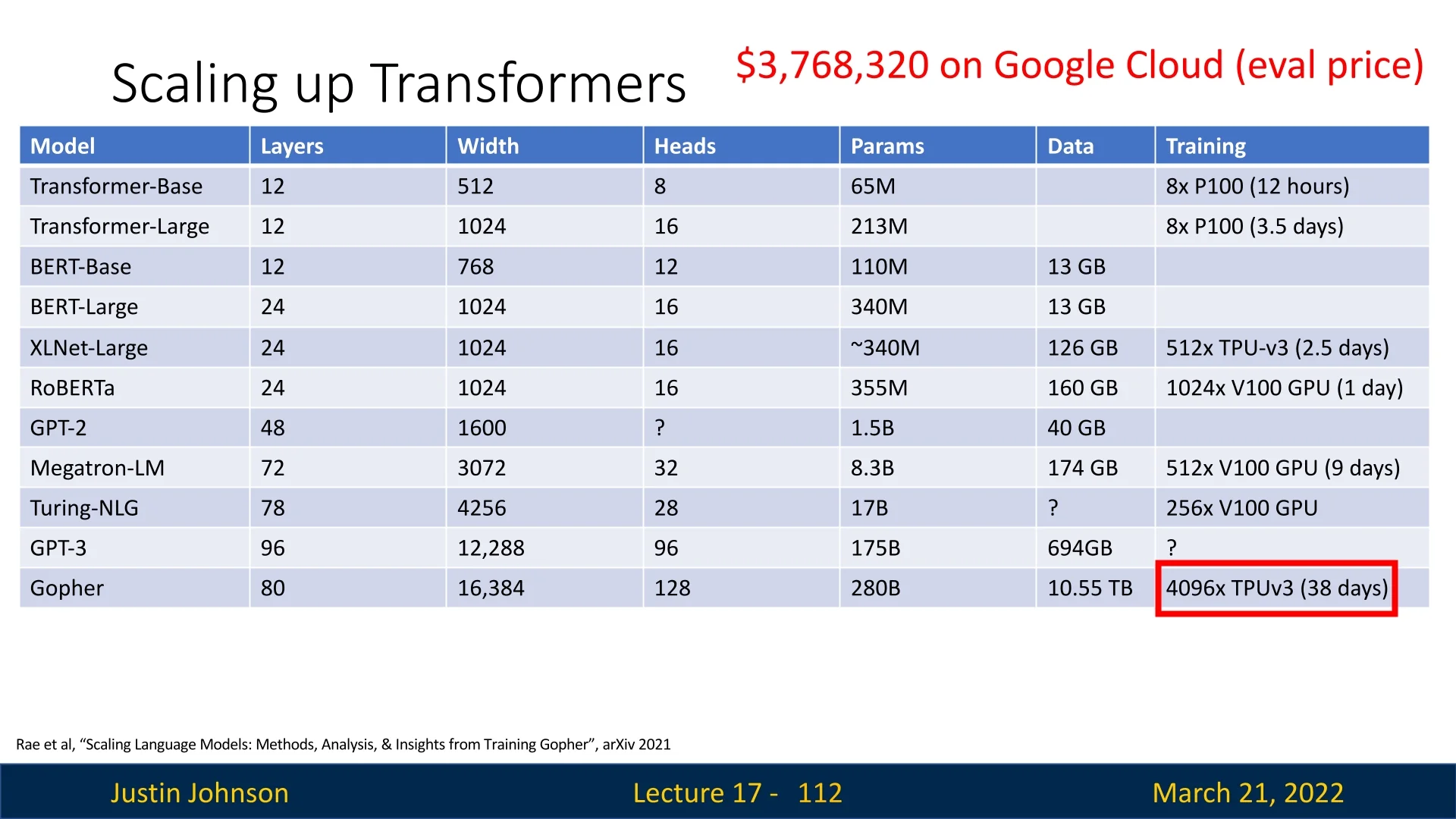

Y, A = self_attention(X, W_q, W_k, W_v) # Parallelized computation