Lecture 16: Recurrent Networks

16.1 Introduction to Recurrent Neural Networks (RNNs)

Many real-world problems involve sequential data, where information is not independent but instead follows a temporal or ordered structure. Traditional neural networks, such as fully connected (FC) networks and convolutional neural networks (CNNs), assume that inputs are independent of each other, making them ineffective for tasks where past information influences future outcomes. Recurrent Neural Networks (RNNs) are specifically designed to handle such problems by incorporating memory through recurrent connections, enabling them to process sequences of variable length.

16.1.1 Why Study Sequential Models?

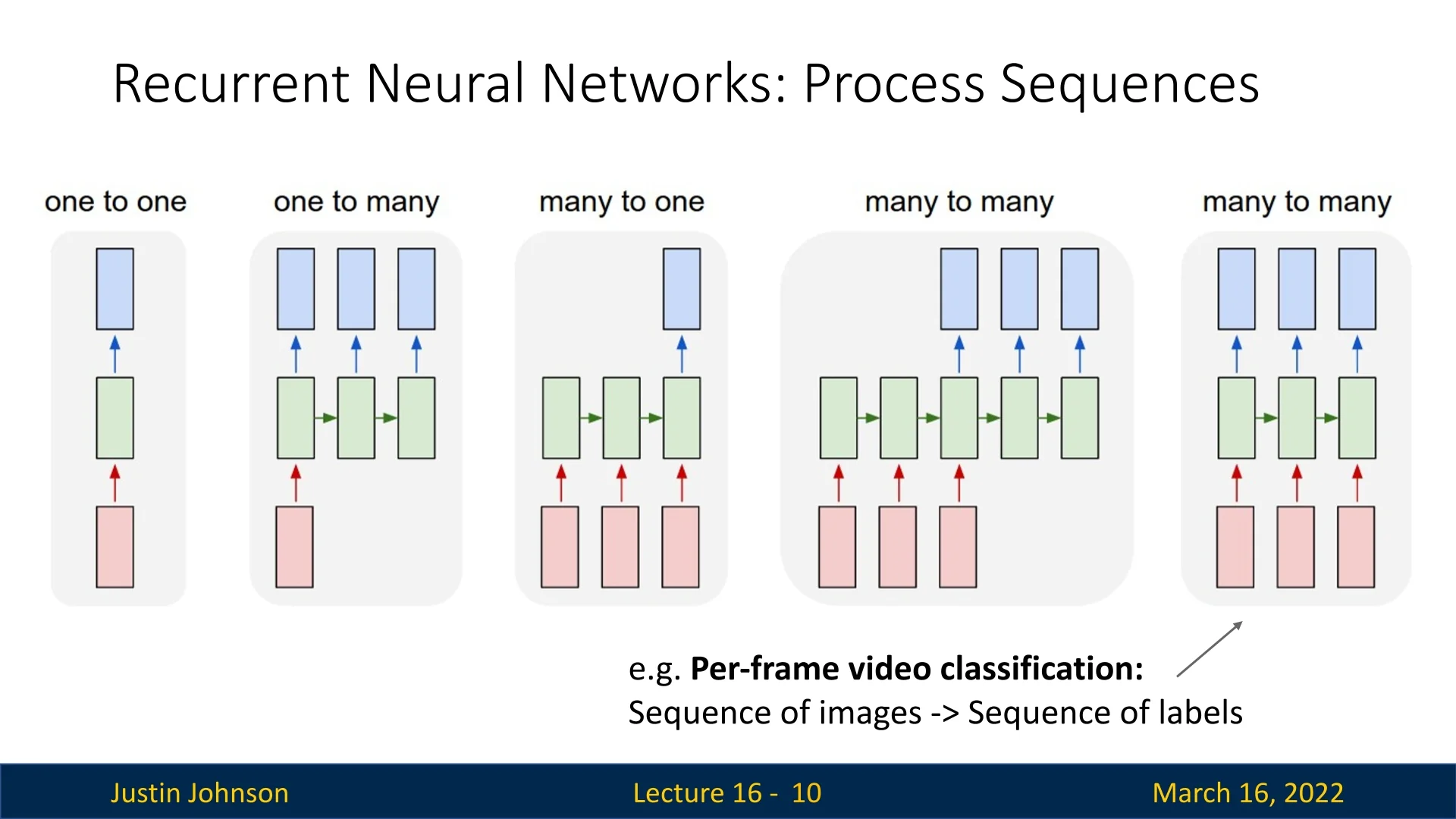

Sequential modeling is crucial for various applications where past observations influence future predictions. Without specialized architectures, we cannot effectively solve tasks such as:

- Image Captioning (One-to-Many): Generating a sequence of words to describe an image requires understanding both spatial and sequential dependencies [648].

- Video Classification (Many-to-One): Classifying an action or event in a video requires processing frames as a sequence, capturing motion and context [277].

- Machine Translation (Many-to-Many): Translating sentences from one language to another requires modeling sequential dependencies across different languages [595].

- Time-Series Forecasting: Financial market predictions, weather forecasting, and power grid monitoring depend on capturing trends and long-term dependencies.

- Sequence Labeling: Named entity recognition, part-of-speech tagging, and handwriting recognition require assigning labels to elements of a sequence while maintaining context.

- Autoregressive Generation: Music composition, text generation, and speech synthesis involve generating outputs where each step depends on previous ones.

16.1.2 RNNs as a General-Purpose Sequence Model

Unlike traditional models that require a fixed input size, RNNs provide a unified architecture for handling sequences of arbitrary length. This flexibility allows RNNs to process short and long sequences using the same model, making them suitable for tasks ranging from speech processing to video analysis.

Although RNNs are designed for sequential data, they can also be applied to non-sequential tasks by processing an input sequentially. For instance, instead of analyzing an image in a single forward pass, an RNN can take a series of glimpses and make a decision based on accumulated information.

16.1.3 RNNs for Visual Attention and Image Generation

Recurrent Neural Networks are traditionally used for sequence modeling, but they can also be leveraged to process images in a sequential manner. Two notable applications include:

- Visual Attention Mechanisms: Instead of processing an entire image at once, an RNN can take a series of glimpses, deciding where to focus next based on previous observations.

- Autoregressive Image Generation: Instead of generating an image in one step, an RNN can incrementally refine an output, painting it sequentially over time.

Visual Attention: Sequential Image Processing

A compelling use case of RNNs in non-sequential tasks is visual attention, where an RNN dynamically determines where to focus within an image. This approach is exemplified by [20], which uses an RNN to sequentially analyze different parts of an image before making a classification decision.

- At each timestep, the network decides which region of the image to examine based on all previously acquired information.

- This process continues over multiple timesteps, accumulating evidence before making a final classification decision.

- A practical example is using RNNs for MNIST digit classification, where instead of viewing the full image at once, the network sequentially attends to different regions before determining the digit.

Autoregressive Image Generation with RNNs

Another fascinating application of RNNs is in image generation, as demonstrated by [187]. Instead of generating an entire image in one step, the model incrementally constructs it over multiple timesteps:

- The model ”draws” small portions of the image sequentially, refining details at each step.

- At each timestep, the RNN decides where to modify the canvas and what details to add.

- This mimics the human drawing process, where an artist sequentially sketches and refines different parts of an image.

The DRAW model [187] exemplifies this approach, using recurrent layers to iteratively generate and improve an image.

These examples illustrate that RNNs are not limited to temporal sequences—they can also be used in spatially structured tasks by treating an image as a sequence of observations or drawing steps.

16.1.4 Limitations of Traditional Neural Networks for Sequential Data

The inability of FC networks and CNNs to capture temporal dependencies leads to major limitations when dealing with sequential tasks. The following table highlights the key differences:

| Characteristic | FC Networks | CNNs | RNNs |

|---|---|---|---|

| Handles Sequential Data | No | No | Yes |

| Shares Parameters Across Time | No | No | Yes |

| Captures Long-Term Dependencies | No | No | Partially (with LSTMs/GRUs) |

| Suitable for Variable-Length Input | No | Partially (1D CNNs) | Yes |

16.1.5 Overview of Recurrent Neural Networks (RNNs) and Their Evolution

Many tasks in modern machine learning involve sequential or time-dependent data, where the observation at time \(t\) depends on the history of inputs \(x_1,\dots ,x_{t-1}\). Classical feedforward networks (fully connected or convolutional) typically assume that inputs are independent and identically distributed (i.i.d.), so they struggle to model such temporal dependencies. Recurrent Neural Networks (RNNs) address this limitation by introducing a hidden state that is passed from one timestep to the next, allowing the model to accumulate information over sequences of (in principle) arbitrary length.

Enrichment 16.1.5.1: How to read this overview

This subsection is intentionally a high-level roadmap of sequence modeling architectures, from basic recurrence to modern attention-based models. Our goal here is to explain why each step in this evolution was introduced and how it addresses the limitations of the previous step. We only sketch the core ideas and equations; rigorous derivations (including Backpropagation Through Time, gating equations, and attention mechanisms), implementation details, and additional examples will follow in dedicated subsections later in this chapter and in the subsequent chapter on Transformers.

RNN progression: from vanilla units to gated architectures

Vanilla RNNs: the basic recurrent idea The simplest recurrent architecture, often called an Elman RNN, maintains a hidden state \(\mathbf {h}_t\) that is updated at each timestep \(t\) via

\begin {equation} \mathbf {h}_t = \tanh \Bigl ( \mathbf {W}_{hh}\,\mathbf {h}_{t-1} + \mathbf {W}_{xh}\,\mathbf {x}_t + \mathbf {b} \Bigr ), \label {eq:chapter16_vanilla_rnn_recurrence} \end {equation}

where \(\mathbf {x}_t\) is the input at time \(t\), \(\mathbf {h}_t\) is the hidden state, and the same parameters \(\mathbf {W}_{hh}\), \(\mathbf {W}_{xh}\), and \(\mathbf {b}\) are reused for all timesteps. This weight sharing is what gives RNNs their ability to generalize across sequence length.

However, as we will see in detail when we derive Backpropagation Through Time (BPTT), repeatedly multiplying by \(\mathbf {W}_{hh}\) causes gradients to either shrink to zero or explode in magnitude over long sequences. This is the classical vanishing/exploding gradient problem [36, 471]. In practice:

- Gradients often vanish, making it hard for vanilla RNNs to learn dependencies beyond roughly 10–50 timesteps.

- Gradients can also explode when \(\|\mathbf {W}_{hh}\|\) is too large or activations allow unbounded growth, which is typically mitigated with gradient clipping.

Later in this chapter we will revisit Vanilla RNN and formally analyze why these issues arise and how techniques such as truncated BPTT partially alleviate them.

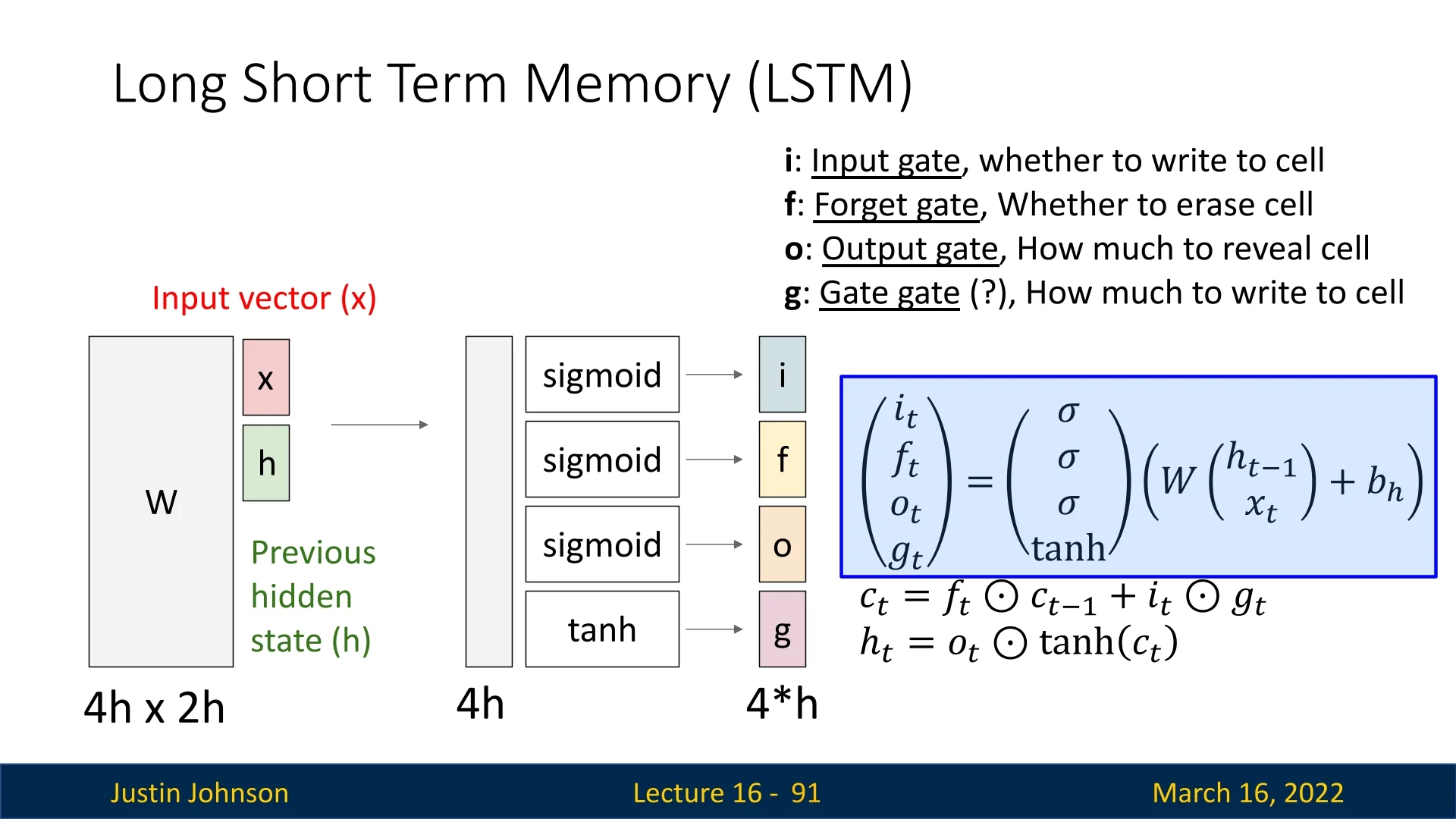

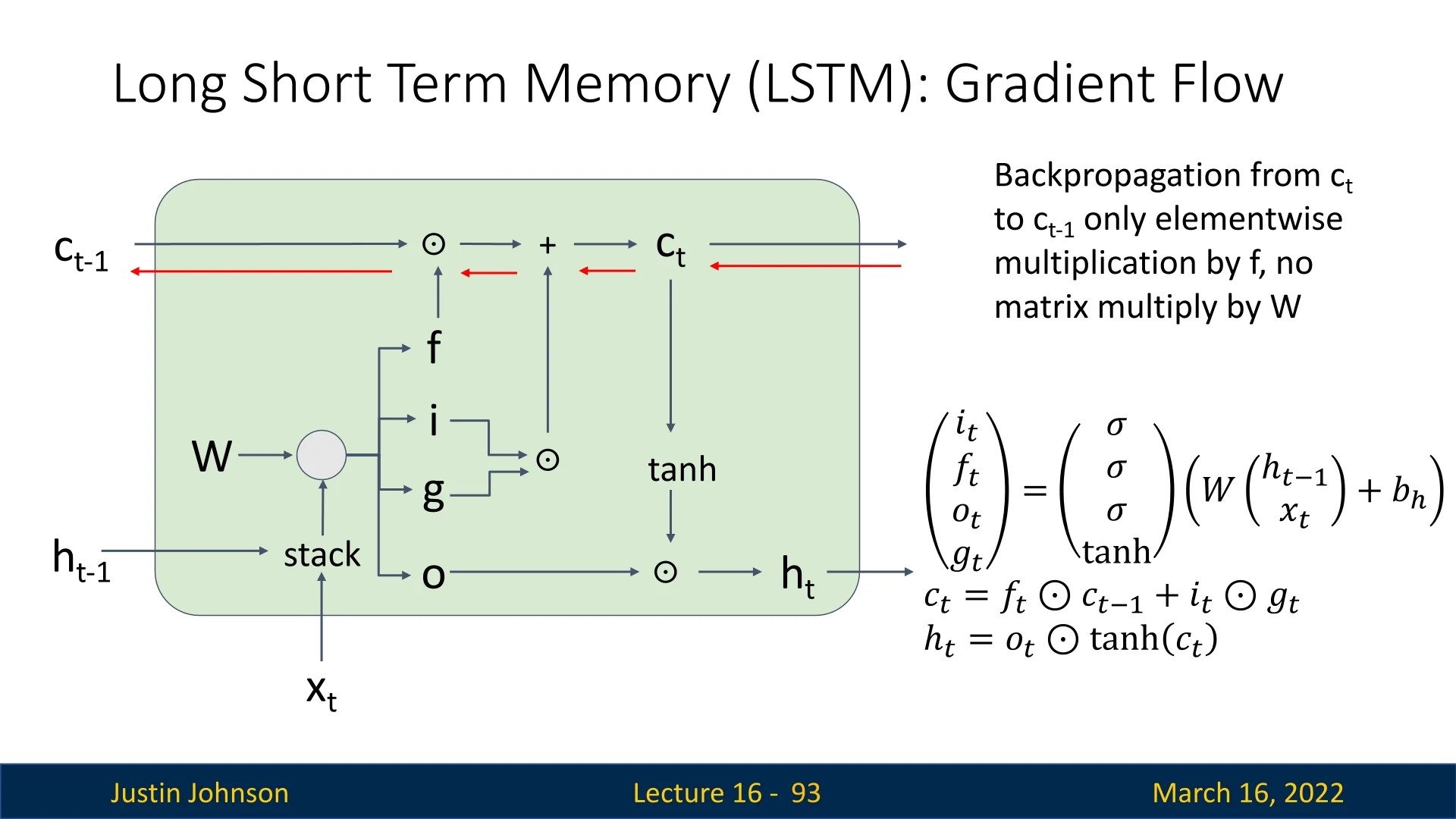

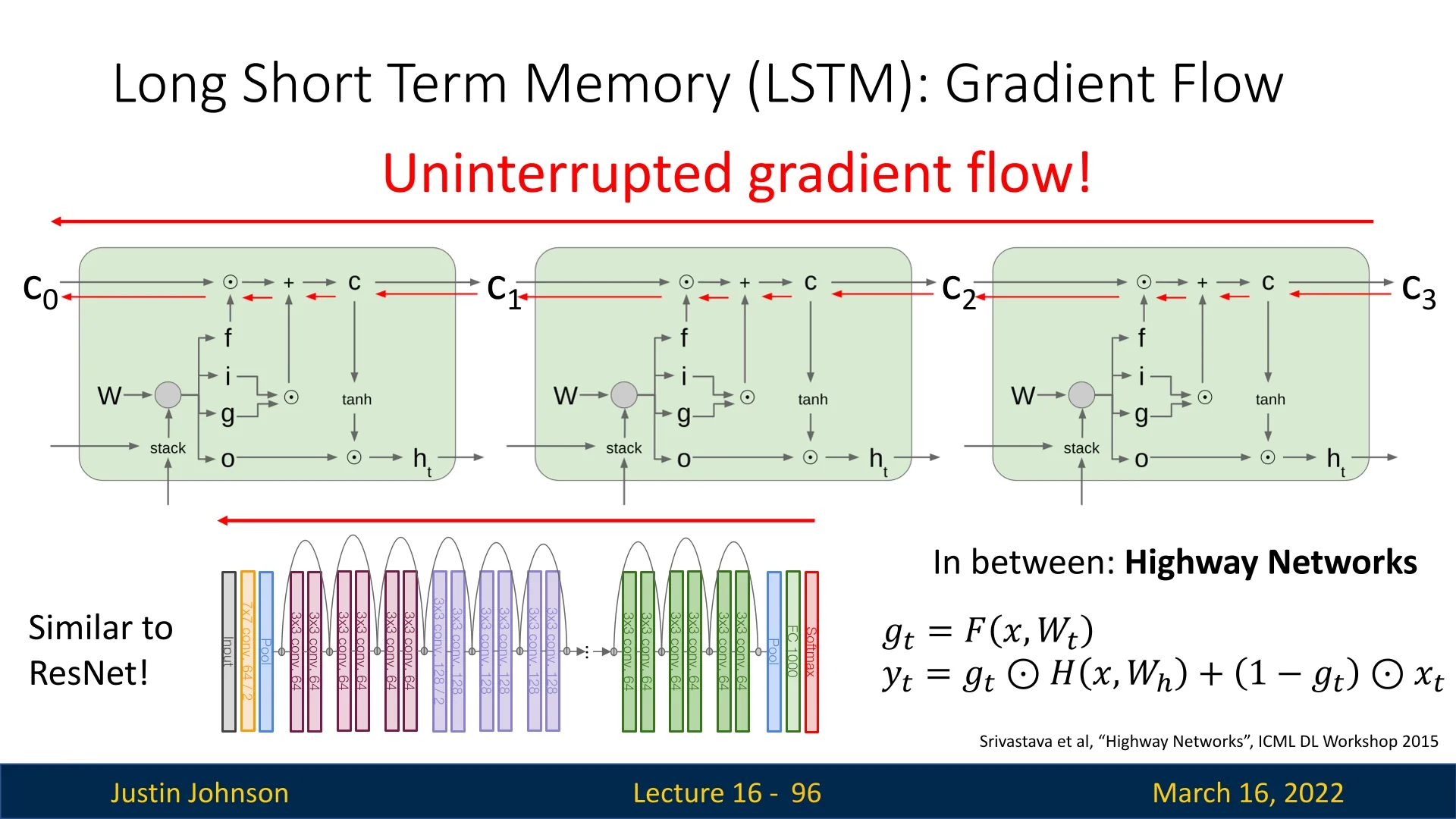

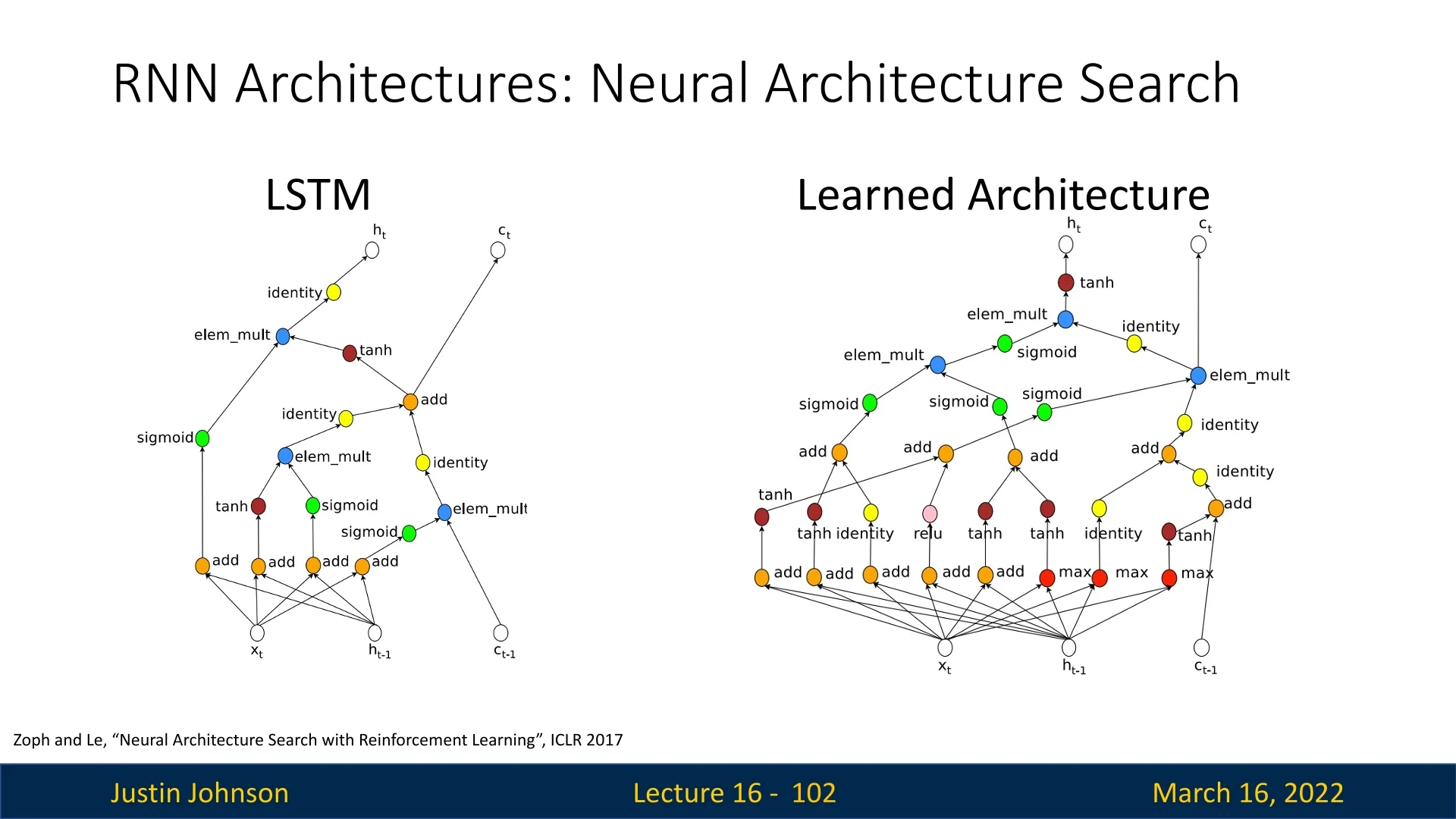

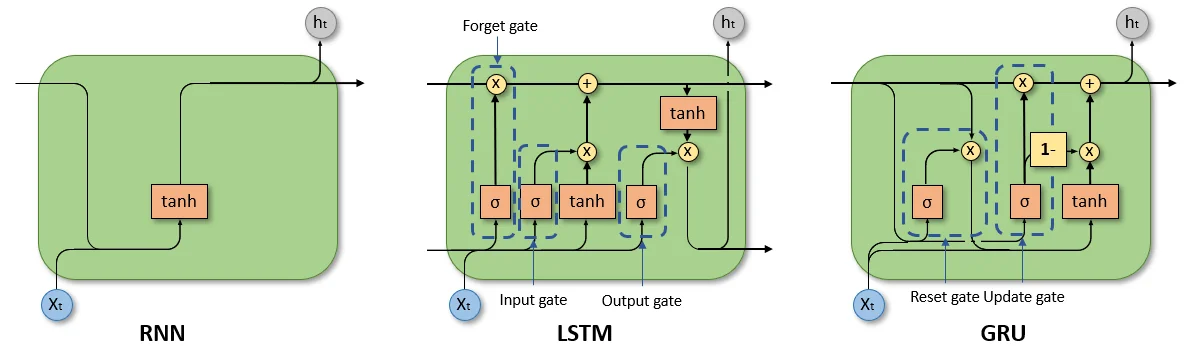

LSTMs: gating and additive memory for long-term dependencies To handle much longer temporal dependencies (hundreds of steps), Long Short-Term Memory (LSTM) networks [227] modify the recurrence in two crucial ways:

- 1.

- They maintain a separate cell state that is updated additively, creating a path where information and gradients can flow over many timesteps with minimal attenuation.

- 2.

- They introduce gates (input, forget, and output) that learn when to write new information to the cell state, when to erase old information, and when to expose the cell state to the hidden state.

Intuitively, the LSTM turns the hidden dynamics into a differentiable memory system that can learn to “remember” and “forget” over long horizons. This largely solves the vanishing gradient problem for many practical sequence lengths and made LSTMs the dominant architecture for years in speech recognition, language modeling, and other temporal tasks. The trade-off is increased complexity: each LSTM cell contains several interacting affine transformations and gates, increasing parameter count and compute cost relative to vanilla RNNs.

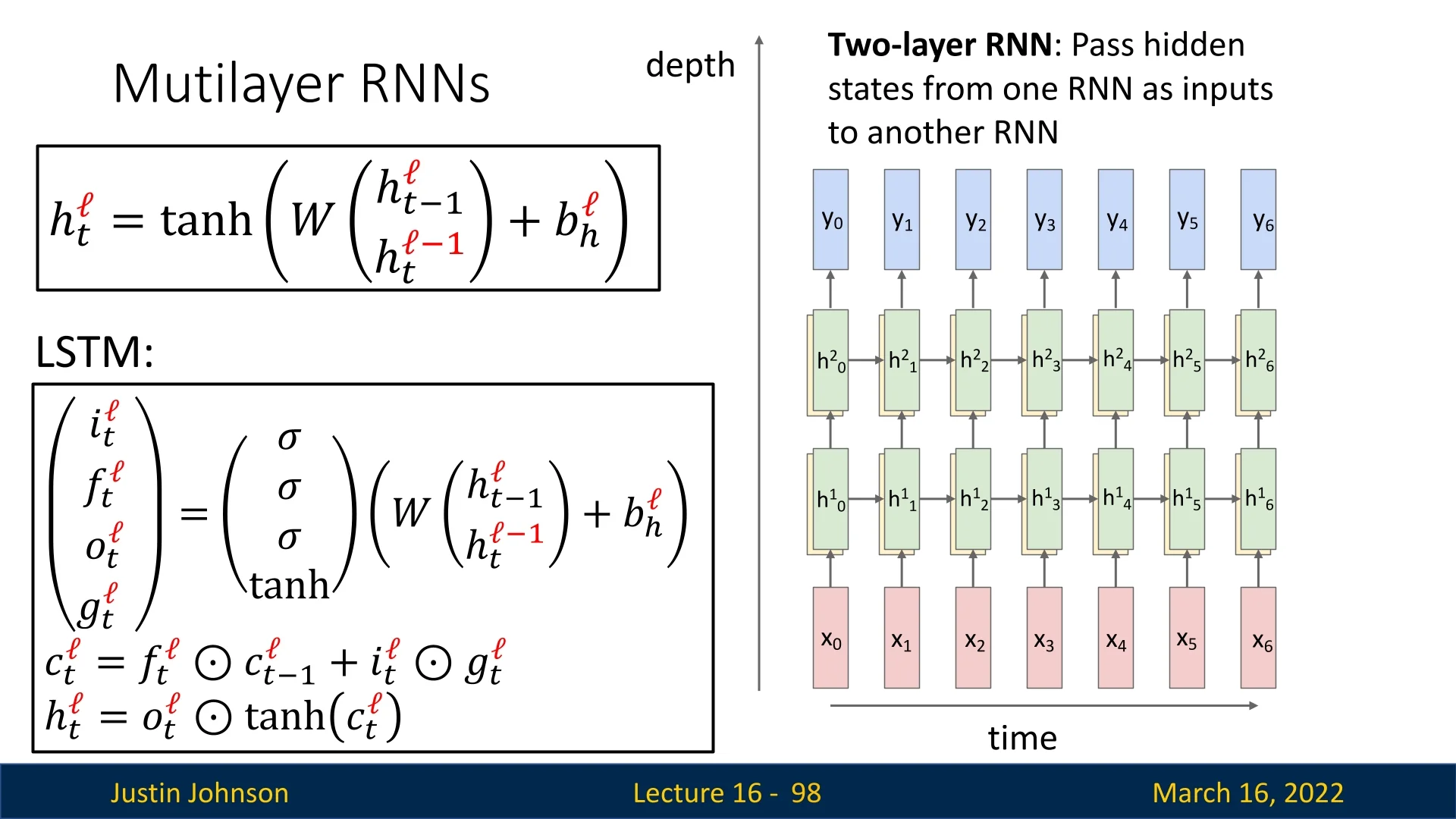

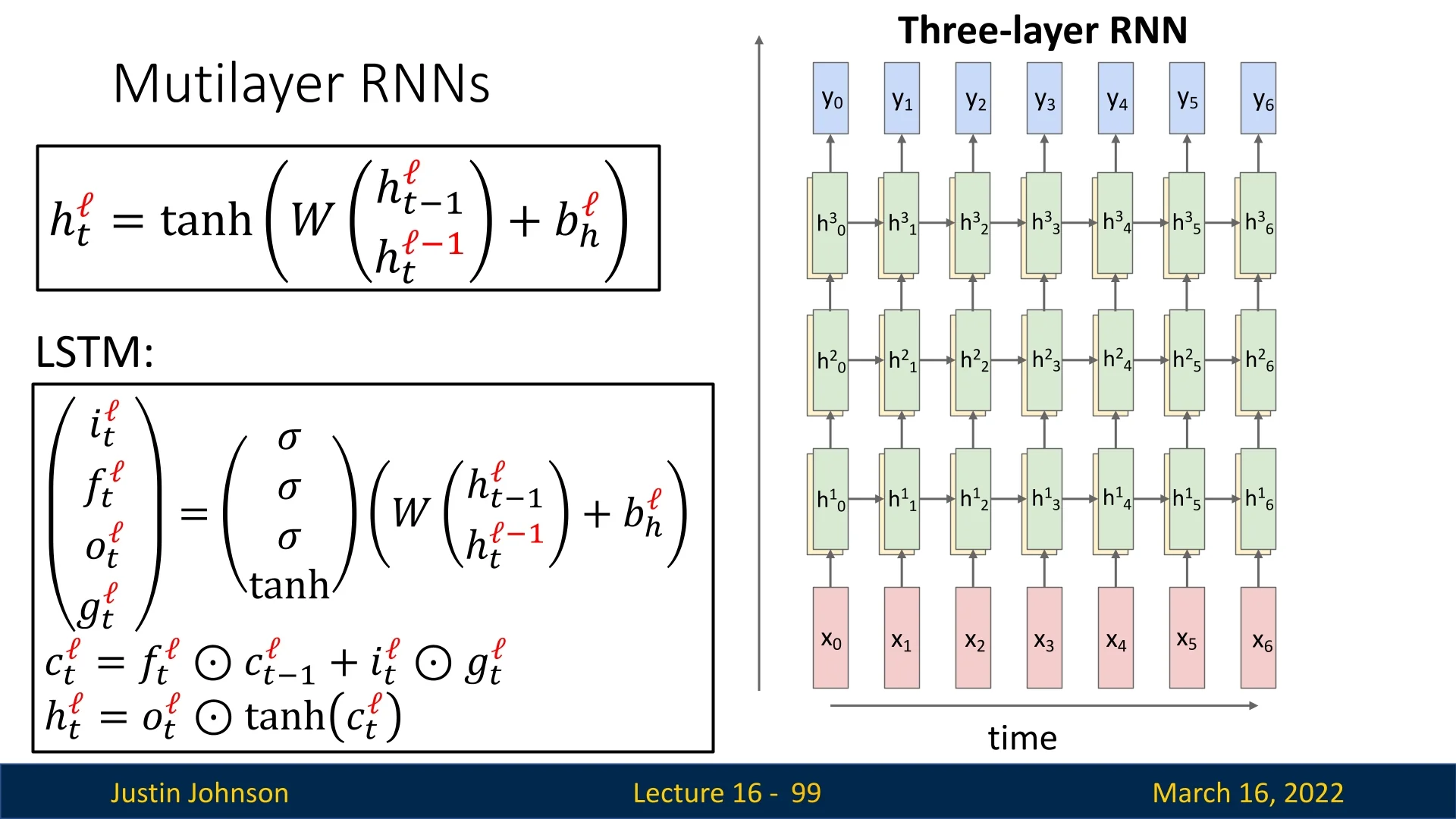

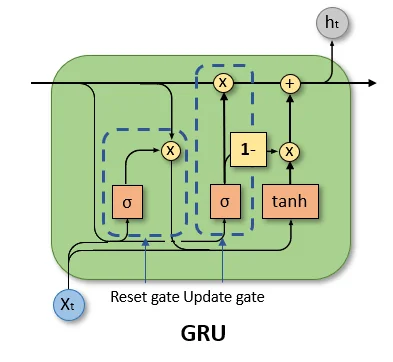

GRUs: simplifying the LSTM while keeping most benefits Gated Recurrent Units (GRUs) [105] were proposed as a streamlined alternative to LSTMs. GRUs merge the LSTM’s input and forget gates into a single update gate and remove the explicit cell state, directly updating the hidden state instead. This yields:

- Fewer parameters and simpler computation compared to LSTMs.

- Empirically similar performance to LSTMs on many language and sequence modeling benchmarks, especially for moderate sequence lengths.

From an evolutionary perspective, GRUs are motivated by a design question: how much of the LSTM’s complexity is truly necessary to combat vanishing gradients? GRUs show that a simpler gating mechanism can capture much of the benefit, which is attractive in resource-limited or latency-sensitive settings.

Bidirectional RNNs: using both past and future Vanilla RNNs, LSTMs, and GRUs as defined above are causal: at time \(t\), the model only has access to the past and current inputs \((x_1,\dots ,x_t)\). For many applications, however, the entire sequence is available at once. Bidirectional RNNs address this by running one RNN forward in time and another backward, then combining their hidden states (e.g., by concatenation) at each timestep.

This evolution is motivated by disambiguation through context: for the token “bank” in the sentence “He went to the bank to fish”, a backward RNN that sees “to fish” can help decide that “bank” refers to the side of a river rather than a financial institution. Bidirectional RNNs therefore excel in tasks like text classification, named entity recognition, and offline speech transcription, but they are not suitable for real-time streaming applications where future inputs are not yet observed.

Motivation toward Transformers and attention-based models

The sequential bottleneck and fixed-size state Despite the success of LSTMs, GRUs, and bidirectional variants, all RNN-based models share two structural limitations:

- 1.

- Sequential computation across time: To compute \(\mathbf {h}_t\), we must first compute \(\mathbf {h}_{t-1}\). This dependency chain prevents parallelization across timesteps, making training and inference less efficient on modern accelerators for very long sequences.

- 2.

- Fixed-size hidden state: The hidden state \(\mathbf {h}_t\) is a vector of fixed dimension that must compress all past information. For extremely long contexts (thousands of tokens), this global bottleneck can limit the model’s capacity to selectively remember detailed information.

These limitations motivated architectures that could (i) process all positions in a sequence in parallel, and (ii) dynamically allocate capacity by letting each position attend to the most relevant parts of the sequence.

Transformers: replacing recurrence with self-attention The Transformer architecture [644] removes recurrence altogether and instead uses self-attention layers: each token computes weighted combinations of all other tokens in the sequence. At a high level:

- All timesteps can be processed in parallel within a layer, dramatically improving training efficiency on GPUs and TPUs.

- Long-range dependencies are handled naturally, since attention weights can connect arbitrarily distant positions without repeatedly multiplying by a transition matrix.

However, this shift introduces new trade-offs:

- The memory and compute cost of self-attention scales quadratically as \(O(T^2)\) with sequence length \(T\), which becomes challenging for very long inputs.

- For autoregressive generation (e.g., language modeling), outputs are still typically produced token by token, and each new token requires computing attention over the growing context. This can be slow for extremely long outputs, although techniques such as speculative decoding [729] and non-autoregressive models [192] aim to alleviate this by partially parallelizing generation or reducing the number of decoding steps.

Later, when we discuss attention mechanisms in depth, we will connect these design decisions back to the limitations of RNNs described above.

Roadmap for the rest of the chapter

The remainder of this chapter builds on this evolutionary story and revisits each model family in more depth:

- 1.

- Vanilla RNNs and BPTT: We begin by formalizing vanilla RNNs, deriving Backpropagation Through Time, and precisely characterizing why and when vanishing and exploding gradients occur.

- 2.

- LSTMs and GRUs: We then introduce LSTMs and GRUs from first principles, writing out their gating equations and explaining how additive memory paths and learned gates mitigate vanishing gradients, along with their remaining limitations (sequential computation, fixed-size state).

- 3.

- Beyond RNNs: Finally, we use the insights from gated RNNs to motivate attention-based architectures and Transformers, which replace recurrent hidden states with self-attention, enabling highly parallel training and more flexible modeling of long-range dependencies. Detailed coverage of Transformer variants and attention mechanisms appears in the following chapter.

By first presenting this high-level overview and then returning to each model class in detail, we aim to make the connections between architectures explicit: each new design (gating, bidirectionality, attention) can be understood as an attempt to systematically overcome the optimization and representation bottlenecks of its predecessors.

16.2 Recurrent Neural Networks (RNNs) - How They Work

Recurrent Neural Networks (RNNs) process sequential data by maintaining an internal state that evolves over time. Unlike feedforward neural networks that process inputs independently, RNNs retain memory through recurrent connections, enabling them to model dependencies across time steps.

At each timestep \( t \), a new input \( x_t \) is provided to the RNN. The network updates its hidden state \( h_t \) based on both the current input and the previous hidden state \( h_{t-1} \), producing an output \( y_t \): \[ h_t = f_W(h_{t-1}, x_t), \] where \( f_W \) is the recurrence function, typically a non-linear function such as \(\tanh \). A key property of RNNs is that the same function and parameters are used at every time step. The weights \( W \) are shared across all time steps, allowing the model to process sequences of arbitrary length.

Expanding this, a simple or ”vanilla” RNN is formally defined as: \[ h_t = \tanh (W_{hh}h_{t-1} + W_{xh}x_t + b), \] \[ y_t = W_{hy}h_t. \] This architecture, sometimes called a vanilla RNN or Elman RNN after Prof. Jeffrey Elman, efficiently processes sequences by applying the same weight matrices repeatedly. Note: we’ll often omit the bias from the notation for simplicity, but don’t forget it when you implement RNNs.

16.2.1 RNN Computational Graph

Since RNNs process sequences iteratively, we can represent their computation graph by unrolling the network over time. The computational graph depends on how inputs and outputs are structured, leading to different sequence processing scenarios.

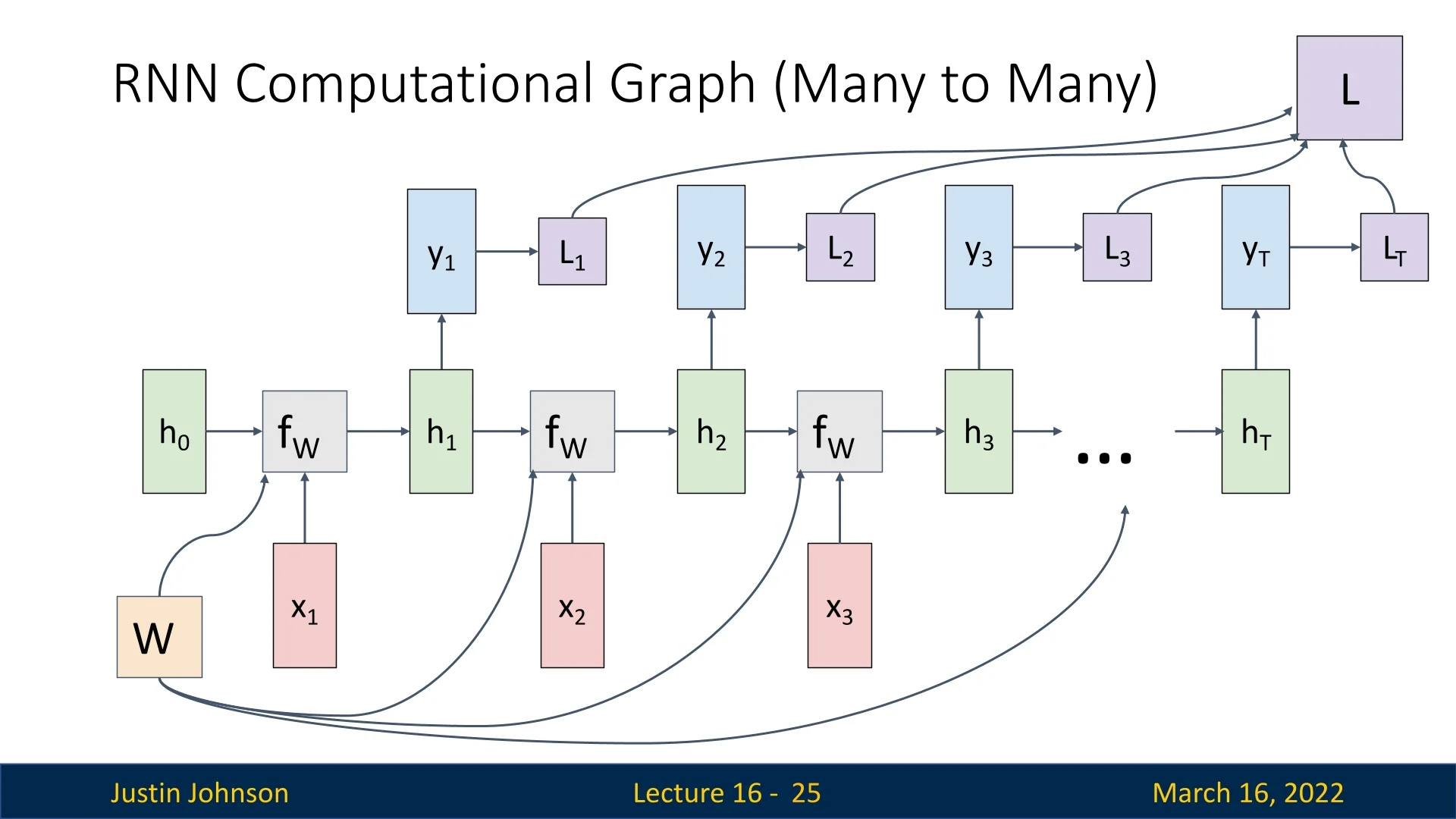

Many-to-Many

In a many-to-many setup, an RNN processes a sequence of inputs and generates a sequence of outputs. Each hidden state depends on the previous state and the current input: \[ h_t = f_W(h_{t-1}, x_t), \quad y_t = W_{hy}h_t. \]

The initial hidden state \( h_0 \) is typically initialized as a zero vector or sampled from a normal distribution. However, in some architectures, \( h_0 \) is treated as a learnable parameter, allowing it to be optimized during training. This can be beneficial when early time steps contain little useful information.

The network processes each input sequentially:

- \( x_1 \) is combined with \( h_0 \) using \( f_W \), producing \( h_1 \) and output \( y_1 \).

- \( h_1 \) is used with \( x_2 \) to compute \( h_2 \), which generates \( y_2 \).

- This process repeats until reaching the final time step \( T \).

Since the same weight matrix is reused at every time step, the computational graph is unrolled for as long as the sequence continues. During backpropagation, gradients must be summed across all timesteps (As we use the same node in multiple parts of the computation graph).

Training an RNN involves applying a loss function at each timestep: \[ L = \sum _{t=1}^{T} L_t, \] where \( L_t \) is the loss at time \( t \). The summed loss is then used for backpropagation.

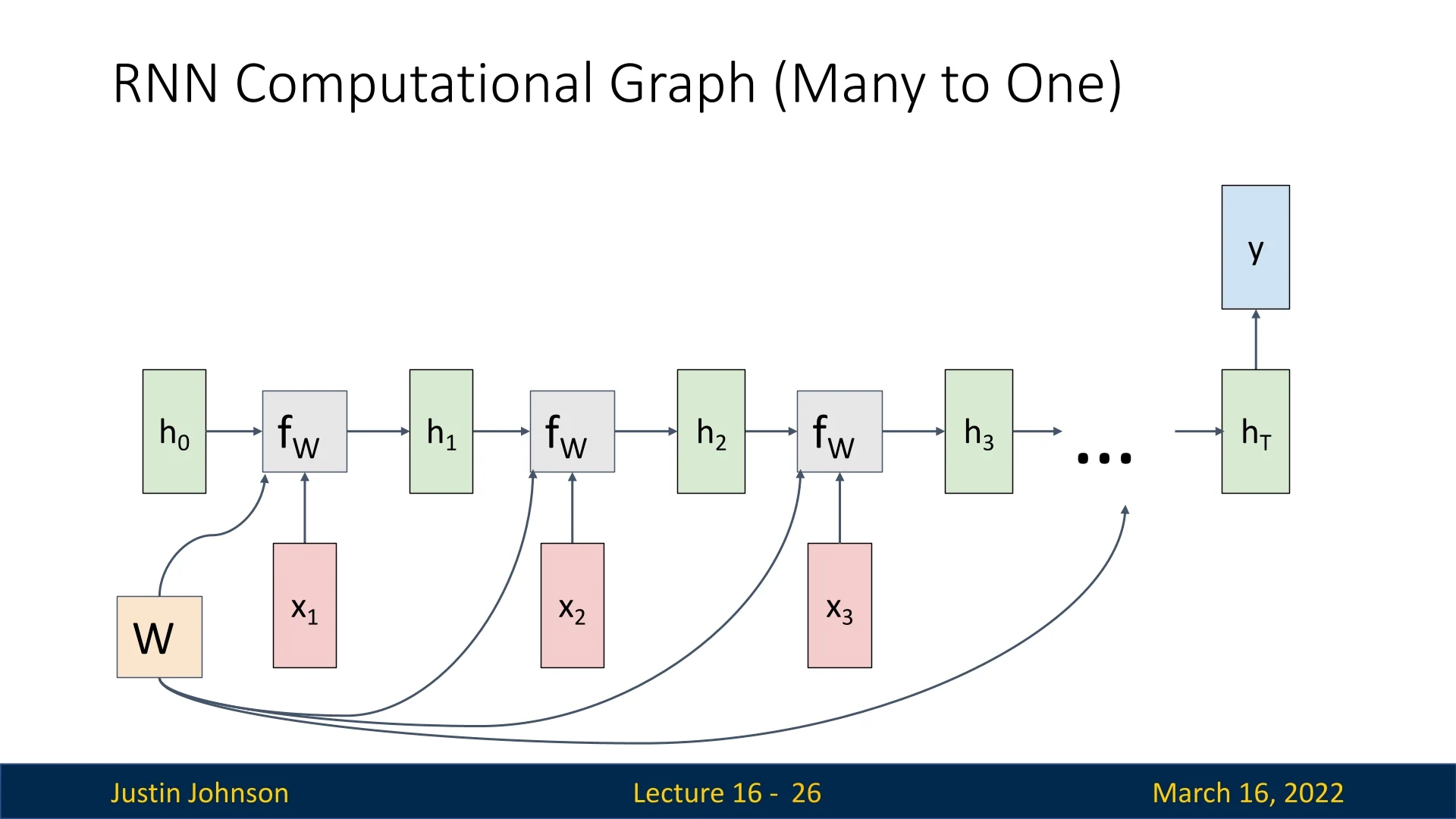

Many-to-One

Some tasks require processing a sequence of inputs but generating only a single output at the final time step. This many-to-one setting is common in applications such as video classification, where the entire sequence is used to predict one label.

Instead of computing outputs at each timestep, the RNN produces a final output at step \( T \), based on the last hidden state \( h_T \): \[ y = W_{hy}h_T. \] The loss function is then computed using only the final output \( y_T \), such as cross-entropy (CE) loss for classification.

This structure is particularly useful when the full context of the sequence is needed to make an informed decision, such as recognizing an action from a video or predicting sentiment from a passage of text.

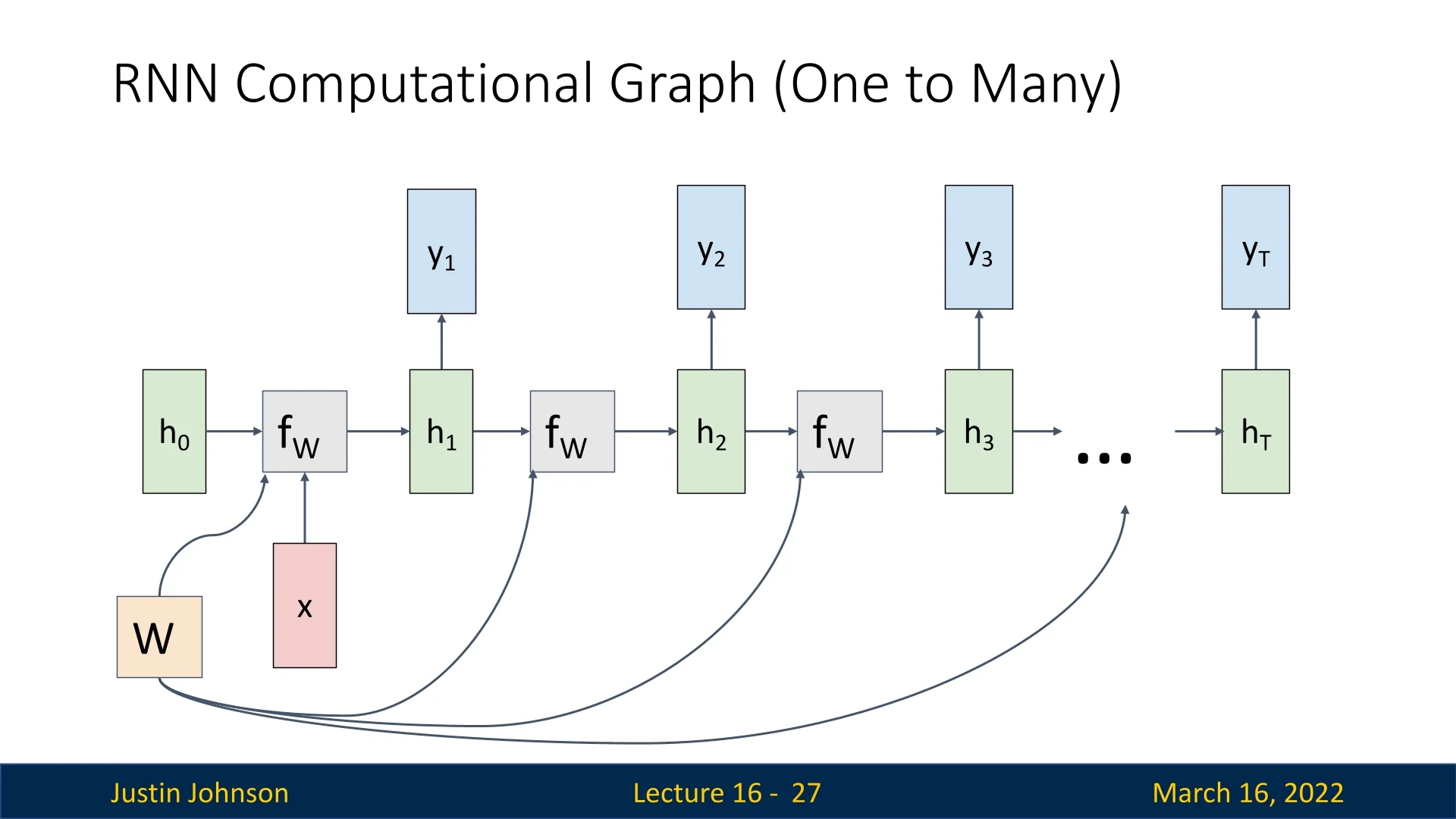

One-to-Many

In contrast, one-to-many architectures take a single input and generate a sequence of outputs. This is commonly used in generative tasks such as image captioning, where the network produces a sequence of words based on an input image.

The RNN is initialized with an input \( x \) and generates outputs iteratively: \[ h_1 = f_W(h_0, x), \quad y_1 = W_{hy}h_1. \] The output \( y_1 \) is then fed as input at the next timestep: \[ h_2 = f_W(h_1, y_1), \quad y_2 = W_{hy}h_2. \]

The sequence continues until a special END token is produced, signaling termination.

The network must learn to balance sequential coherence while ensuring that the generated sequence remains contextually relevant.

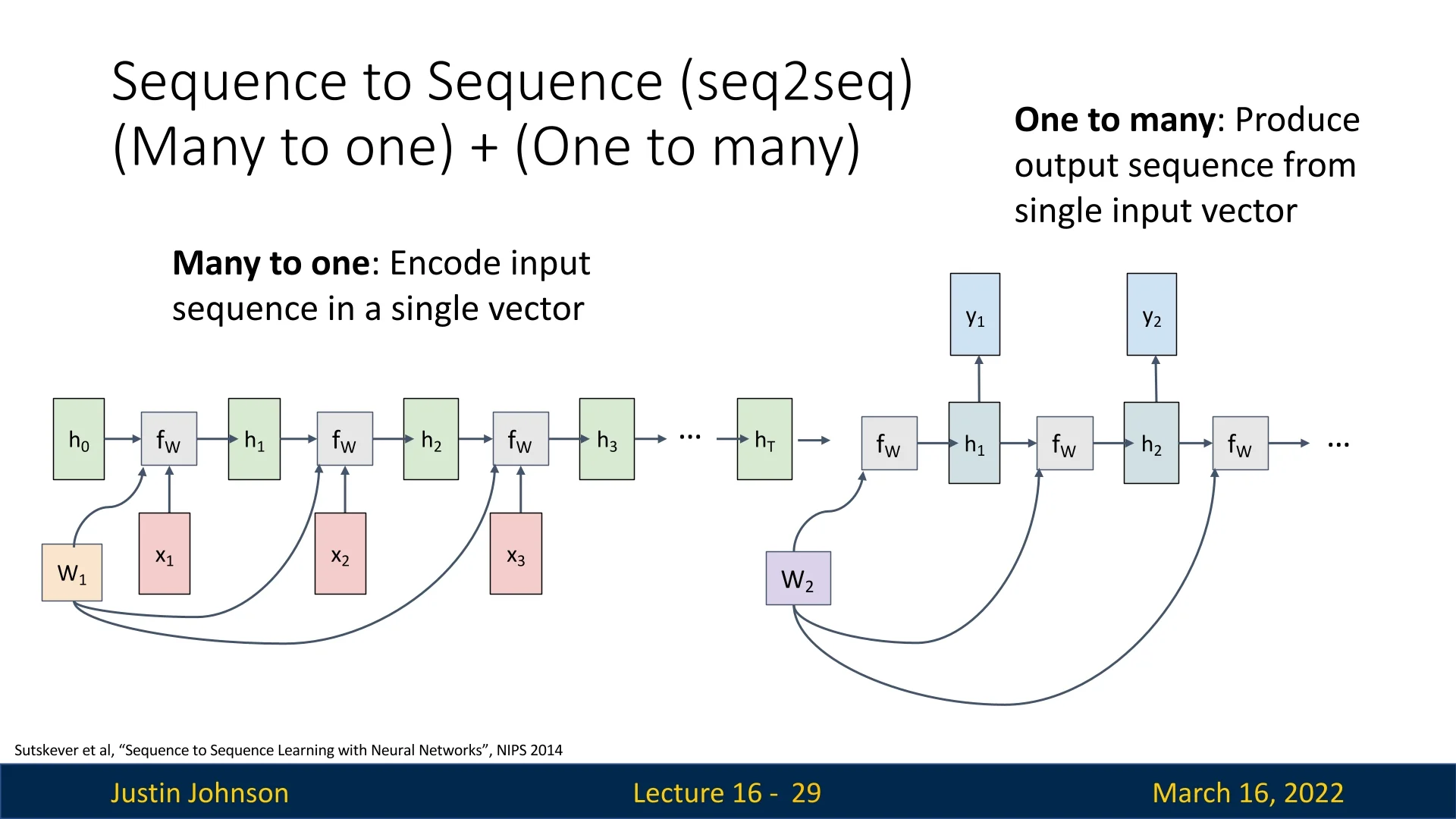

16.2.2 Seq2Seq: Sequence-to-Sequence Learning

Many real-world problems involve mapping an input sequence to an output sequence where the lengths can differ arbitrarily (\(T \neq M\)). A canonical example is machine translation, where an input sentence in one language (e.g. English) is converted into a sentence in another language (e.g. French); the two sentences may have different lengths and word orders.

To handle this setting, Sequence-to-Sequence (Seq2Seq) models [595] use a composite architecture consisting of two Recurrent Neural Networks with separate parameters:

- Encoder (many-to-one). Uses weights \(W_1\) to read the input sequence and compress it into a single vector.

- Decoder (one-to-many). Uses weights \(W_2\) to expand this single vector into an output sequence.

In Justin Johnson’s slides (see the below figure), this is summarized as \[ \mbox{Seq2Seq} = (\mbox{many-to-one}) + (\mbox{one-to-many}). \]

The encoder–decoder architecture

Let the input sequence be \(\mathbf {x} = (x_1,\dots ,x_T)\) and the output sequence be \(\mathbf {y} = (y_1,\dots ,y_M)\).

- 1.

- Encoder (many-to-one, weights \(W_1\)). The encoder RNN processes the input sequence step by step: \begin {equation} h^{\mbox{enc}}_t = f_W\!\bigl (h^{\mbox{enc}}_{t-1}, x_t; W_1\bigr ), \qquad t = 1,\dots ,T, \label {eq:chapter16_seq2seq_encoder} \end {equation} where \(f_W\) denotes the recurrent update (RNN, LSTM, GRU, etc.) and \(h^{\mbox{enc}}_0\) is typically initialized to the zero vector. The final encoder state \begin {equation} h^{\mbox{enc}}_T \end {equation} serves as a fixed-size context vector summarizing the entire input sequence. In the figure, these encoder states are drawn as \(h_0, h_1, \dots , h_T\).

- 2.

- Information transfer (many-to-one \(\to \) one-to-many). The decoder is initialized from the encoder’s final state: \begin {equation} h^{\mbox{dec}}_0 = h^{\mbox{enc}}_T, \label {eq:chapter16_seq2seq_handover} \end {equation} which corresponds to the arrow from \(h_T\) into the first decoder cell in the below figure. This vector \(h^{\mbox{enc}}_T\) is the single “input” to the decoder side.

- 3.

- Decoder (one-to-many, weights \(W_2\)). Starting from \(h^{\mbox{dec}}_0\) and a special <START> token \(y_0\), the decoder generates the output sequence autoregressively using its own parameters \(W_2\): \begin {equation} h^{\mbox{dec}}_t = f_W\!\bigl (h^{\mbox{dec}}_{t-1}, y_{t-1}; W_2\bigr ), \qquad t = 1,\dots ,M, \label {eq:chapter16_seq2seq_decoder_state} \end {equation} \begin {equation} p(y_t \mid y_{<t}, \mathbf {x}) = \mathrm {softmax}\bigl (W_{\mbox{out}} h^{\mbox{dec}}_t\bigr ), \label {eq:chapter16_seq2seq_decoder_output} \end {equation} where \(W_{\mbox{out}}\) maps hidden states to vocabulary logits. During training, we typically use teacher forcing, feeding the ground-truth token \(y_{t-1}\) into (16.5); at inference time, \(y_{t-1}\) is the token predicted at the previous step (e.g. \(\arg \max \) of (16.6)). In the figure, the decoder hidden states \(h^{\mbox{dec}}_1, h^{\mbox{dec}}_2,\dots \) are drawn as \(h_1, h_2,\dots \), each producing outputs \(y_1, y_2,\dots \).

Decoding continues until the model emits a special <END> token, indicating that the output sequence is complete. In this way, a single encoded vector \(h^{\mbox{enc}}_T\) is “unrolled” into an output of arbitrary length \(M\).

Significance and the information bottleneck

Seq2Seq models extend RNNs from fixed-size input/output settings to a general framework for transforming one sequence into another, enabling applications such as:

- Machine translation: Converting text between languages (e.g. English \(\to \) French).

- Speech recognition: Mapping acoustic feature sequences to text.

- Text summarization: Compressing long documents into shorter summaries.

- Conversational AI: Generating responses in dialog systems.

At the same time, the basic encoder–decoder design introduces a fundamental information bottleneck:

- All information about the input sequence \(\mathbf {x}\) must be packed into the single vector \(h^{\mbox{enc}}_T\) in (16.4).

- For long inputs, early tokens \((x_1, x_2, \dots )\) may have only a weak influence on \(h^{\mbox{enc}}_T\) due to vanishing gradients and limited capacity, leading to degraded translation or generation quality.

This limitation motivates several extensions that we will develop later in the chapter and in subsequent chapters:

- Gated recurrent units (LSTMs, GRUs) improve how information and gradients propagate through time, making the context vector \(h^{\mbox{enc}}_T\) more robust for longer sequences.

- Attention mechanisms allow the decoder to look back at all encoder states \((h^{\mbox{enc}}_1,\dots ,h^{\mbox{enc}}_T)\) instead of relying solely on \(h^{\mbox{enc}}_T\), thereby softening the bottleneck.

In the next parts, we will connect this generic Seq2Seq template to concrete tasks such as language modeling, derive Backpropagation Through Time (BPTT) for training these models, and then revisit the roles of LSTMs, GRUs, and attention in improving sequence-to-sequence learning.

16.3 Example Usage of Seq2Seq: Language Modeling

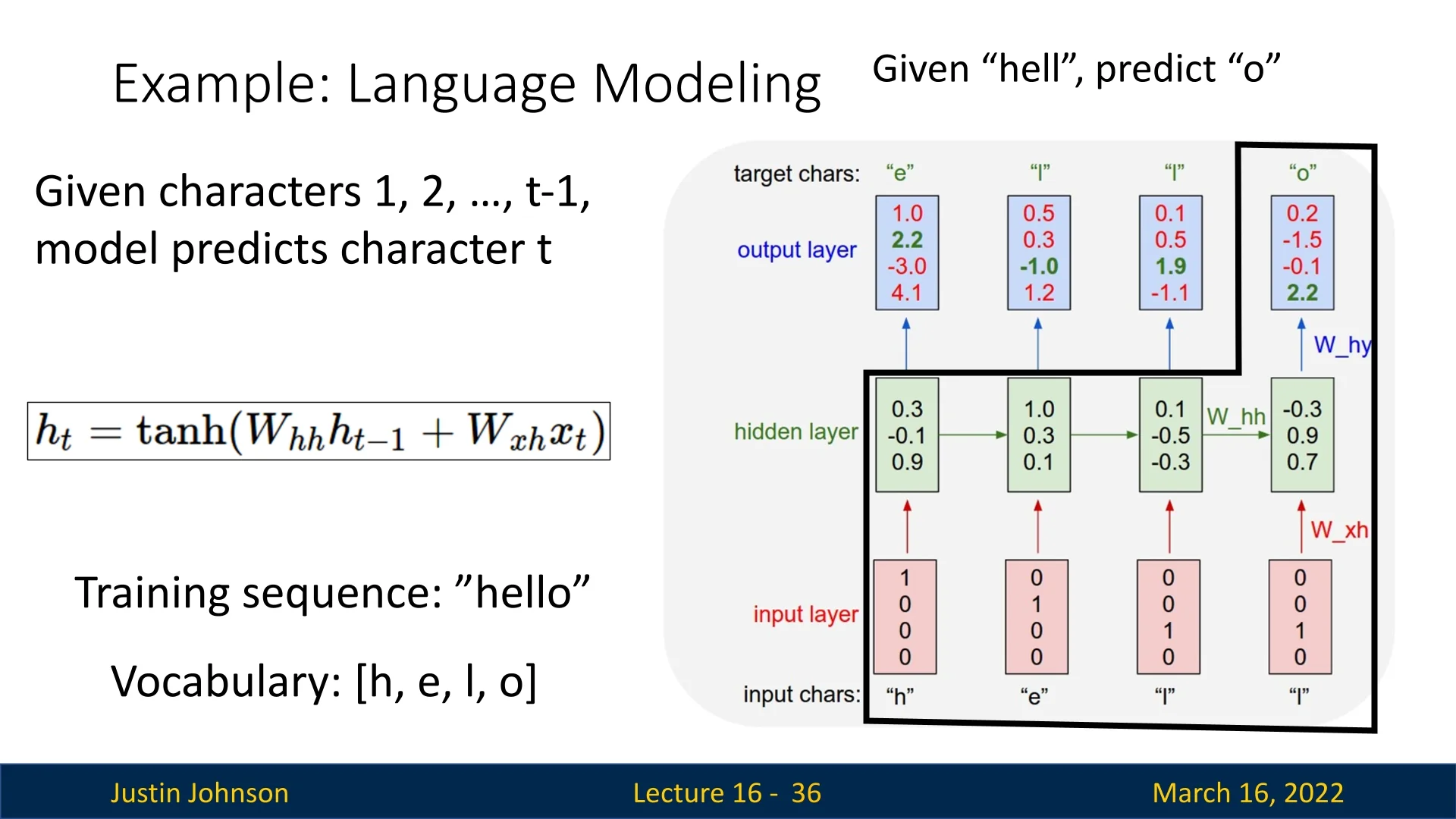

A concrete example of how recurrent networks operate in practice is a character-level language model. The goal is to process a stream of input characters and, at each timestep, predict the next character in the sequence. By learning the conditional distribution \[ p(x_t \mid x_1,\dots ,x_{t-1}), \] the model captures the statistical structure of the training text and can later be used to generate new text.

16.3.1 Formulating the problem

Consider the toy training sequence “hello” with vocabulary \[ \mathcal {V} = \{\mbox{h}, \mbox{e}, \mbox{l}, \mbox{o}\}. \] We view this as a supervised learning problem where, at each timestep, the input is the current character and the target is the next character:

| t | Input | Target |

| 1 | “h” | “e” |

| 2 | “e” | “l” |

| 3 | “l” | “l” |

| 4 | “l” | “o” |

Each character is represented as a one-hot vector in \(\mathbb {R}^{|\mathcal {V}|}\), for example: \[ \mathbf {x}_{\mbox{h}} = [1\;0\;0\;0]^\top ,\quad \mathbf {x}_{\mbox{e}} = [0\;1\;0\;0]^\top ,\quad \mathbf {x}_{\mbox{l}} = [0\;0\;1\;0]^\top ,\quad \mathbf {x}_{\mbox{o}} = [0\;0\;0\;1]^\top . \]

16.3.2 Forward pass through time

A simple RNN with hidden state dimension \(H\) maintains a hidden vector \(\mathbf {h}_t \in \mathbb {R}^H\) and updates it according to \[ \mathbf {h}_t = \tanh \!\bigl (\mathbf {W}_{hh}\mathbf {h}_{t-1} + \mathbf {W}_{xh}\mathbf {x}_t + \mathbf {b}_h\bigr ), \] where \(\mathbf {h}_0\) is an initial state (often the zero vector), \(\mathbf {W}_{xh}\) maps inputs to the hidden layer, \(\mathbf {W}_{hh}\) maps the previous hidden state to the new one, and \(\mathbf {b}_h\) is a bias term shared across time.

From the hidden state, the network produces unnormalized scores (logits) over the next character: \[ \mathbf {y}_t = \mathbf {W}_{hy}\mathbf {h}_t + \mathbf {b}_y, \] with \(\mathbf {W}_{hy}\) and \(\mathbf {b}_y\) shared at all timesteps. Applying a softmax gives a probability distribution over the vocabulary: \[ \mathbf {p}_t = \mathrm {softmax}(\mathbf {y}_t), \qquad (\mathbf {p}_t)_k = \frac {\exp ((\mathbf {y}_t)_k)}{\sum _{j}\exp ((\mathbf {y}_t)_j)}. \]

For example, for the first character “h”, we feed \(\mathbf {x}_1 = \mathbf {x}_{\mbox{h}}\) into the RNN to obtain a hidden state and logits: \[ \mathbf {h}_1 = [0.3,\,-0.1,\,0.9]^\top ,\qquad \mathbf {y}_1 = [1.0,\,2.2,\,-3.0,\,4.1]^\top , \] so that \(\mathbf {p}_1 = \mathrm {softmax}(\mathbf {y}_1)\) assigns high probability to the correct next character “e”. The same computation is repeated for “e”, “l”, and “l”, with the hidden state carrying information about the context seen so far (“h”, “he”, “hel”, “hell”).

Figure 16.6 illustrates this process: given characters up to time \(t-1\) (for example, “he”), the model predicts character \(t\) (“l”); then the new hidden state is forwarded to the next timestep.

16.3.3 Training: losses and gradient flow through time

To train the model, we compare its predictions with the ground-truth next characters and update the shared weights \[ \Theta = \{\mathbf {W}_{xh}, \mathbf {W}_{hh}, \mathbf {W}_{hy}, \mathbf {b}_h, \mathbf {b}_y\}. \]

Per-timestep loss

At each timestep \(t\), the target next character \(x_{t+1}\) is represented as a one-hot vector \(\mathbf {t}_{t+1} \in \mathbb {R}^{|\mathcal {V}|}\). We compute the cross-entropy loss between \(\mathbf {p}_t\) and \(\mathbf {t}_{t+1}\): \[ L_t = - \sum _{k=1}^{|\mathcal {V}|} (\mathbf {t}_{t+1})_k \log (\mathbf {p}_t)_k = - \log (\mathbf {p}_t)_{k^\star }, \] where \(k^\star \) is the index of the true next character at time \(t+1\). For the sequence “hello” we obtain losses \(L_1,\dots ,L_4\) corresponding to the four training pairs listed above.

Sequence loss and gradient

The total loss for the sequence is the sum of per-timestep losses: \[ \mathcal {L} = \sum _{t=1}^{T} L_t. \] Because the same parameters \(\Theta \) are reused at every timestep, the gradient of the sequence loss with respect to any parameter (for example, \(\mathbf {W}_{hh}\)) is the sum of its contributions from each timestep: \[ \frac {\partial \mathcal {L}}{\partial \mathbf {W}_{hh}} = \sum _{t=1}^{T} \frac {\partial L_t}{\partial \mathbf {W}_{hh}}. \]

Each term \(\partial L_t / \partial \mathbf {W}_{hh}\) is itself a chain of derivatives that passes backward through time. For instance, the loss \(L_4\) (predicting “o” given the prefix “hell”) depends on \(\mathbf {h}_4\), which depends on \(\mathbf {h}_3\), which depends on \(\mathbf {h}_2\), and so on back to \(\mathbf {h}_0\). Computing the gradient therefore requires propagating error signals through the entire sequence of hidden states: \[ \mathbf {h}_4 \rightarrow \mathbf {h}_3 \rightarrow \mathbf {h}_2 \rightarrow \mathbf {h}_1 \rightarrow \mathbf {h}_0. \]

Once the gradient \(\nabla _{\Theta } \mathcal {L}\) has been computed, we update the parameters using gradient descent or a variant such as Adam: \[ \Theta \leftarrow \Theta - \eta \,\nabla _{\Theta } \mathcal {L}, \] where \(\eta \) is the learning rate and the negative gradient gives the direction of steepest decrease of the loss.

The procedure for computing these gradients by explicitly following the chain of dependencies backward in time is called Backpropagation Through Time (BPTT). In the next section, we will make this precise by unrolling the RNN across timesteps and deriving the gradient expressions. This will naturally expose numerical issues such as vanishing and exploding gradients when sequences become long.

16.3.4 Inference: generating text

After training, we can use the model to generate new text, one character at a time:

- 1.

- Initialize the hidden state \(\mathbf {h}_0\) (e.g. zeros) and feed an initial character or a special <START> symbol \(\mathbf {x}_1\).

- 2.

- Compute \(\mathbf {h}_1\), logits \(\mathbf {y}_1\), and probabilities \(\mathbf {p}_1 = \mathrm {softmax}(\mathbf {y}_1)\).

- 3.

- Sample or choose the most likely next character from \(\mathbf {p}_1\) (e.g. by \(\arg \max \)), obtaining a character \(x_2\).

- 4.

- Feed the one-hot encoding of \(x_2\) back in as the next input \(\mathbf {x}_2\) and repeat.

This autoregressive loop continues until the model produces a special <END> token or a maximum length is reached.

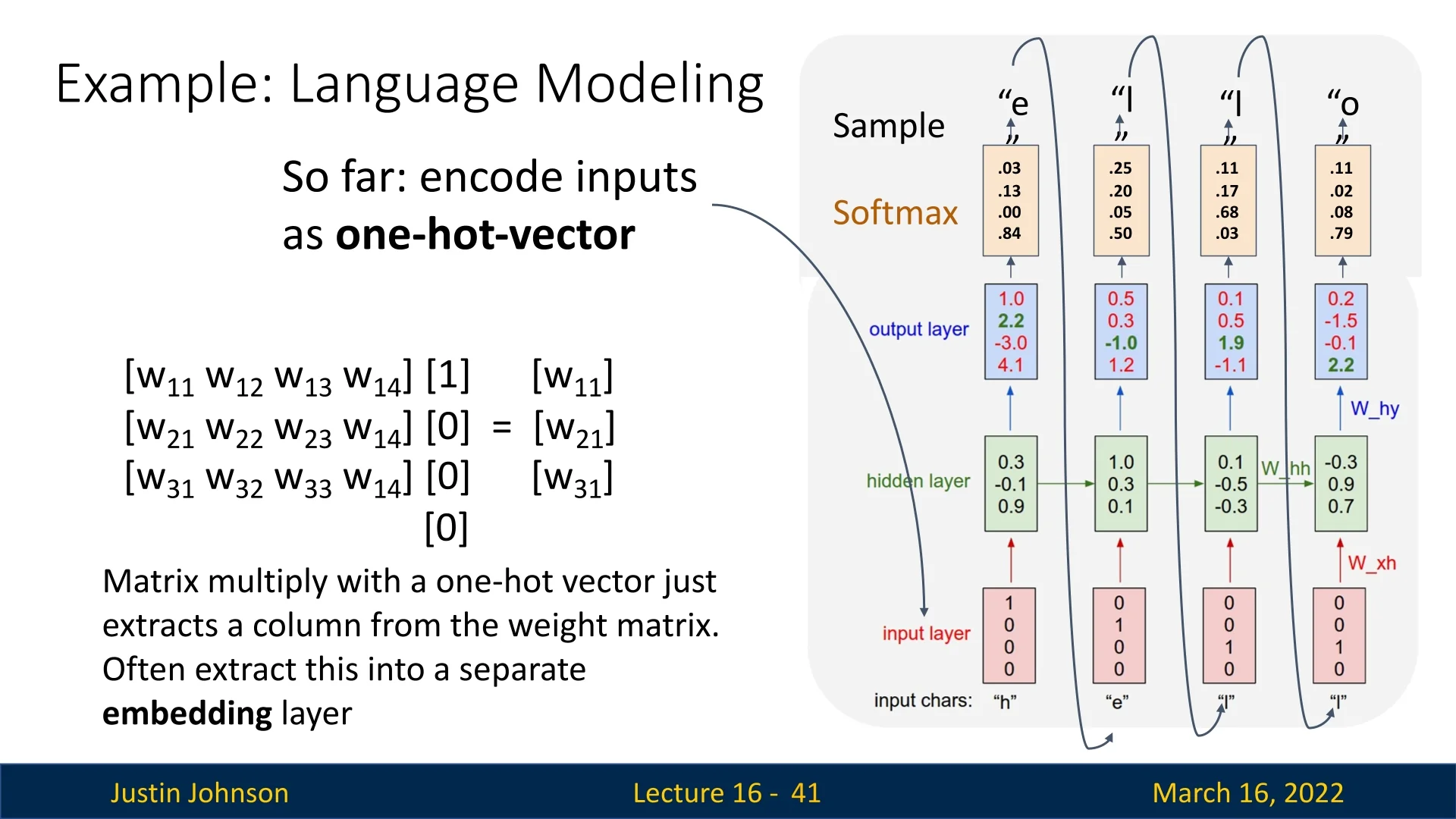

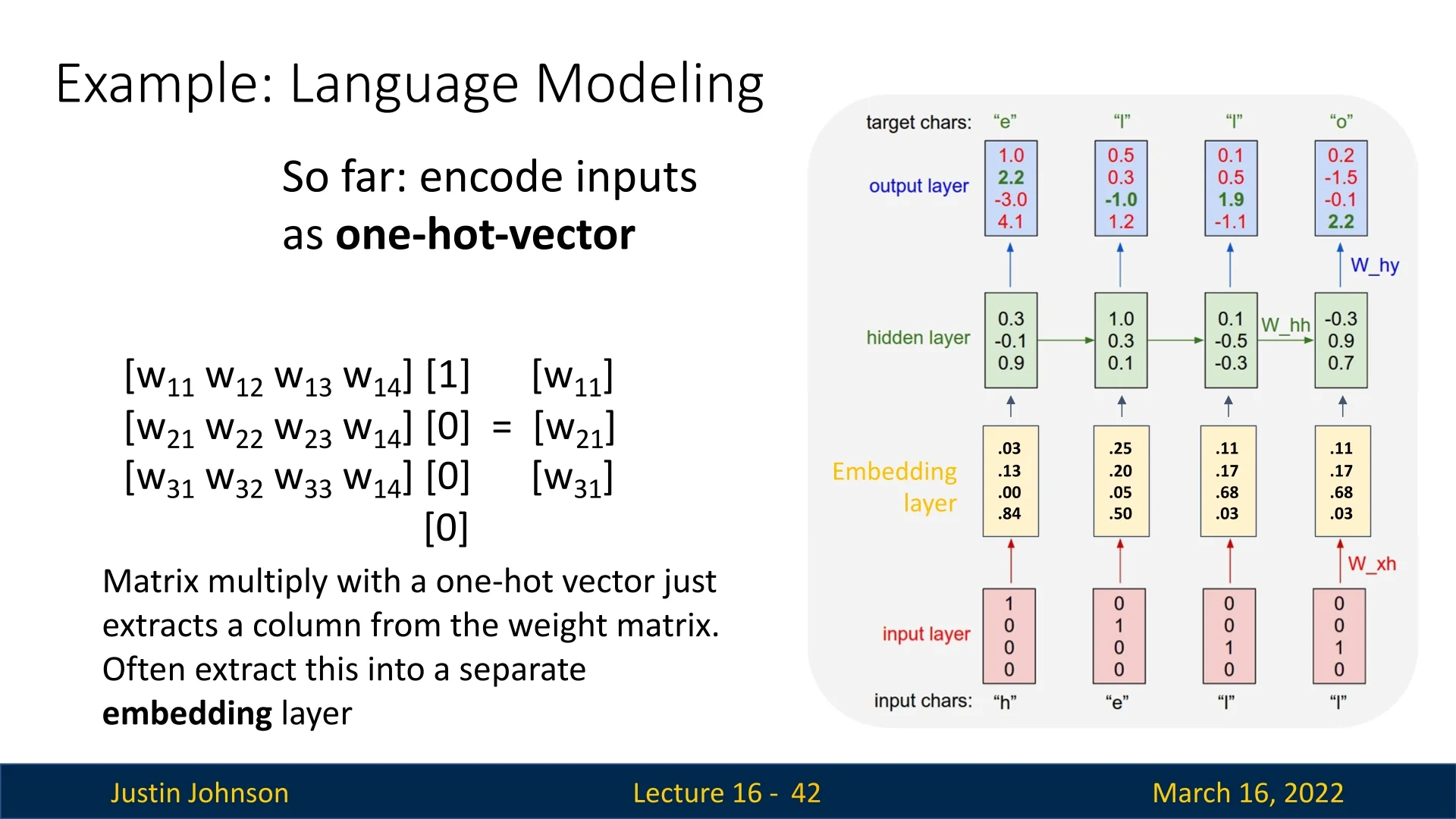

16.3.5 From one-hot vectors to embeddings

So far we have used one-hot vectors as inputs. Multiplying a weight matrix \(\mathbf {W}_{xh}\) by a one-hot vector simply selects one column of \(\mathbf {W}_{xh}\), which can be interpreted as a learned embedding of that character. Modern implementations therefore introduce an explicit embedding layer that maps character indices to dense vectors: \[ \mathbf {e}_t = \mathrm {Embedding}(x_t), \qquad \mathbf {h}_t = \tanh \!\bigl (\mathbf {W}_{hh}\mathbf {h}_{t-1} + \mathbf {W}_{eh}\mathbf {e}_t + \mathbf {b}_h\bigr ), \] where \(\mathbf {W}_{eh}\) plays the role of \(\mathbf {W}_{xh}\) but acts on lower-dimensional embeddings.

This has several advantages:

- Efficiency. We avoid explicitly storing and multiplying large sparse one-hot vectors; indexing into an embedding table is cheaper.

- Learned similarity structure. Characters (or words) with similar usage patterns can acquire similar embedding vectors, helping the model generalize.

- Flexible dimensionality. The embedding dimension can be chosen independently of the vocabulary size, controlling the capacity and computational cost.

16.3.6 Summary and motivation for BPTT

In this example, we have seen how an RNN processes a character sequence like “hello”, predicts the next character at each timestep, aggregates per-timestep cross-entropy losses into a sequence loss, and uses gradients of this loss to update a set of shared parameters. The key difficulty is that the loss at later timesteps depends on a long chain of hidden states and repeated applications of the same weight matrices. Computing and propagating gradients through this temporal chain is precisely the job of Backpropagation Through Time. In the next section we will unroll the RNN formally, derive these gradients, and use that derivation to understand why naïve RNNs suffer from vanishing and exploding gradients on long sequences.

16.4 Backpropagation Through Time (BPTT)

In a Recurrent Neural Network (RNN), the hidden state at time \(t\) depends on the hidden state at time \(t-1\), so unrolling the network over a sequence of length \(T\) yields a deep computational graph with \(T\) repeated applications of the same parameters. Training therefore requires computing gradients not only “through layers” (as in feedforward networks) but also through time. This procedure is known as Backpropagation Through Time (BPTT).

16.4.1 Full BPTT as Backprop on an Unrolled RNN

Consider a simple (vanilla) RNN processing a sequence of length \(T\) with inputs \(\mathbf {x}_1, \dots , \mathbf {x}_T\). The forward dynamics are \begin {align} \mathbf {h}_t &= \phi \!\bigl (\mathbf {W}_{hh}\mathbf {h}_{t-1} + \mathbf {W}_{xh}\mathbf {x}_t + \mathbf {b}_h\bigr ), \qquad t = 1,\dots ,T, \label {eq:chapter16_rnn_forward_h}\\ \mathbf {y}_t &= \mathbf {W}_{hy}\mathbf {h}_t + \mathbf {b}_y, \label {eq:chapter16_rnn_forward_y} \end {align}

where \(\mathbf {h}_0\) is an initial hidden state (often the zero vector or a learned parameter), \(\phi \) is a pointwise activation function (typically \(\tanh \) in classical RNNs), and \(\mathbf {W}_{xh}, \mathbf {W}_{hh}, \mathbf {W}_{hy}, \mathbf {b}_h, \mathbf {b}_y\) are shared across all timesteps.

Let \(\mathcal {L}_t = \ell (\mathbf {y}_t, \mathbf {y}_t^{\mbox{target}})\) denote the loss at timestep \(t\), and define the total sequence loss \[ \mathcal {L} = \sum _{t=1}^{T} \mathcal {L}_t. \] Because parameters are shared in time, the gradient of the total loss with respect to any parameter \(\theta \in \{\mathbf {W}_{xh}, \mathbf {W}_{hh}, \mathbf {W}_{hy}, \mathbf {b}_h, \mathbf {b}_y\}\) decomposes as \[ \frac {\partial \mathcal {L}}{\partial \theta } = \sum _{t=1}^{T} \frac {\partial \mathcal {L}_t}{\partial \theta }. \]

The key difficulty is that \(\mathcal {L}_t\) depends on \(\theta \) not only through the “local” timestep \(t\), but also through the entire history of hidden states \(\mathbf {h}_1,\dots ,\mathbf {h}_t\). For example, for the recurrent weight matrix \(\mathbf {W}_{hh}\) we can write \begin {equation} \frac {\partial \mathcal {L}_t}{\partial \mathbf {W}_{hh}} = \sum _{k=1}^{t} \underbrace {\frac {\partial \mathcal {L}_t}{\partial \mathbf {h}_t}}_{\mbox{error at time }t} \;\underbrace {\frac {\partial \mathbf {h}_t}{\partial \mathbf {h}_k}}_{\mbox{temporal Jacobian }k \rightarrow t} \;\underbrace {\frac {\partial ^+ \mathbf {h}_k}{\partial \mathbf {W}_{hh}}}_{\mbox{local derivative at time }k}, \label {eq:chapter16_bptt_chain} \end {equation} where \(\partial ^+ \mathbf {h}_k / \partial \mathbf {W}_{hh}\) treats \(\mathbf {h}_{k-1}\) as constant.

The temporal Jacobian \(\partial \mathbf {h}_t / \partial \mathbf {h}_k\) itself is a product of one-step Jacobians: \begin {equation} \frac {\partial \mathbf {h}_t}{\partial \mathbf {h}_k} = \prod _{j=k+1}^{t} \frac {\partial \mathbf {h}_j}{\partial \mathbf {h}_{j-1}}, \qquad \frac {\partial \mathbf {h}_j}{\partial \mathbf {h}_{j-1}} = \mathrm {diag}\!\bigl (\phi '(\mathbf {z}_j)\bigr )\,\mathbf {W}_{hh}, \label {eq:chapter16_bptt_jacobian_product} \end {equation} where \(\mathbf {z}_j = \mathbf {W}_{hh}\mathbf {h}_{j-1} + \mathbf {W}_{xh}\mathbf {x}_j + \mathbf {b}_h\) are the pre-activations. Thus, BPTT is standard backpropagation applied to the unrolled computational graph, but its gradients involve products of many Jacobian matrices across time.

Vanishing and Exploding Gradients Revisited

Equation (16.8) is the mathematical origin of the two classic pathologies in RNN training [36, 471]. If we denote the one-step Jacobian at time \(j\) by \[ \mathbf {J}_j = \frac {\partial \mathbf {h}_j}{\partial \mathbf {h}_{j-1}} = \mathrm {diag}\!\bigl (\phi '(\mathbf {z}_j)\bigr )\,\mathbf {W}_{hh}, \] then \(\partial \mathbf {h}_t / \partial \mathbf {h}_k = \mathbf {J}_t \mathbf {J}_{t-1} \cdots \mathbf {J}_{k+1}\). On average, the behavior of this product is controlled by typical singular values of \(\mathbf {J}_j\):

- Vanishing gradients. If the largest singular value of a “typical” Jacobian \(\mathbf {J}_j\) is less than \(1\) on average, then \(\bigl \|\partial \mathbf {h}_t / \partial \mathbf {h}_k\bigr \|\) decays approximately like \(\gamma ^{t-k}\) for some effective contraction factor \(0 < \gamma < 1\). Gradients associated with distant timesteps become numerically negligible, making it extremely hard to learn long-range dependencies [36].

- Exploding gradients. If the largest singular value is greater than \(1\) on average, then \(\bigl \|\partial \mathbf {h}_t / \partial \mathbf {h}_k\bigr \|\) grows approximately like \(\gamma ^{t-k}\) with \(\gamma > 1\). Small errors at late timesteps produce enormous gradients for early timesteps, leading to numerical overflow and unstable optimization [471].

These issues arise even if we ignore the nonlinearity and approximate the dynamics as \(\mathbf {h}_t \approx \mathbf {W}_{hh}\mathbf {h}_{t-1}\). In that case, \(\mathbf {h}_t \approx \mathbf {W}_{hh}^t \mathbf {h}_0\), and both the forward states and the backpropagated gradients are governed by powers of the same matrix \(\mathbf {W}_{hh}\). Unless the spectral properties of \(\mathbf {W}_{hh}\) are carefully controlled, either vanishing or exploding behavior is unavoidable.

Memory Cost of Full BPTT

To compute the exact gradients in (16.7), the forward pass must store all hidden states \(\mathbf {h}_1,\dots ,\mathbf {h}_T\) and pre-activations \(\mathbf {z}_1,\dots ,\mathbf {z}_T\), since the Jacobians depend on these values. The activation memory cost therefore scales as \[ \mathcal {O}(T \cdot d_h), \] where \(d_h\) is the hidden dimension. For long sequences (for example, \(T = 1{,}000\) and \(d_h = 1{,}024\)), storing all activations across many layers and mini-batches can easily require gigabytes of memory, even before accounting for optimizer state and other model parameters. Furthermore, each parameter update requires a full forward and backward pass over the entire sequence, which is computationally expensive.

These considerations motivate an approximation that trades exact long-range gradients for tractable memory and compute: truncated BPTT.

16.4.2 Truncated Backpropagation Through Time

In many applications (language modeling, online speech recognition, reinforcement learning), sequences are effectively unbounded: there is no natural “end of sequence” at which we could run full BPTT. Moreover, as we saw in Section 16.4.1.0, the Jacobian products in full BPTT already suffer from vanishing and exploding gradients even for moderate sequence lengths [36, 471]. Truncated BPTT (often denoted TBPTT-\(\tau \)) addresses both the computational and memory costs by limiting the temporal horizon over which gradients are propagated, at the price of introducing additional bias in credit assignment [694].

Chunked Training with a Finite Horizon

Fix a truncation length (or horizon) \(\tau \ll T\), typically in the range \(\tau \approx 50\)–\(200\). We process the sequence in chunks of length \(\tau \) and backpropagate only within each chunk. Concretely, suppose we process a long sequence in segments \([1,\tau ], [\tau +1, 2\tau ], \dots \). For the \(s\)-th chunk we define \[ b_s = (s-1)\tau , \qquad \mbox{chunk } s: \; t = b_s+1, \dots , b_s + \tau . \] The algorithm proceeds as follows [694, 471]:

- 1.

- Initialize the hidden state. For the first chunk, set \(\mathbf {h}_{0}\) to zeros or a learned initial state. For chunk \(s>1\), set the initial state to the final hidden state of the previous chunk: \(\mathbf {h}_{b_s} = \mathbf {h}_{b_{s-1}+\tau }\).

- 2.

- Forward pass over the chunk. For \(t = b_s+1,\dots ,b_s+\tau \), compute \(\mathbf {h}_t\) and \(\mathbf {y}_t\) using ([ref])–([ref]), and accumulate the chunk loss \[ \mathcal {L}^{(s)} = \sum _{t=b_s+1}^{b_s+\tau } \mathcal {L}_t. \]

- 3.

- Backward pass (truncated in time). Backpropagate gradients from \(\mathcal {L}^{(s)}\) only through the timesteps \(b_s+1,\dots ,b_s+\tau \). In practice, we treat \(\mathbf {h}_{b_s}\) as a constant with respect to the parameters (for example, by calling detach in PyTorch), so no gradient flows into the computations that produced \(\mathbf {h}_{b_s}\).

- 4.

- Parameter update. Use the gradients from this chunk to update the parameters \(\theta \). Then move to the next chunk.

From an optimization point of view, the overall objective remains the sum (or average) of per-timestep losses: \[ \mathcal {L} = \sum _{s=1}^{S} \mathcal {L}^{(s)} = \sum _{s=1}^{S}\;\sum _{t=b_s+1}^{b_s+\tau } \mathcal {L}_t, \] where \(S\) is the number of chunks. Some implementations divide by \(S\) (or by \(T\)) to work with an average loss, but the gradient structure is unchanged: each update only uses gradients originating from the most recent \(\tau \) timesteps.

Interaction with Vanishing and Exploding Gradients

Truncated BPTT changes how vanishing and exploding gradients appear, but it does not remove the underlying pathologies analyzed in Section 16.4.1.0. It shortens the dangerous Jacobian products (helping with explosion on very long sequences) while adding a hard cutoff that exacerbates vanishing for long-range dependencies [694, 471].

Exploding gradients: partial mitigation via shorter chains In full BPTT, the gradient from time \(T\) back to time \(1\) involves a product of \(T-1\) Jacobians, \(\prod _{j=1}^{T-1} \mathbf {J}_j\), whose norm typically behaves like \(\|\mathbf {J}\|^{T-1}\) for some average Jacobian norm \(\|\mathbf {J}\|\) [36, 471]. If the dominant singular value of the recurrent Jacobian is slightly larger than \(1\), say \(\|\mathbf {J}\| \approx 1.1\), the gradient can grow as \(1.1^{T}\), leading to catastrophic explosion on long sequences.

Truncated BPTT caps the length of this product at the truncation horizon \(\tau \) [694]: no gradient ever involves more than \(\tau \) Jacobian factors. In the toy example above, the worst-case growth is now \(1.1^{\tau }\) instead of \(1.1^{T}\). For \(T = 1000\) and \(\tau = 50\), this replaces a factor of roughly \(2.5 \times 10^{41}\) by about \(117\), which is much easier to manage with gradient clipping [471].

However, if the per-step Jacobians are highly unstable (for example, \(\|\mathbf {W}_{hh}\| \gg 1\)), gradients can still explode within the \(\tau \)-step window. Empirically and theoretically, truncated BPTT therefore reduces the risk of catastrophic explosion on very long sequences, but does not guarantee stability; gradient clipping remains necessary in practice [471].

Vanishing gradients: soft decay plus hard truncation For vanishing gradients, truncated BPTT actually makes the situation worse for long-range dependencies by combining two effects: the soft exponential decay inherent in vanilla RNNs and an additional hard algorithmic cutoff at the truncation boundary.

Under full BPTT, the gradient of a loss at time \(T\) with respect to an earlier hidden state \(\mathbf {h}_k\) can be written as \[ \frac {\partial \mathcal {L}_T}{\partial \mathbf {h}_k} = \frac {\partial \mathcal {L}_T}{\partial \mathbf {h}_T} \prod _{j=k+1}^{T} \mathbf {J}_j, \] and the norm of this product typically decays roughly like \(\gamma ^{T-k}\) for some effective contraction factor \(\gamma < 1\) determined by the recurrent Jacobian [36, 471].

With truncated BPTT and horizon \(\tau \), this chain rule is applied differently depending on the distance \(T-k\):

- Within the horizon (\(T-k \le \tau \)). All timesteps from \(k\) to \(T\) lie in the same chunk, so we still form a product of one-step Jacobians \(\prod _{j=k+1}^{T} \mathbf {J}_j\). Because each factor typically has singular values \(< 1\) on average, the gradient decays approximately like \(\gamma ^{T-k}\) just as in full BPTT [36]. In other words, truncation does not improve vanishing locally: signals from \(\tau \) steps ago are already extremely small before truncation is even applied.

- Beyond the horizon (\(T-k > \tau \)). In this case, the computational graph crosses at least one chunk boundary. At each boundary we explicitly treat the incoming hidden state as a constant (for example, via detach in PyTorch), which enforces \[ \frac {\partial \mathbf {h}_{b_s}}{\partial \mathbf {h}_{b_s-1}} = \mathbf {0} \] for the “virtual” edge that would connect the previous chunk to the current one. This inserts a zero matrix into the Jacobian product, so the entire gradient \(\frac {\partial \mathcal {L}_T}{\partial \mathbf {h}_k}\) collapses to exactly zero as soon as the path from \(k\) to \(T\) crosses a truncation boundary [694, 471].

In full BPTT, a distant timestep \(k\) might still exert a tiny but nonzero influence on \(\mathcal {L}_T\), on the order of \(\gamma ^{T-k}\). Under truncated BPTT, any timestep more than \(\tau \) steps away exerts no influence at all: the gradient path is cut off by construction. The effective credit-assignment horizon is therefore limited to \[ \mbox{effective horizon} \;\approx \; \min \bigl (\tau ,\;\mbox{intrinsic vanishing horizon from the recurrent dynamics}\bigr ), \] so truncation preserves vanishing within each window while adding a hard ceiling on learnable temporal dependencies beyond \(\tau \) [36, 471].

Benefits and Limitations of Truncation

Advantages. Truncated BPTT is primarily a computational tool [694, 471]:

- Reduced memory usage. At any point we only need to store activations for \(\tau \) timesteps, so the activation memory scales as \(\mathcal {O}(\tau \cdot d_h)\) instead of \(\mathcal {O}(T \cdot d_h)\). This is essential when \(T\) is very large or effectively unbounded (for example, in streaming text or reinforcement learning).

- Improved throughput. Forward and backward passes over shorter chunks are faster, allowing more frequent parameter updates and better hardware utilization. This is one of the main motivations for TBPTT in practice [471].

- Support for arbitrarily long streams. Because memory and computation per update depend on \(\tau \) rather than on the total stream length, truncated BPTT allows RNNs to be trained on sequences that span millions of timesteps or never terminate.

Fundamental limitations. These computational gains come at a conceptual cost that compounds the vanishing/exploding gradient issues [36, 471]:

- Truncation bias and hard horizon. Dependencies longer than \(\tau \) timesteps receive no gradient signal. The model can see long-range context in the hidden state, but it cannot learn to encode or preserve that context better, because no gradient flows back to the parameters responsible for it. This makes long-term dependencies systematically harder (or impossible) to learn, beyond the intrinsic vanishing-gradient effects.

- Uncorrected hidden state. The initial hidden state of each chunk, \(\mathbf {h}_{b_s}\), is a function of earlier inputs and parameters, but gradients are not allowed to adjust those earlier computations. If those states encode information poorly, no later loss can correct them. Over many chunks, hidden states may drift into saturated regimes (where \(\tanh '\) is near zero) or noisy regimes, further weakening gradient flow even within a window [36].

- Non-stationary optimization landscape. Because the gradient ignores all contributions beyond \(\tau \) steps, the effective loss surface seen by the optimizer depends on the choice of \(\tau \) and on how chunk boundaries align with the data. This makes training more sensitive to learning-rate schedules, initialization, and truncation strategy [471].

In practice, truncation horizons \(\tau \approx 50\)–\(100\) are common compromises. They make training on long or streaming sequences feasible and less prone to catastrophic explosion, but even with TBPTT and gradient clipping, vanilla RNNs remain fundamentally limited on tasks that require precise credit assignment over hundreds or thousands of timesteps [36]. This limitation is a key motivation for gated architectures such as LSTMs and GRUs, which modify the recurrence itself rather than relying solely on truncation.

16.4.3 Why BPTT and TBPTT Struggle on Long Sequences

Putting these pieces together, we can now summarize why both full BPTT and truncated BPTT remain fundamentally limited for long sequences, even when we use \(\tanh \) activations, careful initialization, and gradient clipping. The limitations come from the combination of gradient dynamics, truncation, and the architecture itself.

- Fixed-capacity hidden state. At each timestep, all relevant information from the past must be compressed into a fixed-dimensional vector \(\mathbf {h}_t \in \mathbb {R}^{d_h}\). As the sequence length grows, the amount of information to retain grows, but the capacity of \(\mathbf {h}_t\) does not. Inevitably, older information is overwritten or blurred.

- Exponential decay or growth of influence. In a linearized view, the influence of an input at time \(k\) on the hidden state at time \(t\) is governed by \(\mathbf {W}_{hh}^{t-k}\). As discussed in Section 16.4.1.0, unless the spectral radius of \(\mathbf {W}_{hh}\) is exactly \(1\) under all conditions (which is unrealistic to maintain during training), contributions from the distant past either vanish or explode. This structural property affects both full BPTT and each truncated window in TBPTT.

- Truncation-induced loss of long-range credit assignment. Truncated BPTT adds an explicit horizon: gradients are forcibly cut off after \(\tau \) steps. Even if the hidden state still carries useful information from hundreds of steps ago, the model never receives a learning signal telling it how to encode that information. Thus, TBPTT cannot, by construction, learn dependencies longer than its truncation horizon.

- Sequential computation and limited parallelism. Unlike CNNs or Transformers, which can process all positions in parallel, RNNs must process timesteps sequentially: \(\mathbf {h}_t\) depends on \(\mathbf {h}_{t-1}\). This makes efficient training on very long sequences difficult on modern hardware, even if memory and gradient stability were not an issue.

These limitations are not fully solvable by better regularization, optimizers, or clever truncation strategies alone. They stem from the architectural decision to store all memory in a single evolving state vector updated by repeated application of the same transformation. The natural next step is to make this recurrence adaptive, allowing the network to decide how much of the past to keep, how much to forget, and which information to expose at each timestep.

This is precisely the role of gated architectures such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), which we will introduce after understanding the activation-function trade-offs in vanilla RNNs.

16.5 Why RNNs Use tanh Instead of ReLU

Modern feedforward architectures (ConvNets, Transformers) overwhelmingly favor ReLU-family activations (ReLU, Leaky ReLU, GELU, etc.). Classical vanilla RNNs, by contrast, almost always use \(\tanh \) (or occasionally sigmoid) in their recurrent layers. This is not a historical accident: it follows from the fact that RNNs repeatedly apply the same recurrent matrix over time, and from the gradient behavior analyzed in Section 16.4.1.

At a high level, vanilla RNNs face a harsh trade-off:

- With ReLU-like, unbounded activations, forward activations and gradients are extremely prone to catastrophic explosion.

- With tanh, forward activations are provably bounded, and gradients are strongly damped, which greatly reduces explosion but exacerbates vanishing.

Exploding gradients are typically a fatal failure mode (NaNs, divergence), whereas vanishing gradients are a difficult but manageable limitation (the model still trains on short horizons). This makes \(\tanh \) the “lesser of two evils” in vanilla RNNs. The real fix for long-range credit assignment will come from gated architectures (LSTMs and GRUs), not from swapping \(\tanh \) for ReLU.

16.5.1 Recurrent Dynamics and Gradient Flow

Recall the vanilla RNN update from Section 16.4: \[ \mathbf {h}_t = \phi \!\bigl ( \mathbf {W}_{hh}\mathbf {h}_{t-1} + \mathbf {W}_{xh}\mathbf {x}_t + \mathbf {b}_h \bigr ), \] where \(\phi \) is the activation function. To isolate the recurrent dynamics, ignore inputs and biases: \[ \mathbf {h}_t \approx \phi \!\bigl (\mathbf {W}_{hh}\mathbf {h}_{t-1}\bigr ), \qquad \mathbf {h}_T \approx \phi ^{(T)}\!\bigl (\mathbf {W}_{hh}^T \mathbf {h}_0\bigr ). \] Thus the long-term behavior is governed by powers of the same matrix \(\mathbf {W}_{hh}\).

Spectral radius and forward stability Let \(\lambda _1,\dots ,\lambda _{d_h}\) be the eigenvalues of \(\mathbf {W}_{hh}\), and define the spectral radius \[ \rho (\mathbf {W}_{hh}) = \max _i |\lambda _i|. \] Intuitively, \(\rho (\mathbf {W}_{hh})\) measures how repeated application of \(\mathbf {W}_{hh}\) tends to expand or contract vectors.

- If \(\rho (\mathbf {W}_{hh}) > 1\), some directions in state space are amplified exponentially as \(t\) increases. Without a bounding nonlinearity, the hidden state norm \(\|\mathbf {h}_t\|\) can grow without bound.

- If \(\rho (\mathbf {W}_{hh}) < 1\), all directions contract exponentially. Old information in \(\mathbf {h}_t\) is gradually “forgotten” as it is repeatedly multiplied by a contractive operator.

Initialization schemes (for example, orthogonal or scaled identity matrices) attempt to control \(\rho (\mathbf {W}_{hh})\), but forward stability alone is not enough: we also care about how gradients propagate through time.

Gradient Flow Through Time

Section 16.4.1 showed that gradients through time are controlled by products of one-step Jacobians. For an earlier hidden state \(\mathbf {h}_t\), the gradient of the loss \(\mathcal {L}\) can be written as \[ \frac {\partial \mathcal {L}}{\partial \mathbf {h}_t} = \frac {\partial \mathcal {L}}{\partial \mathbf {h}_T} \prod _{j=t}^{T-1} \frac {\partial \mathbf {h}_{j+1}}{\partial \mathbf {h}_j}, \] with one-step Jacobians \[ \frac {\partial \mathbf {h}_{j+1}}{\partial \mathbf {h}_j} = \mathrm {diag}\!\Bigl ( \phi '\!\bigl (\mathbf {W}_{hh}\mathbf {h}_j + \mathbf {W}_{xh}\mathbf {x}_{j+1} + \mathbf {b}_h\bigr ) \Bigr )\, \mathbf {W}_{hh} \;\equiv \; \mathbf {J}_j. \]

As in Equation (16.8), the gradient norm is governed by the product \[ \prod _{j=t}^{T-1} \mathbf {J}_j. \] Two ingredients matter:

- The spectral norm \(\|\mathbf {W}_{hh}\|_2\), which determines how much \(\mathbf {W}_{hh}\) itself expands vectors;

- The typical magnitude of the activation derivative \(\phi '(\cdot )\), which appears on the diagonal of each \(\mathbf {J}_j\).

Roughly, each factor \(\mathbf {J}_j\) scales gradients by something like \(\|\phi '\|_\infty \cdot \|\mathbf {W}_{hh}\|_2\). If this effective factor is consistently larger than \(1\), gradients explode across many timesteps; if it is consistently smaller than \(1\), they vanish [36, 471]. There is no scalar activation that keeps this product exactly at \(1\) across hundreds of steps in a plain vanilla RNN; we must choose which failure mode is more tolerable.

16.5.2 Why Plain ReLU Is Problematic in RNNs

For ReLU, \[ \phi _{\mbox{ReLU}}(z) = \max (0,z), \qquad \phi _{\mbox{ReLU}}'(z) = \begin {cases} 1, & z > 0,\\ 0, & z \le 0, \end {cases} \] so active units have derivative exactly \(1\). When many units are active, the Jacobian is approximately \[ \frac {\partial \mathbf {h}_{j+1}}{\partial \mathbf {h}_j} \approx \mathbf {W}_{hh}, \quad \mbox{and}\quad \frac {\partial \mathbf {h}_T}{\partial \mathbf {h}_t} \approx \mathbf {W}_{hh}^{T-t}. \]

Two extreme regimes dominate in practice:

- Exploding regime. If we initialize \(\mathbf {W}_{hh}\) so that \(\|\mathbf {W}_{hh}\|_2 \gtrsim 1\) (to avoid immediate vanishing), the gradient norm behaves roughly like \(\|\mathbf {W}_{hh}\|_2^{T-t}\). Even a mild expansion factor such as \(1.1\) leads to \(1.1^{100} \approx 1.4 \times 10^4\); for longer sequences, gradients quickly grow beyond floating-point range, producing numerical overflow and NaNs. Forward activations can explode as well, since ReLU is unbounded on the positive side.

- Dead-neuron / strongly contractive regime. If we initialize \(\mathbf {W}_{hh}\) very small so that \(\|\mathbf {W}_{hh}\|_2 < 1\), many pre-activations become negative, ReLU outputs \(0\), and \(\phi '(z)=0\). Those units stop contributing and stop receiving gradient, effectively shrinking the dimensionality of the hidden state and encouraging rapid vanishing.

Deep feedforward networks mitigate such issues via residual connections, normalization layers, and limited depth. In a vanilla RNN, however, the same transformation is applied hundreds or thousands of times, so small deviations of \(\|\mathbf {W}_{hh}\|_2\) from \(1\) are amplified much more severely. Empirically, vanilla RNNs with ReLU-like activations are extremely fragile and typically require very aggressive gradient clipping and carefully tuned initialization just to avoid immediate divergence [471].

16.5.3 Why tanh Is Safer in Vanilla RNNs

The \(\tanh \) activation, \[ \tanh (x) = \frac {e^x - e^{-x}}{e^x + e^{-x}}, \] changes this picture in three important ways. It does not remove vanishing gradients, but it dramatically reduces the risk of catastrophic explosion and keeps the forward dynamics numerically well behaved.

1. Bounded outputs: forward stability \(\tanh (x)\in (-1,1)\) for all \(x\). Regardless of how large \(\mathbf {W}_{hh}\mathbf {h}_{t-1} + \mathbf {W}_{xh}\mathbf {x}_t + \mathbf {b}_h\) becomes, each hidden unit is clamped into a fixed interval. As a result:

- Hidden states cannot diverge to arbitrarily large magnitudes in the forward pass.

- Inputs to subsequent layers and to the loss remain within a predictable numeric range.

This boundedness removes the forward counterpart of the exploding-gradient problem: even if \(\rho (\mathbf {W}_{hh}) > 1\), activations themselves remain in \((-1,1)\), which makes the overall network much more robust.

2. Derivative bounded by 1: automatic damping of explosions The derivative of \(\tanh \) is \[ \frac {d}{dx}\tanh (x) = 1 - \tanh ^2(x), \] so \(|\tanh '(x)| \le 1\) for all \(x\), with equality only at \(x=0\). In the Jacobians \(\mathbf {J}_j = \mathrm {diag}\bigl (\tanh '(\cdot )\bigr )\mathbf {W}_{hh}\), this derivative acts as a multiplicative damping factor on the singular values of \(\mathbf {W}_{hh}\). Compared with ReLU (whose derivative is exactly \(1\) in the active region), \(\tanh \) has two stabilizing effects:

- When hidden units are in the linear regime (\(x \approx 0\)), \(|\tanh '(x)| \approx 1\), so short-range gradient flow is similar to ReLU.

- When hidden units grow in magnitude, \(|\tanh '(x)|\) shrinks toward \(0\), so the Jacobian factors \(\mathbf {J}_j\) become strongly contractive. This automatically dampens any tendency of \(\mathbf {W}_{hh}\) to amplify gradients.

In other words, \(\tanh \) implements a state-dependent gain control: as activations grow, local derivatives shrink, pushing the effective per-step gain \(\|\mathbf {J}_j\|_2\) back toward or below \(1\). Large gradient explosions become much less common than with ReLU [471].

3. Zero-centered activations The range of \(\tanh \) is symmetric around zero. Hidden states can be positive or negative, so contributions in \(\mathbf {W}_{hh}\mathbf {h}_{t-1}\) can cancel each other. In contrast, ReLU produces nonnegative activations, and with mostly positive weights this can create a “positive feedback loop” in which hidden states grow in the same direction step after step. Zero-centered activations therefore provide an additional bias toward stable dynamics and often lead to smoother optimization.

Caveat: vanishing gradients on long sequences The same mechanisms that prevent explosion also promote vanishing:

- When \(|x|\) is moderate to large, \(\tanh (x)\) saturates near \(\pm 1\), and \(\tanh '(x) \approx 0\).

- Over long sequences, many units spend much of their time in these saturated regimes, so each Jacobian factor \(\mathbf {J}_j\) is strongly contractive, and products of \(\mathbf {J}_j\) quickly drive gradients toward zero.

Thus, \(\tanh \)-RNNs are typically effective on short or medium-length sequences (tens of timesteps), but they struggle when precise credit assignment is required over hundreds or thousands of steps. \(\tanh \) does not fix the vanishing-gradient problem; it trades catastrophic explosion for controlled vanishing [36, 471].

16.5.4 ReLU Variants and Gradient Clipping

Two popular ReLU variants are sometimes suggested for RNNs: ReLU6 (bounded above) and Leaky ReLU (nonzero slope for negative inputs). They address specific ReLU pathologies, but do not fundamentally resolve recurrent stability.

ReLU6: bounded but hard saturation ReLU6 clamps activations to \([0,6]\): \[ \phi (x) = \min \bigl (\max (0,x), 6\bigr ). \] The upper bound prevents unbounded growth of hidden states in the forward pass, but once a unit saturates at \(6\) its derivative becomes zero for all larger inputs. In an RNN, many units can quickly hit this ceiling and then effectively “die”: they contribute a constant value and receive no gradient, shrinking the effective hidden dimensionality and again encouraging vanishing gradients.

Leaky ReLU: softer but still unbounded Leaky ReLU is defined as \[ \phi (x) = \begin {cases} x, & x > 0,\\[3pt] \alpha x, & x \le 0, \end {cases} \qquad 0 < \alpha \ll 1. \] This avoids the “dying ReLU” problem by giving a nonzero gradient for negative inputs and can modestly reduce vanishing. However, the activation remains unbounded on the positive side, and the derivative for positive inputs is still \(1\). If \(\|\mathbf {W}_{hh}\|_2 > 1\) and hidden states remain mostly positive, both forward activations and gradients can still explode over time. Leaky ReLU is therefore only a partial fix and does not remove the need for careful control of \(\mathbf {W}_{hh}\) and heavy gradient clipping in vanilla RNNs.

Why Gradient Clipping Alone Is Insufficient

Gradient clipping [471] is a standard heuristic to curb exploding gradients. Given a gradient vector \(\mathbf {g} = \nabla \mathcal {L}\) and threshold \(c > 0\), global norm clipping replaces \[ \mathbf {g} \;\leftarrow \; \frac {\mathbf {g}}{\max \bigl (1, \|\mathbf {g}\| / c\bigr )}, \] so that update steps never exceed length \(c\). This limits catastrophic jumps in parameter space, but it does not repair the recurrent dynamics that create exploding and vanishing gradients in the first place [471].

Clipping cannot fix unstable recurrence First, clipping acts only in the backward pass. With unbounded activations and expansive recurrence \(\rho (\mathbf {W}_{hh}) > 1\), hidden states grow roughly as \[ \|\mathbf {h}_t\| \approx \|\mathbf {W}_{hh}^t \mathbf {h}_0\|, \] and can reach extremely large magnitudes. Once activations or losses overflow to \(\pm \infty \) or NaN, gradients are undefined and clipping cannot intervene. Thus, “ReLU + clipping” cannot guarantee stability of vanilla RNNs because the primary failure mode (exploding states) remains.

Second, even when the forward pass stays finite, the exploding-gradient problem arises from products of Jacobians as in Equation (16.8): \[ \prod _{j=t}^{T-1} \mathbf {J}_j = \prod _{j=t}^{T-1} \mathrm {diag}\bigl (\phi '(\cdot )\bigr )\,\mathbf {W}_{hh}. \] If these products are strongly expansive, clipping intervenes only after they have amplified the gradient, truncating large vectors to have norm \(c\). When this happens frequently (as in a ReLU-RNN whose gradients would otherwise explode every few steps), optimization is heavily distorted:

- Large gradients are projected onto the sphere of radius \(c\), so the method behaves more like a noisy sign-based optimizer than a faithful first-order method.

- The effective learning rate becomes entangled with how often clipping triggers.

- The underlying Jacobian products remain expansive; clipping only limits their impact on parameter updates, not their origin.

Heavy reliance on clipping with unbounded activations therefore treats the symptom (huge updates) rather than the cause (unstable recurrence).

Why we still clip with tanh With a bounded activation such as \(\tanh \), forward activations lie in \((-1,1)\), so catastrophic state explosion is much less likely. Nevertheless, clipping remains useful even for \(\tanh \)-RNNs [471]:

- Transient spikes. When many units operate near the linear regime (\(x \approx 0\)), the derivatives satisfy \(|\tanh '(x)| \approx 1\), so the Jacobians \(\mathbf {J}_j\) can have singular values \(\gtrsim 1\). Over tens of steps, this can produce occasional gradient spikes before the network settles into a more contractive regime.

- Stacked architectures and large outputs. In multi-layer RNNs or models with large output layers, large gradients can originate from higher layers or the loss, even if the recurrent block itself is relatively stable. Clipping prevents these spikes from destabilizing the recurrent parameters.

- Low-cost insurance. Once bounded activations have removed the worst forward-pass explosions, clipping only rarely activates. It becomes a cheap safety net against rare outliers, instead of a mechanism that fires on most updates.

In practice, then, ReLU + aggressive clipping tries to use clipping as the primary stabilizer, which is fragile and distorting, whereas tanh + light clipping uses clipping as a secondary safeguard on top of already stable forward dynamics.

16.5.5 Summary and Motivation for Gated RNNs

Because a vanilla RNN repeatedly applies the same recurrent transformation, the Jacobian products in Equation (16.8) will, for any smooth activation \(\phi \), tend to either contract or expand exponentially over long horizons [36, 471]. No scalar nonlinearity can simultaneously avoid vanishing and exploding gradients in this setting; different activations simply choose different points on the stability–memory trade-off.

The following table summarizes the main properties of common activations in vanilla RNNs.

| Activation | Bounded? | Max derivative | Zero-centered? | Typical failure mode |

|---|---|---|---|---|

| ReLU | No | \(1\) | No | Exploding hidden states and gradients |

| Leaky ReLU | No (above) | \(1\) (\(x>0\)) | No | Explodes if states stay mostly positive |

| ReLU6 | Yes | \(1\) then \(0\) | No | Units saturate near 6 and stop learning |

| \(\tanh \) | Yes | \(\le 1\) | Yes | Vanishing gradients on long sequences |

| Sigmoid | Yes | \(\le 0.25\) | No | Strong vanishing, even on short sequences |

From this viewpoint, the historical choice of \(\tanh \) in vanilla RNNs is straightforward:

- ReLU family. Unbounded outputs and unit derivative in the active region help mitigate vanishing in deep feedforward networks, but in vanilla RNNs they make both hidden states and gradients highly susceptible to exponential growth. Even with clipping, forward-pass explosions and unstable optimization are common.

- tanh. Bounded, zero-centered outputs and derivatives \(|\tanh '(x)| \le 1\) strongly damp both activations and gradients. This largely eliminates catastrophic explosion and yields numerically stable training, at the price of pronounced vanishing on long sequences: precise credit assignment beyond tens of timesteps becomes very hard.

Exploding gradients are a catastrophic failure mode (training diverges, NaNs appear), whereas vanishing gradients are a limiting failure mode (the model still trains, but only captures short- to medium-range dependencies). Consequently, vanilla RNNs almost universally adopt \(\tanh \) (typically with light gradient clipping) rather than ReLU + heavy clipping: it is better to have a model that learns reliably on short horizons than one that is numerically unstable on most problems.

At the same time, this analysis makes clear that activation choice alone cannot solve long-range credit assignment. As long as gradients must traverse repeated Jacobian products of the form \(\mathrm {diag}\bigl (\phi '(\cdot )\bigr )\mathbf {W}_{hh}\), they will eventually vanish or explode over sufficiently many timesteps [36, 471]. The next step is therefore to change the architecture, not just the nonlinearity.

Gated RNNs such as LSTMs and GRUs address this by introducing:

- Additive memory paths, along which information (and gradients) can flow with gain close to \(1\) across many timesteps, rather than being repeatedly multiplied by \(\mathbf {W}_{hh}\).

- Multiplicative gates, which learn when to write, keep, or erase information, allowing the network to maintain long-term dependencies without sacrificing stability.

These architectures retain \(\tanh \) (and sigmoid) as stable building blocks, but wrap them in a recurrent structure designed to keep gradient norms under control over much longer horizons. In the next parts we will see how this gating mechanism overcomes the limitations of vanilla \(\tanh \)-RNNs while preserving their numerical robustness.

16.6 Example Usages of Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are widely used in various sequential tasks, particularly in text processing and generation. With modern deep learning frameworks such as PyTorch, implementing an RNN requires only a few lines of code, allowing researchers and practitioners to train language models on large text corpora efficiently. In this section, we explore notable applications of RNNs, starting with text-based tasks, including text generation and analyzing what representations RNNs learn from data.

16.6.1 RNNs for Text-Based Tasks

One of the most intriguing applications of RNNs is text generation. By training an RNN on a large corpus of text, the model learns to predict the next character or word based on previous context. Once trained, it can generate text in a similar style to its training data, capturing syntactic and stylistic structures.

Generating Text with RNNs

A simple character-level RNN can be trained on various text corpora, such as Shakespeare’s works, LaTeX source files, or C programming code. Despite its simplicity, an RNN can learn meaningful statistical patterns, including character frequencies, word structures, and even basic grammatical rules.

Some examples of text generation with RNNs:

- Shakespeare-style text: After training on Shakespeare’s works, an RNN can generate text that mimics old-English writing, maintaining proper character names and poetic structure.

- LaTeX code generation: An RNN trained on LaTeX documents can generate LaTeX-like syntax, although the output may not always be valid compilable code.

- C code generation: By training on a dataset of C programming files, the RNN can generate snippets of C-like syntax, capturing programming constructs such as loops and conditionals.

These examples demonstrate that RNNs can capture both structural and stylistic aspects of language, learning dependencies that extend across sequences. However, understanding what representations the RNN has learned from the data remains an open research question.

16.6.2 Understanding What RNNs Learn

Since an RNN produces hidden states at each timestep, it implicitly learns internal representations of the input data. A key research question is: What kinds of representations do RNNs learn from the data they are trained on?

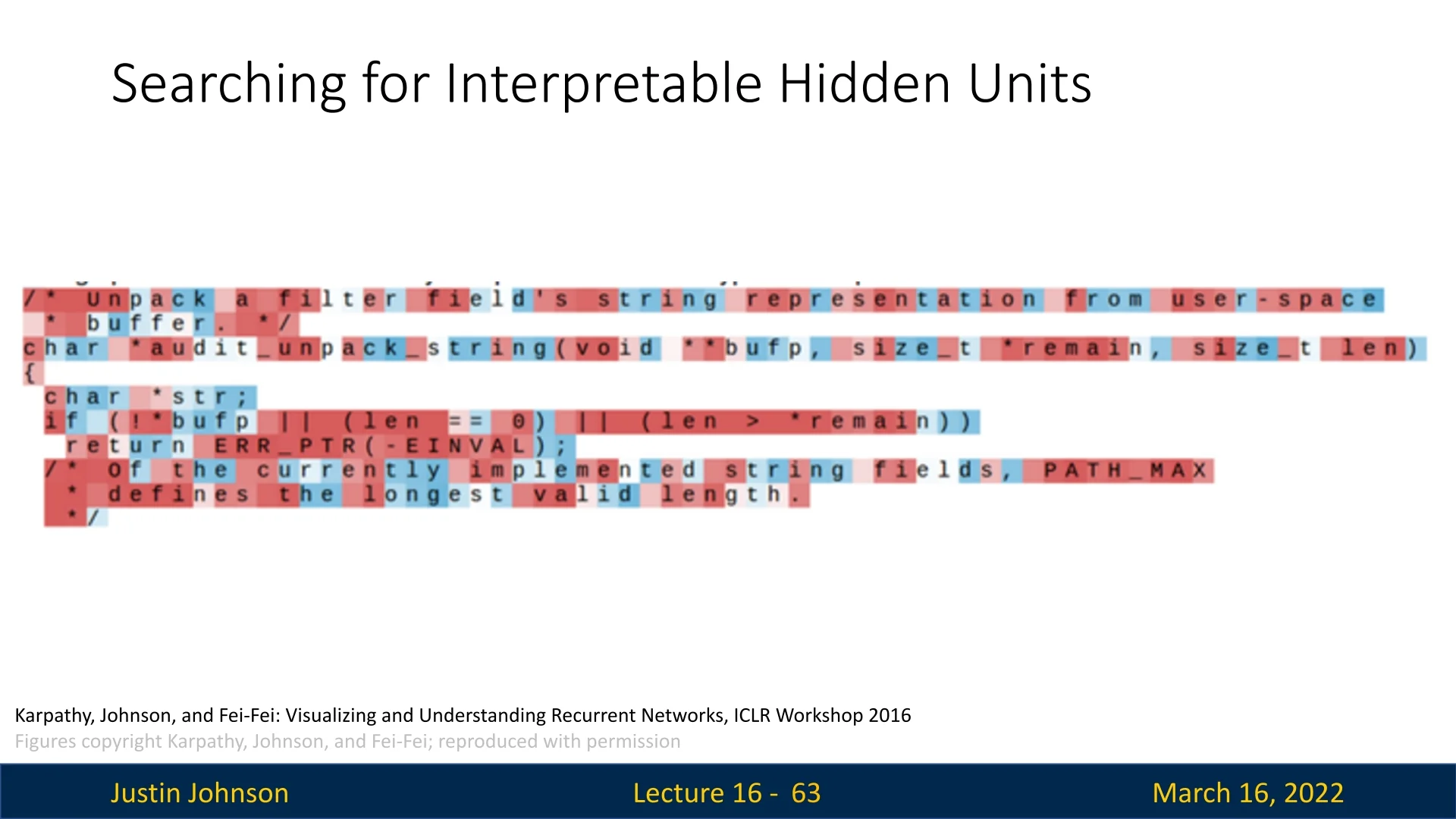

A study by Karpathy, Johnson, and Fei-Fei [275] explored this question by visualizing hidden states of an RNN trained on the Linux kernel source code. Since each hidden state is a vector passed through a \(\tanh \) activation function, every dimension in the hidden state has values in the range \([-1,1]\). The authors examined how different hidden state dimensions responded to specific characters in the sequence.

To interpret what RNN hidden units are learning, the authors colored text based on the activation value of a single hidden state dimension at each timestep:

- Red: Activation close to \(+1\).

- Blue: Activation close to \(-1\).

This visualization method allowed them to analyze whether certain hidden state dimensions captured meaningful patterns in the data.

As shown in Figure 16.9, many hidden unit activations appeared random and did not provide an intuitive understanding of what the RNN was tracking. However, in some cases, individual hidden state dimensions exhibited clear, meaningful behavior.

Interpretable Hidden Units

While many hidden state dimensions appear uninterpretable, some exhibit structured activation patterns corresponding to meaningful aspects of the data. Below are a few examples:

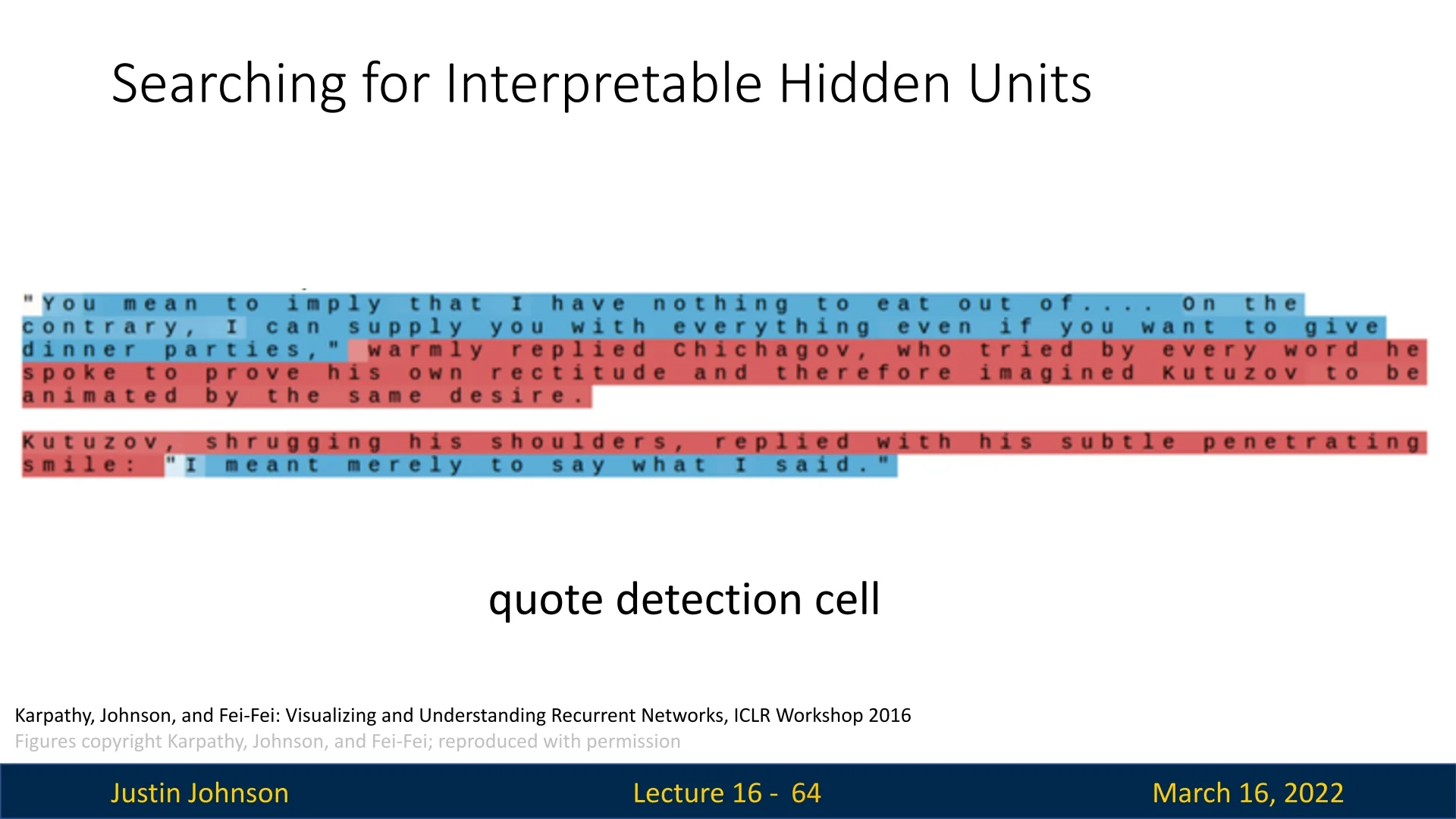

Some hidden units activate strongly in the presence of quoted text, as seen in Figure 16.10.

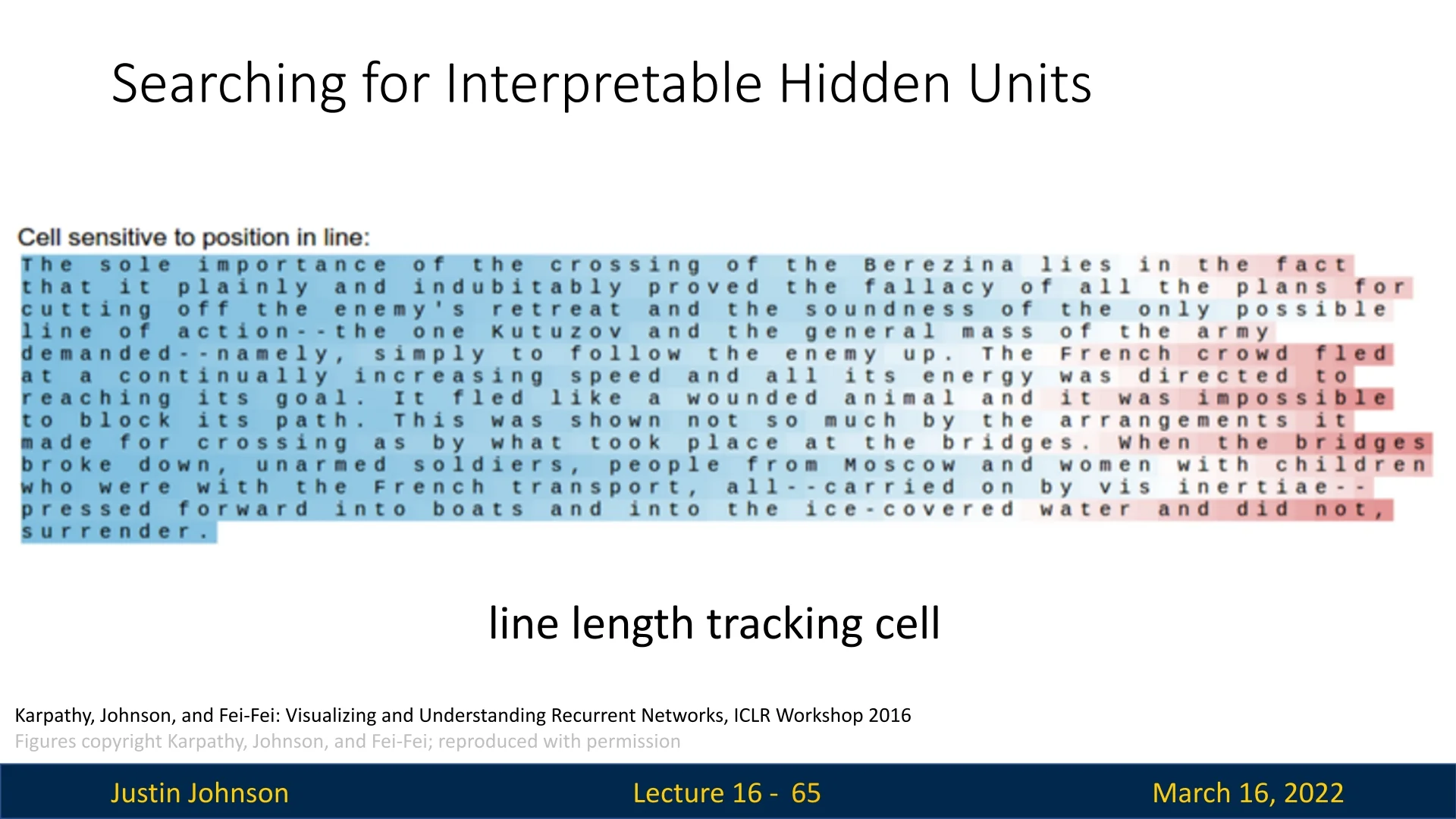

Line Length Tracking Cell Another hidden unit tracks the number of characters in a line, transitioning smoothly from blue to red as it approaches 80 characters per line (a common convention in code formatting), as shown in Figure 16.11.

This demonstrates that some RNN neurons track specific long-range dependencies, encoding useful properties of the dataset.

Other meaningful hidden state activations include:

- Comment Detector: Some units activate strongly in commented-out sections of code.

- Code Depth Tracker: Certain units track the depth of nested code structures (e.g., counting how many open brackets exist in C code).

- Keyword Highlighter: Some neurons respond selectively to keywords such as if, for, or return in programming languages.

Key Takeaways from Interpretable Units

The analysis by Karpathy et al. highlights several important insights:

- RNNs can learn abstract properties of sequences. Some hidden units respond to high-level features, such as quoted text, line length, or code structure.

- Not all hidden units are interpretable. Many dimensions in the hidden state vector appear to activate randomly, making it difficult to extract clear meaning from every neuron.

- Neurons behave differently based on the dataset. The same RNN architecture trained on different corpora may develop completely different internal representations.

While these findings provide insight into what RNNs learn, interpreting hidden states remains an open challenge in deep learning research. This motivates further study into techniques such as attention mechanisms and gated architectures, which offer more structured ways to track long-term dependencies.

16.6.3 Image Captioning

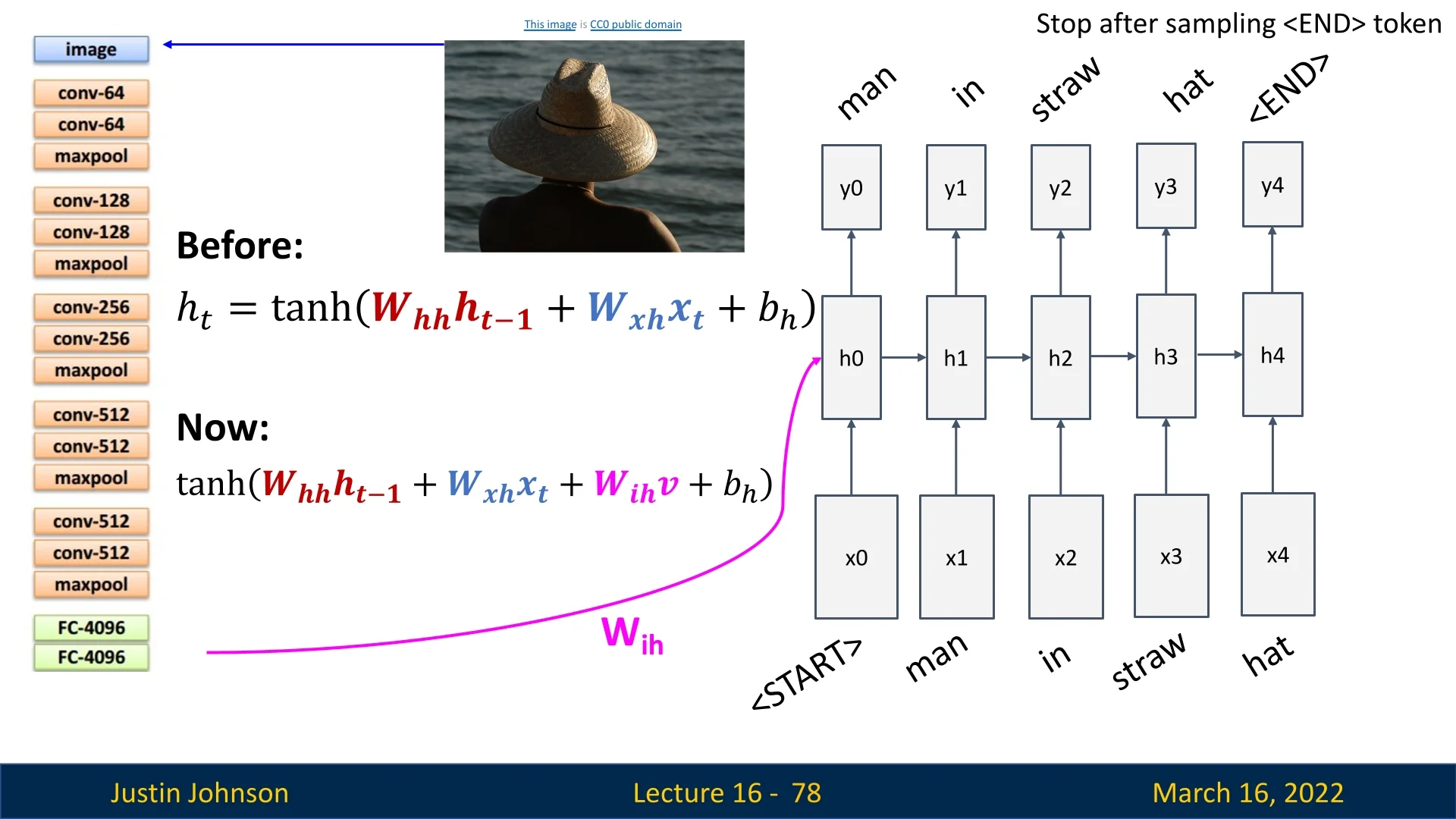

Image captioning is the task of generating a textual description of an image by combining computer vision (to extract meaningful features) and natural language processing (to generate coherent text). The standard pipeline consists of two main components:

- 1.

- Feature Extraction with a Pre-Trained CNN: A convolutional neural network (CNN), originally trained for image classification (e.g., on ImageNet), is used to encode the image into a high-level feature representation. The final fully connected layers are removed, leaving only/mostly the convolutional layers to produce an image embedding.

- 2.

- Caption Generation with an RNN: The extracted image features serve as additional input to an RNN, which generates a description one word at a time, starting from a special <START> token and stopping at an <END> token.

The standard RNN hidden state update equation: \[ \mathbf {h}_t = \tanh \big ( \mathbf {W}_{xh} \mathbf {x}_t + \mathbf {W}_{hh} \mathbf {h}_{t-1} \big ), \] is modified to incorporate the image features: \[ \mathbf {h}_t = \tanh \big ( \mathbf {W}_{xh} \mathbf {x}_t + \mathbf {W}_{hh} \mathbf {h}_{t-1} + \mathbf {W}_{ih} \mathbf {v} \big ), \] where:

- \( \mathbf {x}_t \) is the current input word,

- \( \mathbf {h}_{t-1} \) is the previous hidden state,

- \( \mathbf {v} \) is the image embedding from the CNN,

- \( \mathbf {W}_{ih} \) learns how to integrate image features into the sequence model.

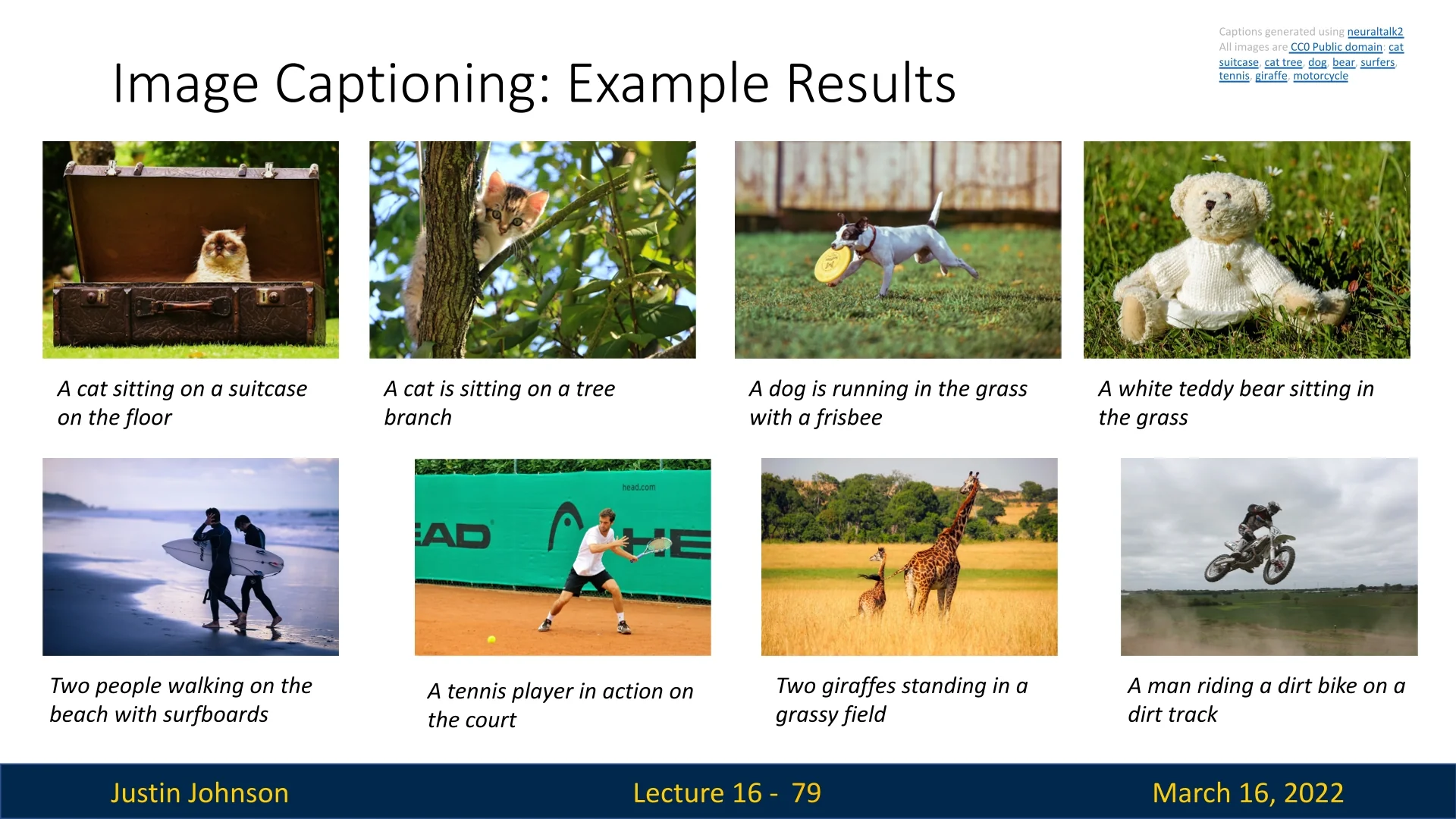

16.6.4 Image Captioning Results

When trained effectively, RNN-based image captioning models generate descriptions that align well with the content of an image.

Some strengths of the model include:

- Identifying objects and their relationships (e.g., ”a cat sitting on a suitcase”).

- Capturing spatial context within the scene.

- Producing fluent, grammatically correct sentences.

However, the model is limited in its reasoning abilities, often making systematic errors.

16.6.5 Failure Cases in Image Captioning

Despite generating plausible captions, RNN-based models struggle with dataset biases and lack true scene understanding.

Notable failure cases include:

- Texture Confusion: ”A woman is holding a cat in her hand.”

→ Incorrect. The model misinterprets a fur coat as a cat due to similar texture. - Outdated Training Data: ”A person holding a computer mouse on

a desk.”

→ Incorrect. Since the dataset predates smartphones, the model assumes any small handheld object near a desk is a computer mouse. - Contextual Overgeneralization: ”A woman standing on a beach

holding a surfboard.”

→ Incorrect. The model associates beaches with surfing due to frequent co-occurrence in the dataset. - Co-Occurrence Bias: ”A bird is perched on a tree branch.”

→ Incorrect. The model predicts a bird even though none are present, likely due to birds frequently appearing in similar scenes in the dataset. - Failure to Understand Actions: ”A man in a baseball uniform

throwing a ball.”

→ Incorrect. The model fails to distinguish between throwing and catching, highlighting a lack of true scene comprehension.